Learn how to detect AI cheating in technical interviews using behavioral cues, technical signals, and structured detection methods powered by Sherlock AI.

Abhishek Kaushik

Feb 5, 2026

AI tools have changed how technical work gets done, but they have also changed how candidates cheat during interviews. From AI-generated code to real-time assistance running on hidden devices, technical interviews are now one of the easiest places for AI misuse to go unnoticed. A recent analysis of nearly 20,000 interviews found that more than 38% of candidates showed signs of cheating behavior, with technical roles showing even higher rates of misuse. About 61% of those flagged would have passed traditional screening without detection, highlighting how easily AI-assisted performance can slip through conventional evaluation.

Detecting AI cheating requires more than asking harder questions. It requires understanding how AI is used during interviews, recognizing behavioral and technical signals, and using the right detection mechanisms to separate real skill from artificial assistance.

What AI Cheating Looks Like in Technical Interviews

AI cheating occurs when candidates rely on generative AI tools during coding rounds, system design discussions, or technical problem solving without disclosing it. This includes generating code, explanations, or debugging suggestions, and in more extreme cases, having someone else attend the interview (impersonation) or manipulating audio and video with AI.

Unlike traditional cheating, AI cheating often produces answers that appear clean, fast, and technically correct, making it harder to spot without structured detection.

Ways to Detect AI Cheating in Technical Interviews

AI cheating has changed the way technical interviews must be evaluated. While answers may appear correct, hidden AI assistance often creates detectable inconsistencies. The following methods highlight how interviewers can identify AI cheating during technical interviews.

1. Detecting AI-Generated Code in Live Coding Rounds

AI-generated code is commonly used during live technical interviews. While the solution may run successfully, detection depends on evaluating how the candidate interacts with the code.

The candidate struggles to explain the logic behind their solution.

They cannot justify why a specific algorithm or data structure was chosen.

Small changes or follow-up requirements cause long pauses or confusion.

Debugging becomes difficult because the candidate did not author the code.

Explanations sound generic and disconnected from the actual problem.

Example

A candidate submits an optimized solution but fails to explain time complexity or modify the code to handle a simple edge case.

2. Detecting Real-Time AI Assistance Through Second Devices

Some candidates use AI tools on phones or secondary laptops while interviewing. Since this happens outside the interview platform, detection relies on behavioral patterns.

Repeated eye movement away from the primary screen.

Delayed responses after technical questions are asked.

Sudden improvement in answer quality without visible reasoning.

Inconsistent engagement during problem solving.

Response timing does not match the complexity of the question.

Example

The candidate pauses, looks away from the screen, and then delivers a polished answer that they cannot expand on further.
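
If the interview platform logs when each question ends and when the candidate starts answering, the timing cue can be checked against the candidate's own baseline instead of a gut feeling. The sketch below is a minimal illustration, not a production detector: it assumes a hypothetical list of per-question response delays in seconds and flags delays that sit far from the candidate's median, using the modified z-score based on the median absolute deviation (MAD). The 3.5 cutoff is a common rule of thumb for outliers, not a value calibrated for interviews.

```python
from statistics import median


def flag_latency_outliers(latencies, threshold=3.5):
    """Return indices of responses whose delay is unusual for this candidate.

    latencies: hypothetical per-question response delays in seconds, as logged
    by the interview platform (time from end of question to start of answer).
    Uses the modified z-score 0.6745 * (x - median) / MAD.
    """
    if len(latencies) < 3:
        return []  # too few answers to establish a personal baseline
    med = median(latencies)
    mad = median(abs(x - med) for x in latencies)
    if mad == 0:
        # At least half the delays equal the median; skip instead of dividing by zero.
        return []
    flagged = []
    for i, x in enumerate(latencies):
        score = 0.6745 * (x - med) / mad
        if abs(score) > threshold:  # unusually slow or unusually fast
            flagged.append(i)
    return flagged


# The last answer took 28 s while the others took 3 to 6 s.
print(flag_latency_outliers([4, 5, 3, 6, 5, 4, 28]))
```

A flagged delay is a reason to ask a follow-up question on that answer, not proof of cheating on its own.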

3. Detecting Hidden Browser Extensions and AI Overlays

AI-powered browser extensions can provide real-time hints or complete solutions during coding interviews. These tools often leave technical traces in the interview session that can be detected.

Frequent tab or window switching during coding tasks.

Large blocks of code appearing instantly through copy-paste.

Long inactivity followed by rapid code insertion.

Abrupt improvement in code quality without explanation.

Typing patterns that do not reflect normal problem solving behavior.

Example

No typing activity for 30 seconds, followed by an entire function appearing in the editor at once.
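
The pattern in this example is simple to express as a rule over an editor event log. A minimal sketch, assuming the coding platform records each edit with a timestamp and the number of characters it inserted (the EditEvent type is hypothetical). The 30-second idle gap mirrors the example above, and the 200-character minimum is an arbitrary illustration.

```python
from dataclasses import dataclass


@dataclass
class EditEvent:
    # Hypothetical event shape; real platforms log edits in their own formats.
    t: float       # seconds since the coding task started
    inserted: int  # characters added by this single edit (keystroke or paste)


def find_idle_bursts(events, idle_seconds=30.0, min_chars=200):
    """Return edits that add a large block of text right after a long idle gap."""
    bursts = []
    prev_t = None
    for ev in sorted(events, key=lambda e: e.t):
        # For the first edit, measure the gap from the start of the task.
        gap = ev.t - prev_t if prev_t is not None else ev.t
        if gap >= idle_seconds and ev.inserted >= min_chars:
            bursts.append(ev)
        prev_t = ev.t
    return bursts


events = [
    EditEvent(1.0, 1), EditEvent(1.3, 1), EditEvent(2.0, 1),
    EditEvent(35.0, 420),  # 33 s of silence, then a whole function appears
    EditEvent(40.0, 250),  # large paste, but without a preceding idle gap
]
print([b.t for b in find_idle_bursts(events)])
```

Pasting a helper the candidate wrote earlier in the session would also match, so a flagged burst is best followed by asking the candidate to walk through the inserted code.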

4. Detecting Proxy Interviewing and AI Impersonation

Proxy interviewing and AI impersonation involve someone else attending the interview or manipulating audio and video. These cases are rare but highly impactful.

Facial movements that do not align with speech.

Voice tone or accent changes across interview rounds.

Different communication styles between interviews.

Inconsistent technical performance across stages.

Identity signals that do not remain consistent over time.

Example

A candidate performs exceptionally well in one technical round but struggles with basic questions in a follow-up interview.
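
Comparing rubric scores across rounds makes this kind of inconsistency visible. A minimal sketch, assuming every round is scored on the same hypothetical 1 to 5 rubric and listed in interview order; the max_gap of 2 points is illustrative only.

```python
def flag_round_inconsistency(scores, max_gap=2.0):
    """Return (earlier_round, later_round, gap) for consecutive rounds that diverge sharply.

    scores: list of (round_name, score) in interview order, all on the same
    hypothetical rubric scale.
    """
    flagged = []
    for (name_a, a), (name_b, b) in zip(scores, scores[1:]):
        gap = abs(a - b)
        if gap > max_gap:
            flagged.append((name_a, name_b, gap))
    return flagged


rounds = [("phone screen", 4.5), ("live coding", 4.75), ("follow-up", 1.5)]
print(flag_round_inconsistency(rounds))
```

A sharp drop can have innocent causes, such as a harder question set, so it works best as a prompt to compare the two rounds rather than as a verdict.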

5. Detecting AI Cheating Through In-Meeting Communication Signals

In-meeting chat offers another, limited layer of visibility during Zoom-based technical interviews, but it comes with important constraints.

Zoom can record in-meeting chat messages if chat recording is enabled.

Interview hosts can view public chat messages and any private messages sent to them within the meeting.

This visibility covers only communication inside Zoom.

Candidates can still use external messaging apps or AI tools on other devices.

Zoom cannot detect communication happening outside its interface.

Example

Even if no suspicious chat activity appears in Zoom, a candidate may still be receiving AI assistance through a phone or external messaging platform.

Technical Signals That AI Cheating Is Happening

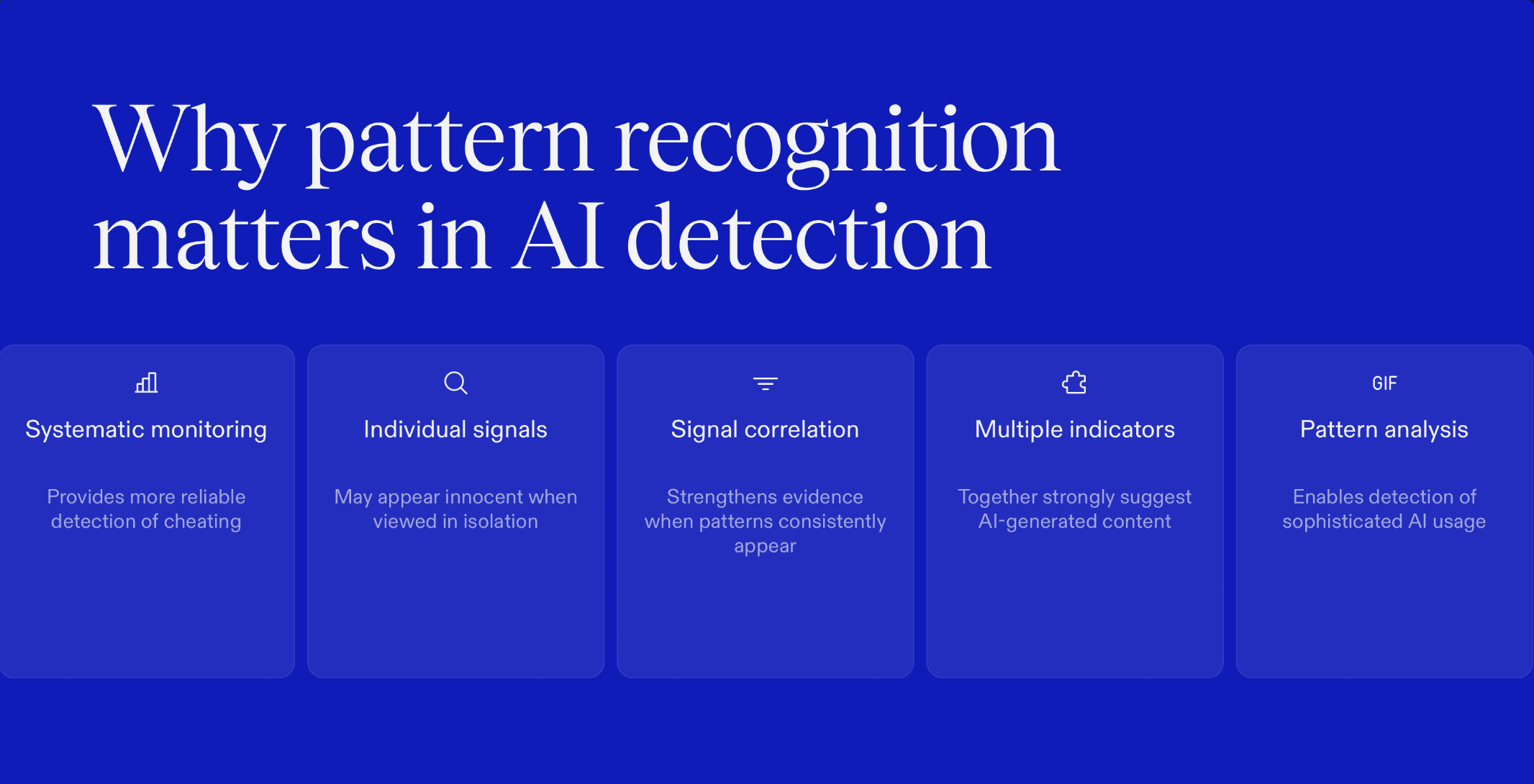

Behavioral cues are important, but technical interviews also generate system-level signals that can reveal AI misuse. These indicators provide objective evidence that external tools may be influencing a candidate’s performance.

Key technical signals to watch for

Frequent tab or application switching

Repeated switching between tabs or applications during a coding interview can indicate that the candidate is consulting external tools. It becomes more suspicious when switching occurs immediately after a question is asked or during complex problem solving.

Copy-paste activity

Large blocks of code or text appearing instantly suggest AI-generated content being pasted rather than written incrementally. Human candidates typically build solutions step by step.

Typing speed mismatch

A mismatch between typing activity and solution complexity is a common indicator. Candidates may type very little yet produce complex solutions, or explain answers fluently while barely interacting with the code editor.

Screen focus loss

Repeatedly looking away from the main interview screen often suggests the candidate is reading AI-generated responses from another device or application.

Combined signal patterns

Individually, these signals may appear harmless. When multiple indicators occur together, they strongly suggest AI cheating during technical interviews.
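
One way to express this "patterns over single incidents" rule is a weighted score that only recommends review when more than one kind of signal is present. A minimal sketch: the signal names, weights, and thresholds below are hypothetical and uncalibrated, and this is not how any particular tool, including Sherlock AI, scores interviews.

```python
from collections import Counter

# Hypothetical weights for illustration only; not calibrated on real interview data.
WEIGHTS = {
    "tab_switch": 1.0,
    "large_paste": 2.0,
    "typing_mismatch": 1.5,
    "focus_loss": 1.0,
}


def assess(signals, min_distinct=2, review_threshold=4.0):
    """Combine observed signals into (score, distinct_kinds, needs_review).

    signals: names of signals observed during one interview,
    e.g. ["tab_switch", "large_paste"]. Unknown names are ignored.
    A single kind of signal never triggers review on its own, however often it repeats.
    """
    counts = Counter(s for s in signals if s in WEIGHTS)
    score = sum(WEIGHTS[name] * n for name, n in counts.items())
    distinct = len(counts)
    needs_review = distinct >= min_distinct and score >= review_threshold
    return score, distinct, needs_review


print(assess(["tab_switch"] * 6))                               # many switches, one kind
print(assess(["tab_switch", "large_paste", "focus_loss"]))      # several kinds together
```

Requiring at least two distinct signal types keeps a candidate who simply switches tabs often, for instance to reread the problem statement, from being flagged on that alone.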

How Sherlock AI Detects AI Cheating in Technical Interviews

Sherlock AI is purpose-built to identify modern hiring fraud, including AI-assisted cheating that traditional interview methods often miss. It works alongside technical interviews to surface hidden signals that indicate external assistance, without interrupting the candidate experience.

1. Continuous Interview Activity Monitoring

Sherlock AI monitors interview activity in real time to detect patterns that suggest AI usage. This includes tracking tab switching, application switching, and unusual interaction behavior during coding or problem-solving tasks. Repeated context switching at critical moments is flagged as a potential risk signal.
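
Sherlock AI does not publish its implementation, so the following is only a generic sketch of the idea of weighting context switches by when they happen. It assumes the platform records when each question is asked and when the candidate's tab or window loses focus (in a browser, such timestamps could come from the visibilitychange or blur events); the function name and the 20-second window are hypothetical choices.

```python
from bisect import bisect_left, bisect_right


def switches_after_questions(question_times, switch_times, window=20.0):
    """Count focus-loss events within `window` seconds after each question is asked.

    question_times: timestamps (seconds) when each question was asked.
    switch_times: timestamps (seconds) when the candidate's tab or window lost focus.
    Returns one count per question, in the order given.
    """
    switches = sorted(switch_times)
    counts = []
    for q in question_times:
        lo = bisect_left(switches, q)            # first switch at or after the question
        hi = bisect_right(switches, q + window)  # first switch past the window
        counts.append(hi - lo)
    return counts


print(switches_after_questions([0, 100, 200], [5, 12, 50, 103, 250]))
```

A consistently nonzero count right after questions says more than the total number of switches across the session, which is why the sketch anchors each count to a question.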

2. Behavioral Pattern Analysis

Rather than relying on single red flags, Sherlock AI analyzes behavioral patterns across the entire interview. It evaluates response timing, engagement consistency, screen focus behavior, and interaction flow. When these patterns deviate from normal human problem-solving behavior, the system identifies them as anomalies.

3. Detection of AI-Generated Code and Responses

Sherlock AI identifies characteristics commonly associated with AI-generated output. This includes abrupt code insertion, copy-paste behavior, and response structures that do not align with real-time reasoning or explanation quality. These signals help differentiate genuine skill from AI-assisted performance.

4. Identity and Presence Verification

To address proxy interviewing and impersonation risks, Sherlock AI verifies identity continuity throughout the interview process. It detects inconsistencies in presence, behavior, and interaction patterns that may indicate someone else is participating or AI manipulation is involved.

5. Risk Scoring and Actionable Insights

Instead of forcing recruiters to interpret raw data, Sherlock AI consolidates detection signals into clear risk indicators. These insights help hiring teams make informed decisions without relying on assumptions or subjective judgment.

6. Designed for Fair and Uninterrupted Interviews

Sherlock AI operates silently in the background, ensuring that honest candidates are not disrupted. Its detection approach focuses on patterns and evidence, helping recruiters maintain fairness while protecting the integrity of technical hiring.

Key Takeaways for Hiring Teams

AI cheating in technical interviews is subtle, scalable, and increasingly common. Candidates can rely on AI tools, hidden devices, or even impersonation techniques, producing answers that appear correct while masking their true skills.

To detect AI cheating effectively, hiring teams must focus on patterns rather than isolated events. Relying solely on manual evaluation is no longer sufficient. Structured detection approaches, powered by intelligent monitoring tools, help ensure that hiring decisions are based on genuine technical ability, not AI-assisted performance.

Final Thoughts

AI tools have revolutionized technical work, but their misuse during interviews can undermine hiring integrity. Organizations that fail to detect AI cheating risk onboarding candidates who cannot perform independently, which can lead to project delays, team inefficiency, and costly rehires.

Sherlock AI provides a comprehensive solution to this problem. By combining behavioral pattern analysis, technical signal monitoring, and identity verification, Sherlock AI enables recruiters to detect AI-assisted cheating in real time without disrupting the candidate experience. Its risk scoring and actionable insights help hiring teams make confident, evidence-based decisions while maintaining fairness and transparency throughout the interview process.

By adopting detection-focused interview strategies and leveraging tools like Sherlock AI, organizations can protect the quality, fairness, and accuracy of their technical hiring process, ensuring that every candidate’s skills are evaluated authentically.