An overview of how AI affects interview fairness and why strong hiring depends on assessing reasoning, not surface-level polish.

Abhishek Kaushik

Dec 22, 2025

AI does not just help candidates.

It changes who has an advantage during interviews.

AI can:

Help candidates organize answers

Reduce anxiety

Support non-native speakers

But AI can also:

Hide the lack of fundamental skills

Reward memorization over reasoning

Enable proxy or coached participation

Make confident candidates look stronger than competent ones

The result:

Interviews can become less about skill and more about access to tools.

To keep interviews fair, we must evaluate thinking, not polish.

The Four Ways AI Can Create Unfairness in Interviews

1. It Amplifies Confidence Gaps

AI helps candidates speak smoothly, even when:

They lack direct experience

Their understanding is surface-level

They are repeating rehearsed narrative patterns

This gives polished storytellers an advantage over practitioners with deeper but less rehearsed experience.

AI‑based hiring systems can shape the candidate experience by favouring those who present their background in polished, structured formats over those with deeper but less rehearsed experience.

Real Example

A strong engineer who communicates plainly may score lower than a weak engineer reading a polished, AI-generated behavioral script.

This is a fairness distortion.

2. It Rewards Memorization Instead of Understanding

AI coaching centers now sell:

System design scripts

Leadership behavior stories

“Perfect interview answers”

By themselves, these are not fake or dishonest.

But when a candidate cannot:

Paraphrase in their own words

Explain tradeoffs

Adapt when assumptions shift

Then the answer is not their own thinking.

The interview signal becomes performance, not competence.

3. It Enables Background or Proxy Participation

Remote interviews make it easy to:

Have another person feed answers through a second device

Receive whisper coaching

Use face or voice masking software

This disadvantages:

Honest candidates

Internal candidates competing for promotions

Early career talent without access to coaching networks

Sherlock AI fixes this automatically through:

Identity consistency verification

Voice match detection

Analysis of behavioral reasoning patterns

This restores fairness.

4. It Overweights Language Skill and Underweights Reasoning

Without guardrails, AI makes answers:

Longer

Cleaner

More structured

But longer answers are not better answers. Structured language is not real understanding.

Research on bias in AI-enabled hiring shows that AI often rewards longer, cleaner answers, mistaking polish for understanding. Interviews need to focus on how candidates think, decide, and adapt, not how smoothly they speak.

A fair interview evaluates:

How the candidate reasons under constraints

How they make decisions

How they update their approach when something changes

These signals are hard to fake with AI.

So the Problem Is Not the AI

The problem is interview design that:

Measures fluency, not reasoning

Measures storytelling, not decisions

Measures confidence, not ownership

Measures output, not adaptability

AI is simply a multiplier:

It multiplies good reasoning when used well

It multiplies fraud or performance theater when misused

How to Restore Fairness

Recruiters and interviewers can level the playing field by making these shifts:

| Shift | Old Way | Fair and AI-Aware Approach |
|---|---|---|
| Understanding | “Tell me your story.” | “Paraphrase the problem in your own words.” |
| Skill signal | Correct answers | Tradeoff reasoning and adaptation to constraints |
| Code evaluation | Final output | Debugging and the iteration process |
| Identity | Assumed | Verified across steps |

These do not make interviews harder. They make interviews more real.
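For teams that score interviews numerically, the shifts above could be encoded as a simple rubric. The sketch below is only one possible illustration: the dimension names, the weights, the 1-5 scale, and the `reasoning_score` function are all hypothetical and do not come from any specific tool. It treats identity as a gate rather than a scored dimension, and it leaves fluency out of the score entirely.

```python
from dataclasses import dataclass
from typing import Dict, Optional

# Hypothetical weights for illustration only; the article prescribes no numbers.
# They favor reasoning signals, and fluency is deliberately not scored.
WEIGHTS: Dict[str, float] = {
    "paraphrase": 0.25,          # restates the problem in their own words
    "tradeoff_reasoning": 0.35,  # explains tradeoffs under constraints
    "adaptation": 0.25,          # updates the approach when assumptions shift
    "debugging_process": 0.15,   # how they debug and iterate, not the final output
}


@dataclass
class InterviewRatings:
    ratings: Dict[str, int]   # interviewer ratings on a 1-5 scale, keyed like WEIGHTS
    identity_verified: bool   # outcome of whatever identity check the team uses


def reasoning_score(r: InterviewRatings) -> Optional[float]:
    """Return a weighted 1-5 score, or None if identity was not verified."""
    if not r.identity_verified:
        return None  # identity is a gate, not a scored dimension
    missing = set(WEIGHTS) - set(r.ratings)
    if missing:
        raise ValueError(f"missing ratings: {sorted(missing)}")
    for name in WEIGHTS:
        if not 1 <= r.ratings[name] <= 5:
            raise ValueError(f"{name} must be between 1 and 5")
    return sum(WEIGHTS[name] * r.ratings[name] for name in WEIGHTS)


if __name__ == "__main__":
    candidate = InterviewRatings(
        ratings={
            "paraphrase": 4,
            "tradeoff_reasoning": 5,
            "adaptation": 3,
            "debugging_process": 4,
        },
        identity_verified=True,
    )
    print(reasoning_score(candidate))
```

Returning `None` for an unverified identity, instead of a low number, keeps an unverified interview from being ranked alongside verified ones. A team adopting something like this would need to choose and validate its own dimensions and weights.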

What Sherlock AI Adds

Sherlock AI restores fairness by:

Confirming that the person interviewing is the applicant

Detecting AI-coached or proxy answers through reasoning inconsistency

Highlighting behavior mismatches in real time

Helping interviewers evaluate thinking patterns, not word polish

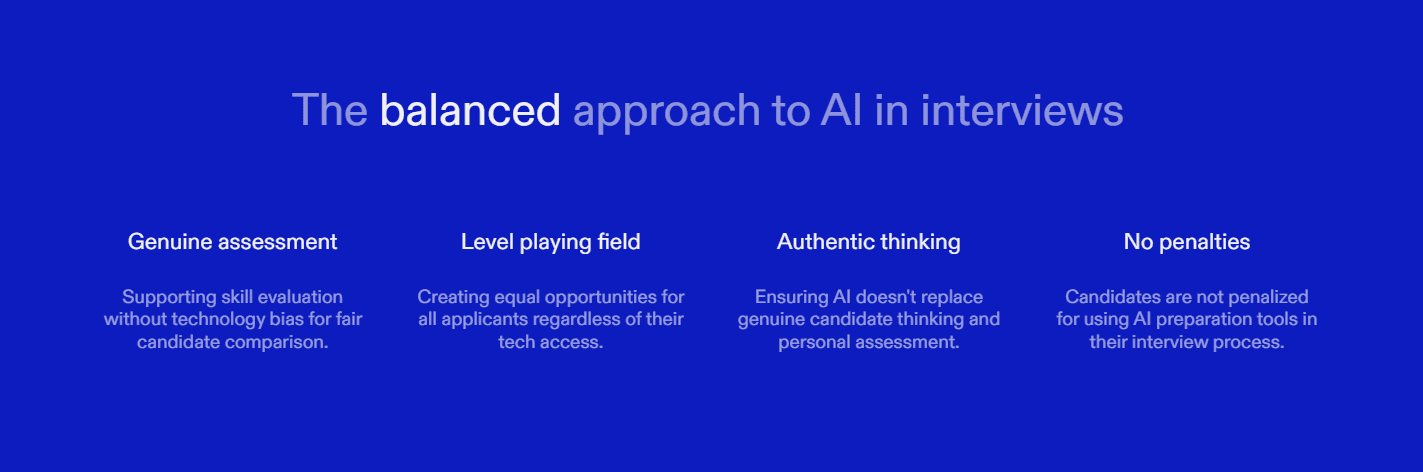

Sherlock AI does not punish AI usage. It ensures AI does not replace the candidate's thinking.

Conclusion

AI does not automatically make interviews unfair. It makes traditional interview scoring methods unreliable.

To keep hiring fair:

Evaluate reasoning, not fluency

Evaluate adaptability, not confidence

Verify identity rather than assuming it

Ask questions that require thinking, not repeating

Fairness is not about banning AI. Fairness is about ensuring the candidate’s mind is what is being evaluated. This is how hiring remains accurate and ethical in the AI era.