
Clear methods for identifying ParakeetAI usage during interviews and safeguarding hiring decisions from AI-driven deception.

Abhishek Kaushik

Jan 7, 2026

Interviews were designed to evaluate how candidates think, decide, and learn from real experience.

That premise is now broken.

In a recent survey of professionals, 20% admitted to secretly using AI tools in interviews, and 55% agreed that AI-assisted interviewing has become the norm.

Tools like ParakeetAI can generate answers in real time during live interviews, not as preparation, but as active participation. To interviewers, everything appears normal: candidates sound confident, structured, and thoughtful. But what’s being evaluated is no longer the candidate’s reasoning. It’s the AI’s ability to produce a polished response on demand.

This creates a serious risk for hiring teams. When interviews reward fluency over decision-making and ownership, honest candidates are disadvantaged and weak hires slip through.

What is ParakeetAI?

ParakeetAI represents a category of AI tools designed to actively assist candidates during live interviews. Unlike traditional interview preparation tools, these systems operate in real time.

They sit in the same family as other live interview copilots that don’t just prep candidates, but actively shape answers as questions are being asked.

To the interviewer, the interaction appears normal. The candidate sounds articulate, confident, and thoughtful. However, much of the reasoning and response construction is being handled by the AI, not the candidate.

Why traditional interview signals fail

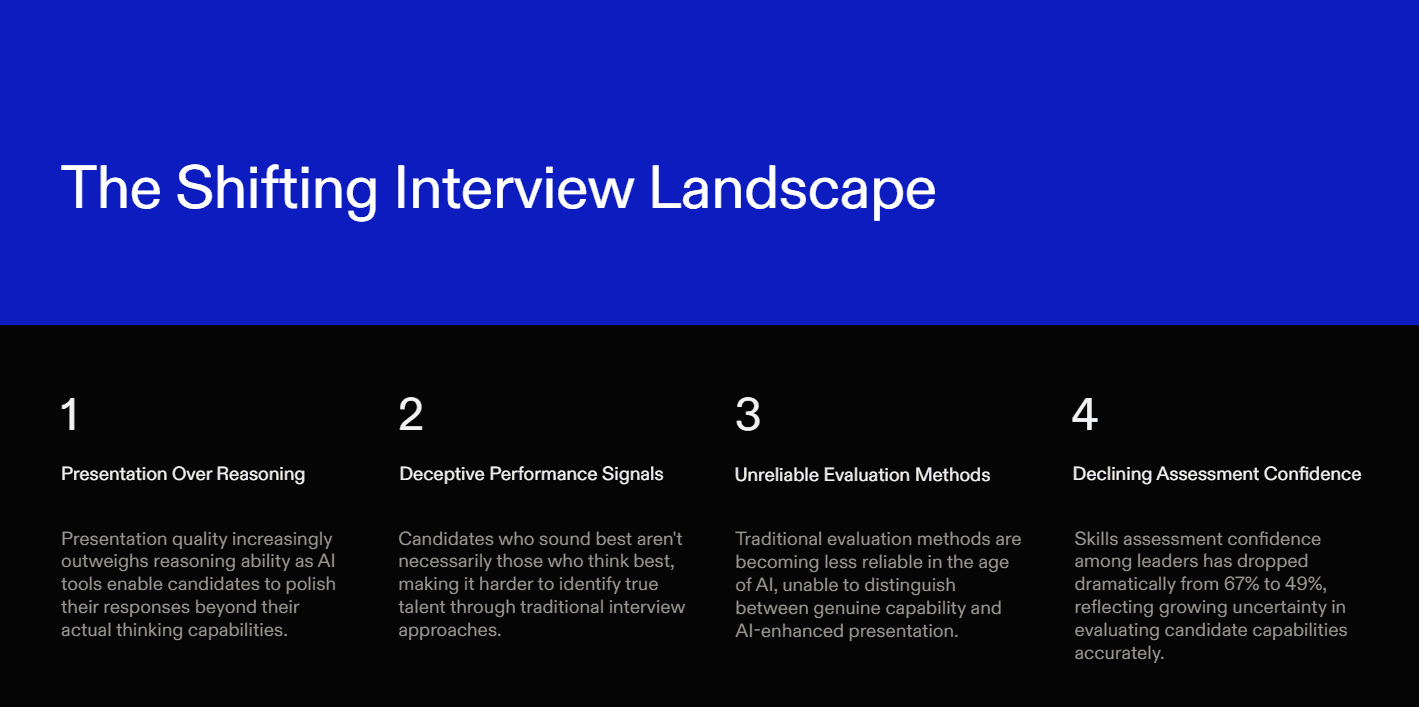

Traditional interviews rely on signals such as fluency, confidence, and structured storytelling as proxies for competence. These signals once correlated reasonably well with a candidate’s ability to think, decide, and reflect on real experience. Real-time AI breaks that link.

Gartner’s 2025 analysis found that between 2024 and 2025, the share of leaders who said assessing real skills is difficult jumped from 19% to 34%, and overall confidence in hiring accuracy dropped from 67% to 49%. Nearly 71% of leaders said AI makes it harder to evaluate technical skills accurately.

AI can now reproduce these signals without underlying understanding:

Fluency without comprehension – AI can generate smooth, well-organized answers without grasping the trade-offs, constraints, or rationale behind them.

Confidence without judgment – AI-generated responses sound decisive even when the logic is shallow or generic, causing confidence to be mistaken for competence.

Storytelling without ownership – Behavioral narratives and project walkthroughs can be scripted in real time. Candidates may sound like decision-makers despite having played limited or peripheral roles.

Adaptation without reasoning – Standard follow-up questions are predictable. AI can respond quickly and coherently while avoiding genuine, context-specific decision-making.

As a result, interviews increasingly reward presentation quality over reasoning, ownership, and judgment. The candidate who sounds the best is no longer reliably the candidate who thinks the best.

That’s the core interview integrity problem many remote-first teams are running into today.

The Core Risk: Reasoning Substitution

The primary risk of Parakeet-style tools isn’t AI usage itself. It’s that AI can substitute for the candidate’s own reasoning during the interview.

Interviews are designed to surface how people think, make decisions, and navigate trade-offs. When answers are generated in real time by AI, those signals collapse. What remains is fluency without judgment and confidence without ownership.

Candidates may sound competent, but the underlying decision logic is missing. Trade-offs are flattened, constraints disappear, and idealized answers replace the messy realities of real work. This creates a false sense of capability, even in areas where the candidate has little direct experience.

Over time, this shifts interviews from evaluating thinking to evaluating presentation, increasing the risk of hiring people who sound right but cannot reason independently when faced with real problems.

High-Signal Interview Tests That Break AI Assistance

Detecting AI assistance isn’t about catching candidates cheating. It’s about designing interviews that require real reasoning, ownership, and decision-making, signals that real-time AI cannot reliably substitute for.

The following interview tests shift evaluation away from polish and toward verifiable thinking.

1. Ownership Challenges

Ask candidates to separate what they personally decided from what the team executed.

For example: “Which specific decisions did you personally make, and what trade-offs did you consider?”

AI can generate credible summaries of team work, but it struggles when pressed for granular, personal decision-making and rationale.

2. Constraint Injection

Introduce a new limitation or change partway through a scenario.

For example, add a budget cut, resource constraint, or regulatory requirement to a system the candidate just described.

Strong candidates can adapt and explain how their priorities shift. AI responses tend to revert to generic, idealized solutions that ignore real trade-offs.

3. Retrospective Reflection

Ask questions such as: “What would you do differently?” or “Where did this decision go wrong?”

Genuine experience includes mistakes, reversals, and learning. AI-generated answers are often polished and risk-free, avoiding concrete failures or uncomfortable trade-offs.

4. Timeline Reversals

Ask candidates to explain events out of order or explore counterfactuals: “What broke first?” or “What would have happened if this decision had been delayed?”

This tests causal understanding. AI often struggles with non-linear reasoning tied to real project dynamics.

5. Assumption Probing

Follow up by asking: “What assumptions did you make?” and “Which ones turned out to be wrong?”

Strong candidates can articulate context-specific assumptions and trade-offs. AI answers tend to rely on generic best practices rather than lived constraints.

Practical Tip: Combine multiple tests in a single conversation. Start with ownership, inject a constraint, then ask for reflection. This layered approach quickly reveals whether reasoning is authentic or merely well-presented.
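The layered sequence in this tip can be written down as a reusable template. The following is a minimal sketch, not a prescribed tool: the `Probe` class, the `LAYERED_FLOW` tuple, the `build_flow` function, and the constraint prompt wording are all hypothetical names and phrasing introduced here for illustration. The ownership and reflection prompts adapt the example questions above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Probe:
    test: str    # which test from this article the prompt belongs to
    prompt: str  # wording the interviewer adapts to the candidate's story


# Order follows the tip: ownership first, then a constraint, then reflection.
# The prompt texts are illustrative, not a fixed script.
LAYERED_FLOW = (
    Probe("ownership",
          "Which specific decisions on {project} did you personally make, "
          "and what trade-offs did you consider?"),
    Probe("constraint",
          "Suppose {constraint} had applied to {project}. "
          "What would you have changed first?"),
    Probe("reflection",
          "Looking back at {project}, what would you do differently?"),
)


def build_flow(project: str, constraint: str) -> list[str]:
    """Fill each prompt with details taken from the candidate's own answer."""
    return [p.prompt.format(project=project, constraint=constraint)
            for p in LAYERED_FLOW]


if __name__ == "__main__":
    for question in build_flow("the billing migration", "a 30% budget cut"):
        print(question)
```

The project and constraint are filled in from what the candidate has just said, so the questions cannot be prepared in advance. The interviewer still decides when to move from one layer to the next.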

By using these high-signal tests, interviews shift from evaluating how good answers sound to evaluating how decisions were actually made. They also expose many of the same telltale patterns you see when candidates lean on scripted or pre-generated responses.

Interview Design Patterns That Prevent AI Abuse

The goal isn’t to catch candidates using AI. It’s to structure interviews so that only authentic thinking, ownership, and adaptability can succeed, regardless of polish or rehearsal.

1. Branching Conversations

Linear Q&A is predictable and easy for AI to handle. Instead, let the conversation evolve based on candidate responses.

Example: If a candidate describes a project decision, follow up with: “How would you adjust if the team had half the resources?”

Dynamic paths force real-time reasoning and expose gaps in AI-generated answers.

2. Decision-Focused Evaluation

Focus on why candidates made choices, not just what they did.

Example: “Why did you choose this approach over alternatives, and what impact did it have?”

This uncovers critical thinking that AI cannot convincingly replicate in context.

3. Mini Case Simulations

Present short, realistic scenarios requiring prioritization, problem-solving, or constraint navigation.

AI can offer generic solutions, but struggles when scenarios evolve in real time or require nuanced judgment.

4. De-Emphasize Style and Fluency

Evaluate reasoning over presentation. Candidates who pause, think aloud, and explore trade-offs reveal true decision-making. Polished or charismatic answers no longer indicate competence.

5. Layered Questioning

Combine multiple patterns in one flow.

For example, start with a behavioral question, inject a constraint, and follow with reflective analysis. This makes it difficult for AI to maintain consistent, plausible answers across multiple dimensions.

Practical Tip: Document answers carefully. Even small inconsistencies or vague explanations are useful signals. Structured notes and evidence-based scoring amplify your ability to separate authentic reasoning from AI polish.
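One way to make structured notes and evidence-based scoring concrete is a small scorecard that refuses a score unless written evidence is attached. This is a sketch under stated assumptions: the `Scorecard` and `Note` classes, the four dimension names, and the 1 to 4 scale are hypothetical choices drawn from the themes of this article, not an established rubric. Fluency is deliberately left out of the dimensions.

```python
from dataclasses import dataclass, field
from statistics import mean

# Hypothetical rubric dimensions based on the tests described in this article.
DIMENSIONS = ("ownership", "constraint_adaptation", "reflection", "assumptions")


@dataclass
class Note:
    dimension: str  # one of DIMENSIONS
    score: int      # 1 (vague or generic) to 4 (specific and verifiable)
    evidence: str   # what the candidate actually said


@dataclass
class Scorecard:
    candidate: str
    notes: list[Note] = field(default_factory=list)

    def add(self, dimension: str, score: int, evidence: str) -> None:
        """Record one observation; reject scores without written evidence."""
        if dimension not in DIMENSIONS:
            raise ValueError(f"unknown dimension: {dimension}")
        if not 1 <= score <= 4:
            raise ValueError("score must be between 1 and 4")
        if not evidence.strip():
            raise ValueError("every score needs written evidence")
        self.notes.append(Note(dimension, score, evidence))

    def summary(self) -> dict[str, float]:
        """Average score for each dimension that has at least one note."""
        result = {}
        for dim in DIMENSIONS:
            scores = [n.score for n in self.notes if n.dimension == dim]
            if scores:
                result[dim] = mean(scores)
        return result

    def unprobed(self) -> list[str]:
        """Dimensions the interviewer has not yet asked about."""
        covered = {n.dimension for n in self.notes}
        return [d for d in DIMENSIONS if d not in covered]
```

A panel could compare `summary()` across interviewers and use `unprobed()` to see which tests were skipped. Because every score carries a quote or paraphrase, inconsistencies between rounds are easier to spot.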

Outcome: By integrating these patterns, interviews become resilient to AI assistance, emphasizing thinking, adaptability, and ownership rather than presentation quality.

For teams formalizing their toolstack, there’s now an entire category of interview cheating detection tools built for these AI-era risks.

The Future of Interviews in an AI-Assisted World

AI is no longer a distant possibility. It is already reshaping how candidates prepare and perform in interviews. The future of hiring won’t be about banning AI, but about designing processes that require genuine human thinking.

Interviews will increasingly emphasize:

Reasoning under uncertainty – Evaluate options, weigh trade-offs, and make real-time decisions.

Ownership and accountability – Focus on who actually drove outcomes, not just participation.

Dynamic, adaptive questioning – Branching conversations, constraint changes, and probing follow-ups will become standard.

Auditability and fairness – Tools like Sherlock AI can capture reasoning, identify gaps, and support evidence-based decisions.

Companies that embrace AI-resilient interview design will be best positioned to hire candidates who truly think, decide, and perform, which in turn supports fairness, trust, and long-term success.