
Learn why interview integrity matters today. Explore how AI, rehearsed answers, and remote hiring affect quality and costs, and how to design interviews that reveal real candidate ability.

Abhishek Kaushik

Jan 15, 2026

Interviews are supposed to show who can actually do the job. But that assumption is breaking down fast. Today’s remote interviews and digital tools make it far easier for candidates to deliver polished answers that don’t reflect real ability.

This matters because the cost of bad hires is large and measurable. A bad hire can cost a company up to 30% of that employee’s first‑year salary in lost productivity, retraining, and turnover costs.

Employers increasingly suspect candidate deception: 59% of hiring managers have suspected AI‑driven misrepresentation during interviews, and only 19% feel confident their processes can reliably catch fraud.

The result?

Companies spend more time and money hiring, but many feel less confident in their decisions. Interview integrity is no longer a philosophical concern; it is a measurable business risk. To hire well in 2026, organizations must rethink what they evaluate and how they measure it.

This guide explains why interview integrity is eroding, what that means for outcomes, and how teams can restore confidence without hurting candidate experience.

The Breakdown of the Interview as a Trust Signal

Interviews used to work because candidates had to think on their own. They explained decisions, solved problems live, and responded without outside help. This made interviews a practical way to assess skill and readiness.

That is no longer the case.

Today, interview performance is often shaped by AI assistance, shared prompts, and rehearsed answers. Many candidates deliver polished responses that look strong but do not reflect real understanding or ability.

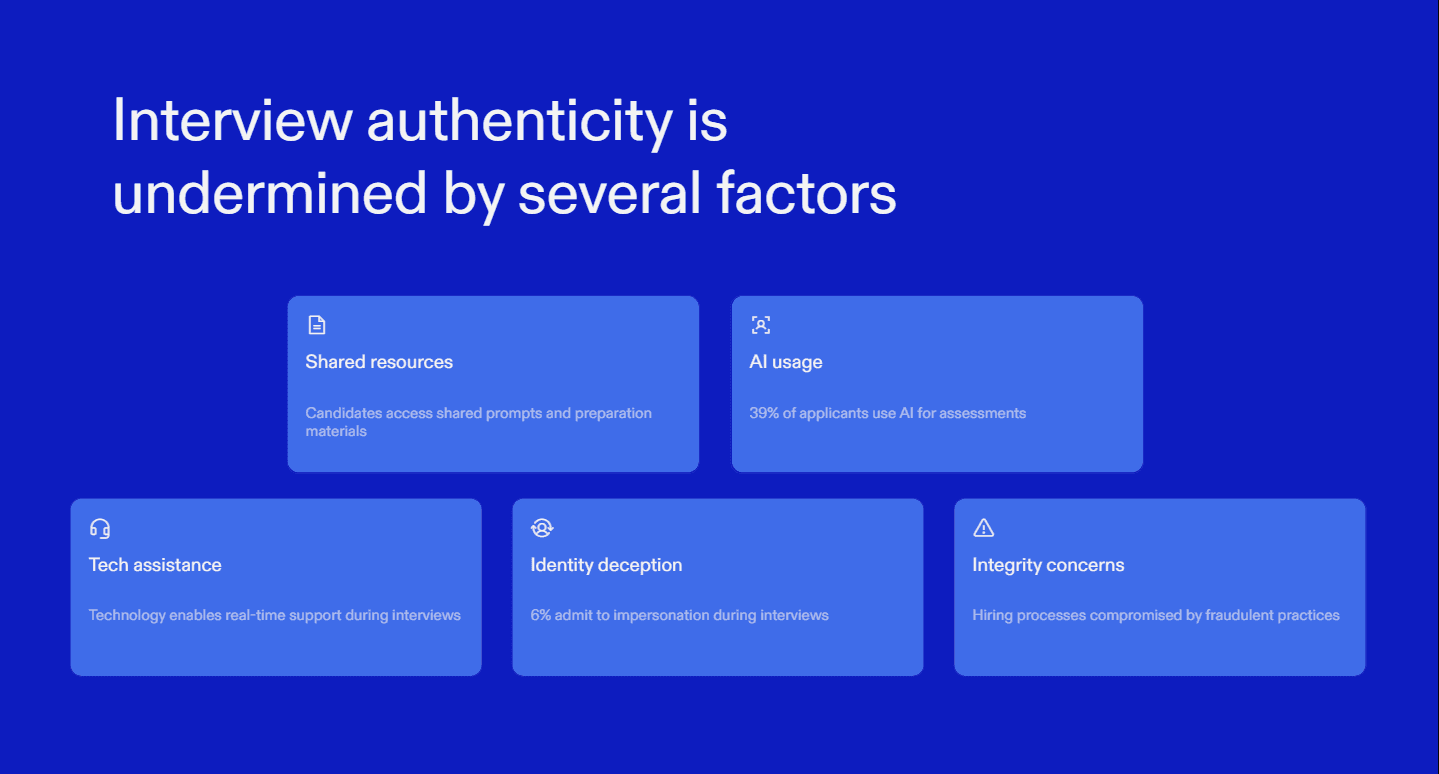

In a Gartner survey, 39% of applicants said they used AI to generate parts of their application or assessment, and 6% admitted engaging in interview fraud such as impersonation or proxy interviews.

This is why good answers no longer equal genuine capability:

AI-generated explanations can sound correct without deep knowledge

Rehearsed responses hide how candidates actually think

Live assistance masks problem-solving gaps

Predictable questions are easy to prepare for and game

The gap shows up after hiring:

Strong interview performers struggle with real work

New hires need constant guidance

Managers lose confidence in interview outcomes

The issue is not just cheating. Interviews have lost their value as a trust signal. Before adding more controls or tools, hiring teams need to accept that traditional interviews now measure presentation more than real capability.

Integrity Failures Are a Business Risk, Not an Ethics Issue

Interview integrity is often treated as a question of ethics or candidate behavior. In reality, it is a measurable business risk. When interviews fail to assess real ability, the cost shows up clearly in hiring metrics and team performance.

Research shows hiring mistakes are common and costly:

74% of employers admit they’ve hired the wrong person, and a bad hire can cost about $14,900 on average in lost productivity, training, and turnover costs.

A bad hire can cost a business up to 30% of that employee’s first‑year salary when you include recruitment, onboarding, and lost productivity.

In many companies, a single mis‑hire results in lost productivity, training and re‑hiring time, and morale issues that ripple across teams.
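To make the salary-based figure concrete, here is a rough back-of-the-envelope sketch in Python. The 30% ceiling is the estimate cited above; the function name and the $80,000 salary are illustrative assumptions, not data from any study.

```python
def bad_hire_cost_ceiling(first_year_salary: float, cost_fraction: float = 0.30) -> float:
    """Upper-bound cost of one bad hire, as a fraction of first-year salary.

    cost_fraction defaults to the "up to 30%" estimate quoted above.
    """
    if first_year_salary < 0:
        raise ValueError("first_year_salary must be non-negative")
    if not 0.0 <= cost_fraction <= 1.0:
        raise ValueError("cost_fraction must be between 0 and 1")
    return first_year_salary * cost_fraction


# Hypothetical salary, chosen only for illustration.
example_salary = 80_000
print(f"Estimated ceiling: ${bad_hire_cost_ceiling(example_salary):,.0f}")
```

Under these assumptions, one mis-hire at that salary could cost roughly $24,000 before anyone notices the interview signal was wrong.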

Interview fraud and assisted performance directly affect the business in measurable ways:

Mis‑hires increase hiring costs through repeated recruiting, onboarding, and training

Re‑hiring cycles slow hiring velocity, keeping roles open longer and delaying project delivery

Productivity drops when new hires cannot operate independently

Managers spend more time correcting performance instead of building and shipping work

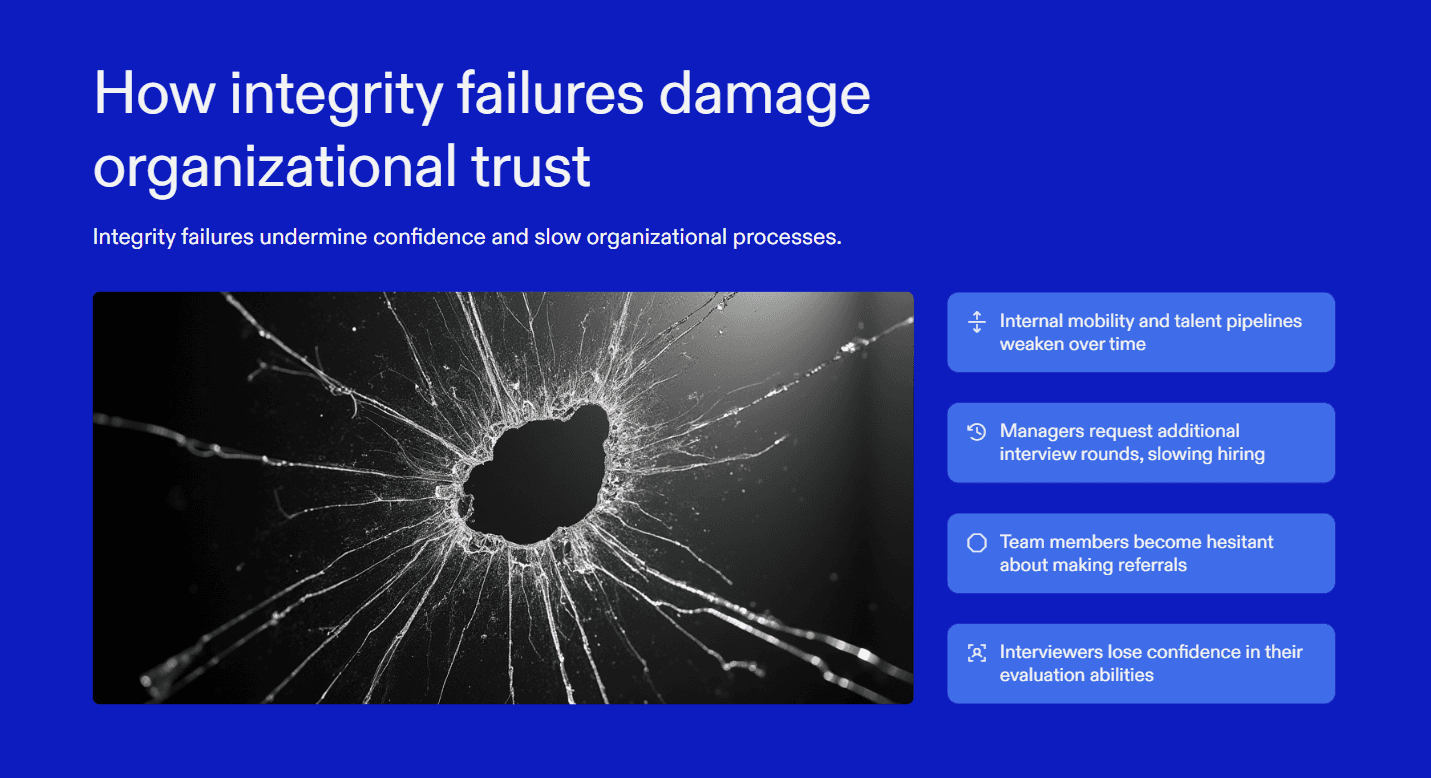

The impact does not stop with a mis‑hire. Integrity failures damage trust across the organization:

Interviewers lose confidence in their evaluations, leading to longer and more redundant processes

Managers push for additional interview rounds, slowing time to hire further

Teams become cautious about referrals and internal mobility, weakening talent pipelines

These issues scale faster in remote and high-volume hiring. Remote interviews reduce visibility into candidate behavior, while volume hiring amplifies small integrity gaps into large operational problems. A few weak signals per hire can turn into dozens of costly mistakes across a quarter.
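The way small gaps compound at volume is simple multiplication. Below is a minimal sketch, assuming a hypothetical hiring volume and mis-hire rate. Neither number comes from the research cited in this article; only the $14,900 average cost does.

```python
AVERAGE_BAD_HIRE_COST = 14_900  # average figure quoted earlier in this article


def quarterly_mis_hire_exposure(
    hires_per_quarter: int,
    mis_hire_rate: float,
    cost_per_mis_hire: float = AVERAGE_BAD_HIRE_COST,
) -> tuple[float, float]:
    """Return (expected mis-hires, expected cost) for one quarter."""
    if hires_per_quarter < 0:
        raise ValueError("hires_per_quarter must be non-negative")
    if not 0.0 <= mis_hire_rate <= 1.0:
        raise ValueError("mis_hire_rate must be between 0 and 1")
    expected_mis_hires = hires_per_quarter * mis_hire_rate
    return expected_mis_hires, expected_mis_hires * cost_per_mis_hire


# Hypothetical inputs: 300 hires in a quarter, 1 in 10 turning out to be a mis-hire.
count, cost = quarterly_mis_hire_exposure(300, 0.10)
print(f"~{count:.0f} expected mis-hires, ~${cost:,.0f} expected cost")
```

With these assumed inputs, the estimate is about 30 mis-hires and roughly $447,000 in a single quarter. The point is the shape of the math, not the specific numbers: at volume, a modest per-hire error rate becomes a large line item.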

This is why interview integrity should not be framed as a moral debate. It is a measurable business problem tied to cost, speed, and performance. Treating it as such is the first step toward fixing it.

Where Traditional Interview Design Fails Against Modern Cheating

Most interview formats were built when interviews were high-friction by default. Candidates had limited ability to prepare for exact questions, and real-time assistance was rare. Today, hiring teams are still using the same formats, even though the environment around interviews has completely changed.

Below is where traditional design breaks in real-world hiring scenarios.

1. Structured interviews are optimized for repeatability, not authenticity

Structured interviews help reduce interviewer bias, but they also create predictable patterns. In large companies and high-volume hiring, the same question banks are reused across teams and roles.

Questions like “How would you design a scalable API?” or “Walk me through your approach to debugging” appear across companies and interview prep sites

Candidates learn what “good” answers sound like rather than developing their own reasoning

AI performs especially well when questions expect structured, step-by-step responses

In practice, interview success becomes a function of familiarity, not competence.

2. Behavioral questions test recall and polish, not real ownership

Behavioral interviews assume candidates will describe real experiences honestly. In reality, these questions are often answered with rehearsed narratives.

In many cases, those narratives are partially scripted or AI-polished to sound more impressive than the underlying experience.

Prompts like “Tell me about a time you led a difficult project” are practiced extensively

Candidates combine real experiences with generic phrasing to sound credible

Interviewers rarely have enough context to verify depth or personal contribution

This leads to hires who communicate confidently but struggle when faced with responsibility or accountability on the job.

3. Case studies and coding rounds reward answers, not judgment

Case interviews and coding exercises were meant to reveal problem-solving ability. Today, they often measure output quality instead.

AI can propose frameworks, tradeoffs, and edge cases instantly

Candidates may reach correct solutions without understanding constraints or implications

When asked follow-ups like “What would you change if traffic doubled?”, gaps quickly appear

Teams often discover too late that the candidate struggles with decision-making once variables change.

4. More interview rounds create false confidence

When hiring teams sense interviews are failing, they respond by adding more steps.

Extra rounds increase time-to-hire and candidate drop-off

Well-prepared candidates continue to succeed across rounds

Interviewers feel reassured by volume, even though each round repeats the same weaknesses

The process becomes longer, not better. That’s part of why many teams are now adding interview integrity tools instead of just more rounds.

Designing Interviews That Reward Authentic Thinking Over Perfect Answers

Cheating becomes effective when interviews reward clean answers, confident delivery, and speed. The fix is not stricter rules. It is redesigning interviews so external help adds little value. When interviews focus on how candidates think, reason, and adapt, assisted performance breaks down naturally.

Below is a practical framework used by high-performing hiring teams to restore real signal.

1. Shift evaluation from answers to decision-making process

In real jobs, there are no perfect answers. What matters is how decisions are made with incomplete information.

Instead of asking:

“What is the best approach to solve this?”

Ask:

“What assumptions are you making?”

“What would make this approach fail?”

“What would you test first if this went to production?”

Strong candidates expose their thinking step by step. Assisted answers often sound complete but struggle to justify tradeoffs or explain second-order effects.

2. Interrupt rehearsed responses with live reasoning checks

Prepared answers follow a script. Real thinking does not.

Practical techniques interviewers use:

Pause the candidate mid-explanation and ask them to re-explain the same idea in simpler terms

Change a key constraint halfway through, such as budget, scale, or timeline

Ask “What would you do differently if this decision had failed last quarter?”

Candidates who truly understand the problem adapt quickly. Those relying on memorized or assisted responses often hesitate, repeat themselves, or give generic statements.

3. Use context switching to test real experience

AI performs best within a single, well-defined context. Real work rarely stays there.

Examples:

After a system design discussion, ask how the same design would change for a smaller team

After a coding solution, ask how they would explain it to a non-technical stakeholder

After a leadership scenario, ask how their approach changes with a junior vs senior team

This reveals whether knowledge is transferable or surface-level.

4. Replace “got the answer” with “handled ambiguity well”

Many interviews reward closure. Real work rewards judgment under uncertainty.

Interviewers should look for:

Willingness to ask clarifying questions

Comfort saying “I don’t know yet” and outlining next steps

Ability to prioritize when tradeoffs are unclear

Candidates with real experience lean into ambiguity. Assisted responses often rush toward confident but shallow conclusions.

5. Use monitoring and detection as signals, not verdicts

Monitoring tools are most effective when they inform interviewer behavior.

Unusual pauses or gaze shifts can prompt deeper follow-ups

Rapid, highly polished answers can trigger probing into reasoning

Environmental signals can guide where to apply pressure

Tools like Sherlock AI are designed to work in this supportive role. Instead of blocking candidates or making hiring decisions, they surface behavioral and environmental signals in real time. This allows interviewers to validate authenticity through smarter questioning, not assumptions.
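One way to picture "signals, not verdicts" is a lookup that turns an observed signal into a suggested follow-up question and never into a pass or fail. The sketch below is purely illustrative: the signal names and the function are hypothetical and do not represent Sherlock AI's API or any other tool's. The follow-up prompts are borrowed from the techniques described earlier in this guide.

```python
# Hypothetical signal names; not taken from Sherlock AI or any real tool's API.
FOLLOW_UPS = {
    "unusual_pause": "Ask the candidate to re-explain the same idea in simpler terms.",
    "gaze_shift": "Change a key constraint, such as budget, scale, or timeline.",
    "rapid_polished_answer": "Ask: 'What assumptions are you making?'",
}
DEFAULT_FOLLOW_UP = "Ask: 'What would make this approach fail?'"


def suggest_follow_ups(signals: list[str]) -> list[str]:
    """Map observed signals to interviewer prompts.

    Returns only suggestions for the interviewer; it never produces
    a pass/fail verdict about the candidate.
    """
    suggestions: list[str] = []
    for signal in signals:
        prompt = FOLLOW_UPS.get(signal, DEFAULT_FOLLOW_UP)
        if prompt not in suggestions:  # avoid repeating the same prompt
            suggestions.append(prompt)
    return suggestions


for prompt in suggest_follow_ups(["unusual_pause", "rapid_polished_answer"]):
    print("-", prompt)
```

The design choice that matters is the return type: a list of questions keeps the judgment with the interviewer, which is the supportive role described above.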


Conclusion

Interview integrity is no longer about catching bad behavior. It is about restoring trust in hiring decisions. As interviews become easier to game, relying on polished answers and familiar formats leads to weaker signal and higher risk.

The path forward is better design. Interviews that test real thinking, adaptability, and decision-making naturally reduce the impact of assistance and rehearsed responses.

When supported by thoughtful monitoring, not rigid enforcement, hiring teams can make confident decisions again. Integrity stops being a concern to manage and becomes a quality the interview itself reveals.