Learn how to detect and prevent Interview Coder use in interviews, so hiring stays fair and evaluates candidates' real skills.

Abhishek Kaushik

Jan 7, 2026

Hiring teams rely on technical interviews to understand how a candidate approaches problems. The goal is not perfect code, but clear thinking, logical trade-offs, and the ability to work through uncertainty. That signal is becoming harder to trust.

Live coding tools like Interview Coder can generate solutions, suggest fixes, and guide candidates step by step during an interview. The interviewer sees a confident candidate writing solid code but cannot see the external help driving those decisions.

The real risk is not cheating alone. It is mis-hiring. According to the U.S. Department of Labor, a bad hire can cost up to 30% of that employee’s first-year salary. Some human resources agencies estimate the cost to be higher, ranging from $240,000 to $850,000 per employee.

To keep interviews fair and reduce mis-hires, companies need to understand how Interview Coder is used and why traditional interviews fail to catch it. More importantly, they need practical ways to detect and prevent AI-assisted coding, ensuring interviews once again measure real ability.

What Is Interview Coder?

Interview Coder is an AI-powered tool that helps candidates during live technical interviews by generating code and explanations in real time. It runs alongside the coding session, so users get on-the-fly suggestions and support while they solve problems.

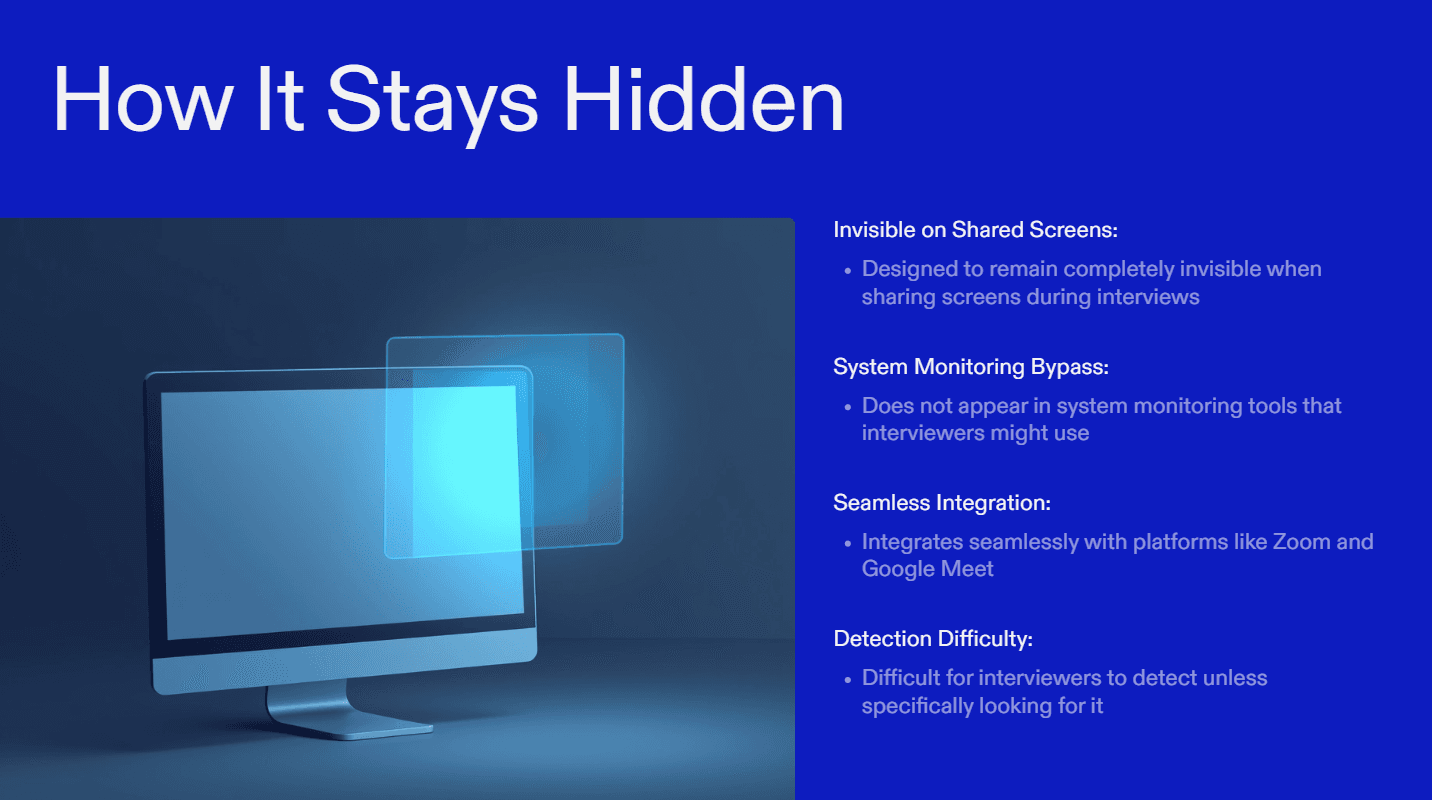

Unlike regular interview preparation tools, Interview Coder is meant to be used during the interview itself. It claims to stay invisible on screen share and in system monitors so that interviewers do not see it running.

The tool can provide:

Real-time coding help - instant code suggestions for algorithm and implementation tasks.

Debugging support - assistance in finding and fixing errors while coding.

Contextual explanations - reasoning or notes that help shape answers to questions.

Audio and text input support - in newer versions, it can use interviewer audio to understand and respond to questions.

Because it runs alongside standard interview tools such as Zoom and Google Meet, interviewers are unlikely to notice it unless they know exactly what to look for.

Why Interview Coder Breaks Traditional Coding Interviews

Traditional coding interviews are built on one basic idea: the candidate is solving the problem on their own. Interview Coder breaks that idea.

When a candidate uses Interview Coder, the interview no longer measures how they think. It measures how well they can read, type, and repeat AI-generated output. The interviewer sees working code, but the reasoning behind it may not belong to the candidate. This is the same tension teams face when they move toward open-book, AI-aware technical interviews.

Here’s how this causes the interview to fail:

Problem Decomposition Is No Longer Observed

Interviewers expect candidates to clarify requirements, identify constraints, and break problems into steps. Interview Coder performs this decomposition automatically. The interviewer sees a clean solution path, but never observes how the candidate arrived there.

Algorithm and Data Structure Choices Become Unreliable Signals

Selecting the right algorithm or data structure is a key indicator of skill. Interview Coder can consistently suggest optimal or near-optimal approaches. Candidates can implement these choices without understanding time complexity, space trade-offs, or why alternatives were rejected.

Follow-Ups Stop Testing Real-Time Thinking

Interviewers rely on follow-ups like changing constraints, adding edge cases, or modifying requirements. Interview Coder adapts instantly, allowing candidates to respond without doing the underlying reasoning. Adaptability appears high, even when it is not.

Implementation Quality Is Artificially Inflated

Clean syntax, correct edge-case handling, and efficient code are often treated as proof of competence. Interview Coder can generate production-quality solutions, masking gaps in debugging ability, error handling, and system thinking.

Timing and Fluency Signals Are Corrupted

Interviewers often infer confidence and competence from pacing and speed. With Interview Coder, response timing reflects AI latency, not human thought. This makes traditional performance cues meaningless.

Without detection or prevention, hiring teams risk selecting candidates who perform well in interviews but struggle once they have to work without live AI help, especially as AI interview fraud tactics become more sophisticated.

Detection Signals Specific to Live Coding Tools

Live coding assistance tools like Interview Coder do not just help candidates write code. They introduce a second, invisible problem solver into the interview. This creates observable distortions in how solutions are formed, explained, and adapted.

The key to detection is not spotting a single mistake. It is recognizing breaks in continuity between thinking, coding, and explanation.

1. Broken Problem-Solving Narrative

Strong candidates build solutions incrementally. They explore, discard ideas, and refine their approach. Live coding tools collapse this process.

What this looks like:

The candidate skips problem exploration and moves straight to an advanced approach

No discussion of alternative solutions or trade-offs

The solution appears fully formed early in the interview

Why this matters: Real engineers reveal uncertainty before clarity. AI-assisted candidates present certainty without a visible path to it.

2. Disconnected Algorithm Justification

Interviewers often ask why a particular algorithm or data structure was chosen. With live AI assistance, these choices are externally generated.

Signals to watch for:

Vague explanations like “this is the optimal approach”

Inability to compare with simpler alternatives

Memorized complexity statements without real reasoning

Why this matters: Understanding shows up in comparisons. AI-assisted answers tend to be declarative, not analytical.
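As an illustration of what an analytical answer sounds like, consider the classic two-sum problem (find two indices whose values add up to a target). The functions below are a hypothetical example, not taken from any specific interview. A candidate who owns the hash-map solution can usually explain what it buys over the brute-force version and what it costs.

```python
from typing import Optional


def two_sum_brute_force(nums: list[int], target: int) -> Optional[tuple[int, int]]:
    """Check every pair: O(n^2) time, O(1) extra space."""
    for i in range(len(nums)):
        for j in range(i + 1, len(nums)):
            if nums[i] + nums[j] == target:
                return (i, j)
    return None


def two_sum_hash_map(nums: list[int], target: int) -> Optional[tuple[int, int]]:
    """Single pass with a dict of value -> index: O(n) time, O(n) extra space."""
    seen: dict[int, int] = {}
    for j, value in enumerate(nums):
        complement = target - value
        if complement in seen:
            return (seen[complement], j)
        seen[value] = j
    return None


# On this input both versions return the same pair; the trade-off is time versus memory.
print(two_sum_brute_force([2, 7, 11, 15], 9))  # (0, 1)
print(two_sum_hash_map([2, 7, 11, 15], 9))     # (0, 1)
```

A useful follow-up: when several pairs qualify, the two versions can return different answers (for `[1, 2, 3, 4]` with target 5, the brute-force loop returns `(0, 3)` and the hash map returns `(1, 2)`). Explaining why is exactly the kind of comparison this signal looks for.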

3. Latency Patterns That Do Not Match Thinking

Human reasoning produces uneven pacing. Live coding tools introduce artificial timing.

Common patterns:

Long silent pauses followed by rapid, confident implementation

Delays specifically after follow-up questions or constraint changes

Code appearing faster than verbal reasoning

Why this matters: Thinking happens before typing. When typing consistently leads thinking, something is off.
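If your interview platform records editor activity, the "long pause, then rapid implementation" pattern can be checked mechanically. The sketch below assumes a hypothetical log of `EditEvent` records (timestamp in seconds, characters inserted), and its thresholds are placeholders to tune, not established values. A hit is a prompt for a follow-up question, not evidence on its own: people also pause to think and then type quickly.

```python
from dataclasses import dataclass


@dataclass
class EditEvent:
    """Hypothetical editor event: when it happened and how many characters it added."""
    t: float    # seconds since the interview started
    chars: int  # characters inserted by this event (assumed: a paste counts as one event)


def pause_then_burst(
    events: list[EditEvent],
    min_pause: float = 20.0,     # assumed length of a "long silent pause", in seconds
    burst_window: float = 10.0,  # assumed window after the pause in which typing is measured
    min_burst_chars: int = 150,  # assumed character count that counts as a burst
) -> list[tuple[float, float, int]]:
    """Return (pause_start, pause_end, chars_in_burst) for each pause followed by a burst."""
    events = sorted(events, key=lambda e: e.t)
    flagged = []
    for prev, curr in zip(events, events[1:]):
        if curr.t - prev.t < min_pause:
            continue
        # Sum the characters inserted in the window that starts when activity resumes.
        burst = sum(e.chars for e in events if curr.t <= e.t < curr.t + burst_window)
        if burst >= min_burst_chars:
            flagged.append((prev.t, curr.t, burst))
    return flagged


log = [EditEvent(0, 5), EditEvent(3, 8), EditEvent(40, 90), EditEvent(44, 80), EditEvent(60, 4)]
print(pause_then_burst(log))  # [(3, 40, 170)]
```

What matters is repetition, for example hits that cluster right after follow-up questions or constraint changes, rather than any single flagged pause.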

4. Shallow Debugging Behavior

Debugging forces candidates to simulate code execution mentally. This is hard to fake.

Red flags:

Difficulty tracing code with simple inputs

Reliance on rewriting instead of inspecting logic

Avoidance of stepping through edge cases

Why this matters: If a candidate did not construct the logic, they cannot easily debug it.
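One low-friction way to run this check is to ask the candidate to predict outputs for a few simple inputs, including edge cases, before anything is executed, and then compare. In the sketch below, `compress_runs` stands in for the candidate's own solution and `check_predictions` is a hypothetical helper; both are illustrative.

```python
from typing import Any, Callable


def compress_runs(s: str) -> str:
    """Example candidate solution: run-length encode a string, e.g. 'aaab' -> 'a3b1'."""
    if not s:
        return ""
    out = []
    run_char, run_len = s[0], 1
    for ch in s[1:]:
        if ch == run_char:
            run_len += 1
        else:
            out.append(f"{run_char}{run_len}")
            run_char, run_len = ch, 1
    out.append(f"{run_char}{run_len}")
    return "".join(out)


def check_predictions(func: Callable[[Any], Any], predictions: dict[Any, Any]) -> list[tuple[Any, Any, Any]]:
    """Run func on each probe input and return (input, predicted, actual) for every miss."""
    misses = []
    for probe, predicted in predictions.items():
        actual = func(probe)
        if actual != predicted:
            misses.append((probe, predicted, actual))
    return misses


# Predictions the candidate states out loud before the code is run.
candidate_predictions = {
    "": "",
    "a": "a1",
    "aaab": "a3b1",
    "abba": "a1b1a1",  # wrong: the real output is "a1b2a1"
}
print(check_predictions(compress_runs, candidate_predictions))
# [('abba', 'a1b1a1', 'a1b2a1')]
```

A miss like this is a talking point, not a verdict. Asking the candidate to step through `'abba'` line by line is a natural next step.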

5. Fragility Under Interviewer Pressure

Live coding tools handle static problems well. They struggle with interactive probing.

Watch for:

Performance drops when asked “why” instead of “how”

Confusion when requirements are slightly reframed

Overcorrection when minor changes are introduced

Why this matters: Real understanding adapts smoothly. Assisted reasoning often resets.

6. Over-Polished Code With No Personal Signature

AI-generated code has a certain cleanliness that lacks personal style.

Patterns include:

Consistent formatting and naming regardless of candidate background

Advanced constructs used without explanation

No personal shortcuts, comments, or heuristics

Why this matters: Engineers develop habits. AI does not.

7. Reasoning Drift Across the Interview

As interviews progress, genuine candidates build a coherent mental model. Assisted candidates often drift.

Signs of drift:

Inconsistent terminology for the same concept

Changing explanations for earlier decisions

Difficulty referencing earlier parts of the solution

Why this matters: Ownership creates continuity. AI assistance breaks it.

The Core Insight for Hiring Teams

Live coding tools do not make candidates perfect. They make them inconsistent.

The inconsistency shows up between:

Code and explanation

Speed and understanding

Confidence and adaptability

Teams that only score correctness will miss these signals. Teams that evaluate reasoning continuity will not.

This is the difference between detecting live AI assistance and being fooled by it.

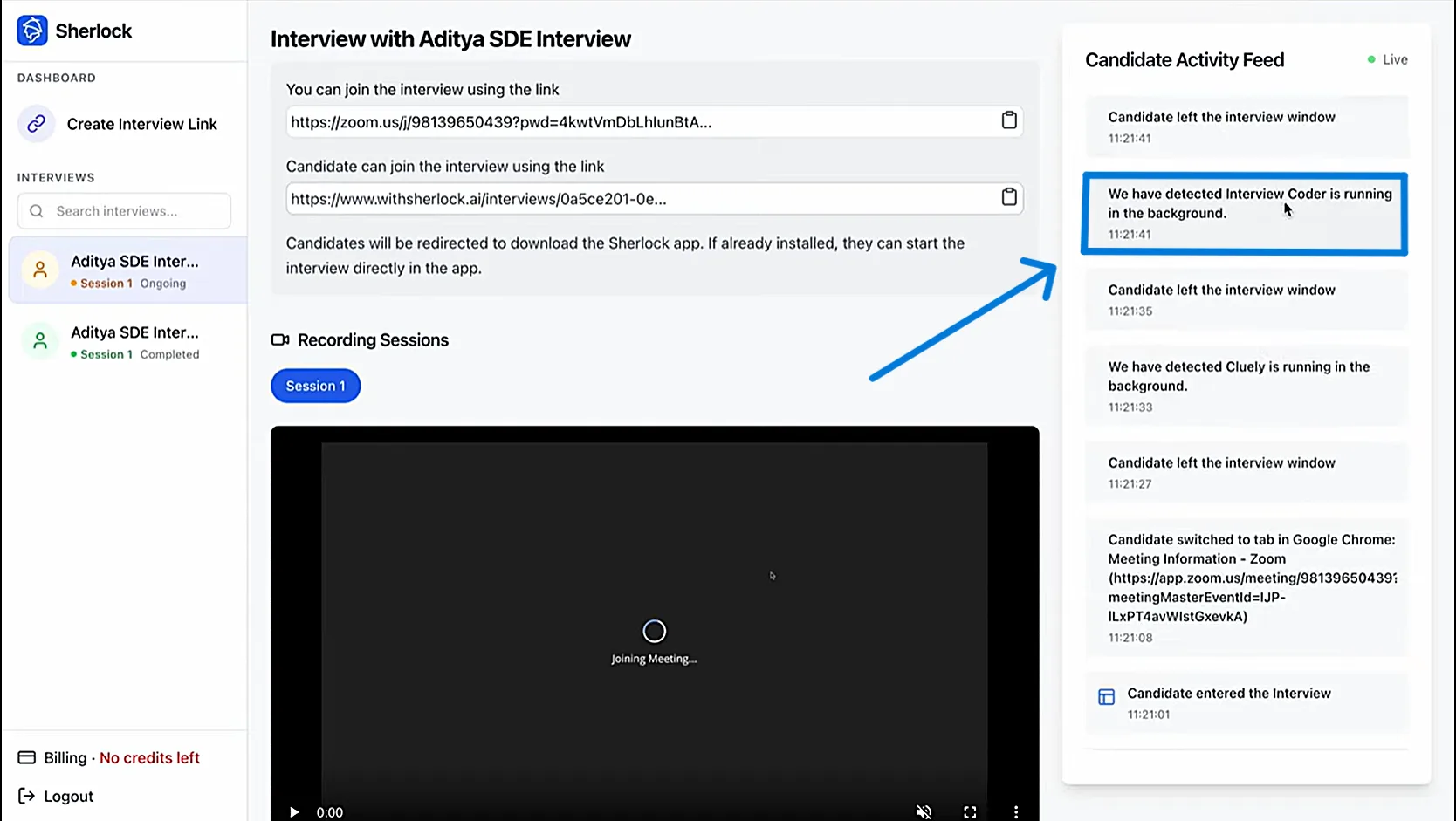

How Sherlock AI Detects Interview Coder Cheating

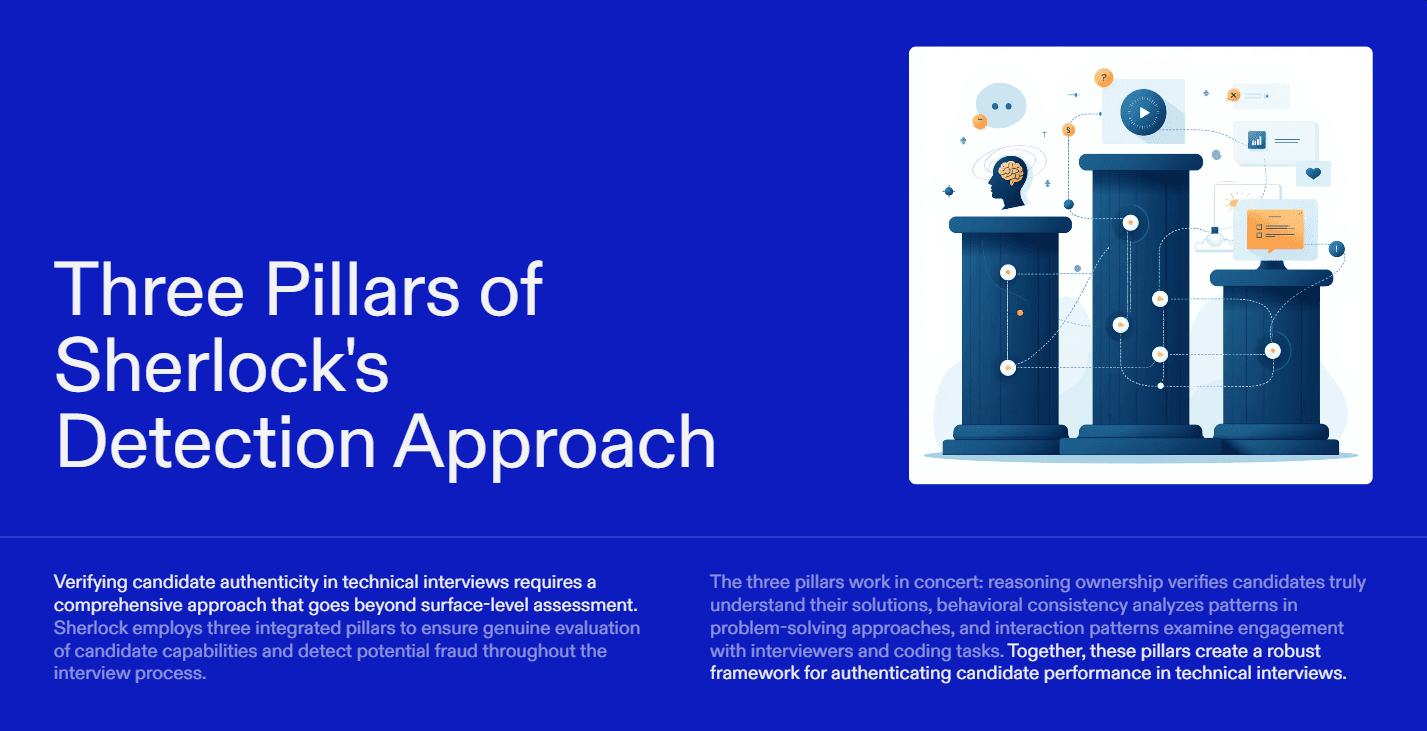

Interview Coder works by inserting an invisible, external problem solver into a live coding interview. Sherlock AI detects this by identifying the gaps between how humans naturally think, code, and explain decisions and how AI-assisted behavior actually appears.

Sherlock AI does not rely on single red flags or superficial checks. It looks for consistent behavioral and reasoning anomalies that emerge when live coding tools are in use.

1. Reasoning Continuity Analysis

Sherlock AI tracks whether a candidate’s reasoning remains consistent throughout the interview.

What it analyzes:

How the candidate introduces a solution

How they justify algorithm and data structure choices

How explanations evolve during follow-ups

Why this works: AI can generate correct answers, but a candidate relaying those answers struggles to maintain a consistent, personal line of reasoning under dynamic questioning.

2. Latency and Interaction Pattern Detection

Sherlock AI observes timing patterns that indicate off-screen assistance.

Signals include:

Delayed responses after problem changes

Pauses that precede large jumps in code quality or completeness

Mismatch between verbal thinking and coding speed

Why this works: Human hesitation correlates with reasoning effort. AI-assisted hesitation correlates with response generation.

3. Coding-to-Explanation Alignment Checks

Sherlock AI evaluates whether the candidate can accurately explain the code they write.

It looks for:

Shallow or circular explanations

Difficulty walking through execution paths

Inability to predict behavior for specific inputs

Why this works: You can type AI-generated code. You cannot easily explain reasoning you never performed.

4. Adaptability Stress Signals

Sherlock AI monitors how candidates respond when the interview flow changes.

It analyzes:

Reactions to modified constraints

Performance during debugging requests

Behavior when asked to simplify or refactor

Why this works: Real engineers adapt locally. AI-assisted candidates often reset globally.
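The difference between adapting locally and resetting globally can be approximated by how much of the previous code survives a small constraint change. This sketch uses Python's standard `difflib.SequenceMatcher` to count unchanged lines. The `change_locality` name and the example code are hypothetical, and this is not a description of how Sherlock AI measures adaptability.

```python
import difflib


def change_locality(before: str, after: str) -> float:
    """Fraction of the original lines that survive unchanged into the revised code.

    Values near 1.0 suggest a local edit; values near 0.0 suggest a rewrite.
    """
    old_lines = before.splitlines()
    new_lines = after.splitlines()
    if not old_lines:
        return 1.0
    matcher = difflib.SequenceMatcher(a=old_lines, b=new_lines, autojunk=False)
    kept = sum(block.size for block in matcher.get_matching_blocks())
    return kept / len(old_lines)


original = """def total(xs):
    result = 0
    for x in xs:
        result += x
    return result"""

# Constraint change: "ignore negative numbers".
local_edit = """def total(xs):
    result = 0
    for x in xs:
        if x >= 0:
            result += x
    return result"""

rewrite = """def total(xs):
    return sum(x for x in xs if x >= 0)"""

print(change_locality(original, local_edit))  # 0.8
print(change_locality(original, rewrite))     # 0.2
```

A full rewrite is sometimes the right call, so a low score should lead to a question ("why start over?") rather than a conclusion. The signal worth noting is a run of global rewrites after minor changes.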

5. Cross-Interview Behavioral Consistency

Sherlock AI compares behavior across stages of the hiring process.

It checks for:

Consistency between assessments and live interviews

Stability in coding style, explanation depth, and pacing

Sudden performance spikes during monitored interviews

Why this works: Cheating introduces variance. Real skill is consistent.
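A simple way to spot a performance spike across stages is to compare each stage's score with the median of the other stages. The stage names, the 0-100 scale, and the 25-point margin below are assumptions for illustration. The median of the other stages is used because, with only a few stages, it is less sensitive to one unusually weak stage than a mean would be. This is not Sherlock AI's method.

```python
from statistics import median


def flag_score_spikes(stage_scores: dict[str, float], margin: float = 25.0) -> list[str]:
    """Return stages whose score beats the median of the other stages by more than margin.

    Scores are assumed to share a common 0-100 scale; margin is an assumed threshold.
    """
    flagged = []
    for stage, score in stage_scores.items():
        others = [s for name, s in stage_scores.items() if name != stage]
        if others and score - median(others) > margin:
            flagged.append(stage)
    return flagged


scores = {"take_home": 58, "phone_screen": 62, "live_coding": 95, "system_design": 55}
print(flag_score_spikes(scores))  # ['live_coding']
```

Candidates do improve between stages, and one weak stage can reflect a bad day, so a flag here means "look closer", not "cheating".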

6. Pattern-Based Detection, Not Accusations

Sherlock AI does not label a candidate based on a single signal. It aggregates multiple indicators into a confidence-based assessment.

This allows teams to:

Adjust follow-up questions in real time

Flag interviews for deeper review

Defend hiring decisions with objective evidence

The same signals also feed structured interview notes that keep panels aligned and make decisions easier to justify later.

Why this works: Live coding tools can evade simple rules. They cannot evade sustained pattern analysis.
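To illustrate what aggregating multiple indicators into a confidence-based assessment can look like, here is a minimal logistic combination of normalized signals. The signal names, weights, bias, and thresholds are invented placeholders, not fitted to data and not Sherlock AI's model. The point is the structure: with these weights, no single signal (capped at 1.0) can reach a review threshold on its own.

```python
import math

# Hypothetical signals, each normalized to 0.0 (no concern) .. 1.0 (strong concern).
# Weights and bias are illustrative placeholders, not fitted to any data.
WEIGHTS = {
    "pause_then_burst": 1.2,
    "prediction_misses": 1.5,
    "global_rewrites": 1.0,
    "stage_score_spike": 0.8,
}
BIAS = -3.0


def assistance_confidence(signals: dict[str, float]) -> float:
    """Combine weighted signals through a logistic function into a 0..1 score."""
    z = BIAS + sum(WEIGHTS[name] * value for name, value in signals.items())
    return 1.0 / (1.0 + math.exp(-z))


def recommended_action(confidence: float) -> str:
    """Map a score to the review actions listed above (thresholds are assumptions)."""
    if confidence >= 0.7:
        return "flag for deeper review"
    if confidence >= 0.4:
        return "adjust follow-up questions"
    return "no action"


example = {"pause_then_burst": 0.9, "prediction_misses": 0.8, "global_rewrites": 0.7, "stage_score_spike": 0.5}
print(recommended_action(assistance_confidence(example)))  # adjust follow-up questions
```

In practice, the weights would need to be calibrated on labeled interviews, and the output should route an interview to human review rather than decide it.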

The Key Advantage

Interview Coder tries to stay invisible. Sherlock AI looks beyond visibility.

By focusing on reasoning ownership, behavioral consistency, and interaction patterns, Sherlock AI enables hiring teams to detect Interview Coder cheating reliably, without guessing or disrupting the interview flow.

Protecting Interview Integrity in a Live-AI World

Live coding tools like Interview Coder are already reshaping technical interviews. When external reasoning enters the interview unnoticed, correct code stops being a reliable signal of real skill.

The solution is not stricter rules or more complex questions. It is focusing on signals that cannot be outsourced: reasoning ownership, consistency across interactions, and the ability to adapt under pressure.

Sherlock AI surfaces these signals by detecting breaks in behavior, timing, and explanation that live coding tools introduce. This allows teams to make fair, defensible hiring decisions without disrupting the interview flow.

As live AI becomes more common, interview integrity becomes a competitive advantage. Teams that invest in detection now will continue to hire engineers who can actually solve problems, not just present solutions.