
AI interview fraud is exploding in 2026. From deepfakes to proxy candidates, see the real risks for recruiters and how to safeguard your hiring process.

Abhishek Kaushik

Nov 17, 2025

According to the Federal Trade Commission (FTC), job scams are now among the fastest-growing fraud typologies, with losses skyrocketing from $90 million in 2020 to over $501 million in 2024.

In 2026, hiring managers and security experts sounded the alarm on a new kind of hiring fraud: candidates using advanced AI to cheat in job interviews. What began as a trickle of isolated incidents has become a flood of deepfake interviews, proxy interviews, and AI-assisted cheating that is undermining the integrity of remote hiring.

A CBS News report revealed that fully 50% of businesses had encountered AI-driven deepfake fraud in some form. By mid-2025, the Wall Street Journal reported that even corporate giants like Google and McKinsey were responding by reintroducing mandatory in-person interviews to counter the surge in AI interview fraud.

The message is clear: Interview fraud, turbocharged by AI, is now a mainstream concern.

Several converging trends made 2025 a tipping point. First, the pandemic-era normalization of Zoom and Teams interviews created fertile ground for impersonation scams.

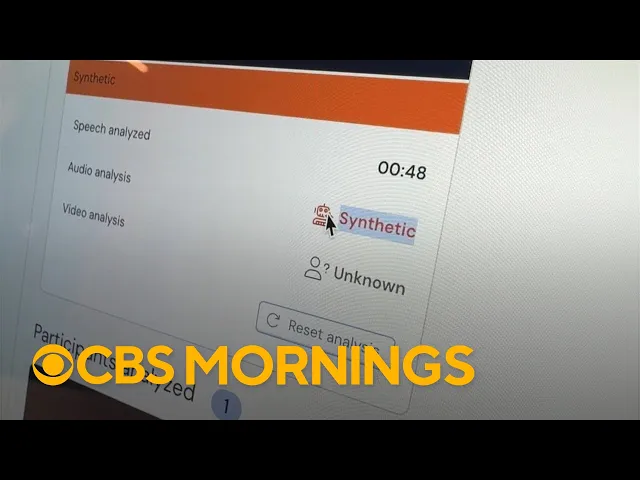

Second, the explosion of generative AI (from voice-cloning tools to ChatGPT-like assistants) handed fraudsters powerful new weapons. In the past, a brazen impostor might simply hope to pass as someone else; now they can deploy real-time video deepfakes and voice clones that are scarily convincing (see this recent CBS Mornings segment).

CBS Mornings discussing this grave problem in 2026

2026: A candidate using an AI deepfake tool to pose as someone else during an interview

Social media and underground forums are awash with stories - some real, some exaggerated - of candidates secretly using AI to land jobs.

As one viral TikTok video’s caption put it: “Interviews are NOT real anymore”.

WeCP, an AI assessment and interviewing platform, published "State of Cheating in Online Interviews" and released a dedicated resource with proof videos showing how candidates cheat.

Hiring teams are finding that what they see (and hear) in a virtual interview may not be what they get.

High Stakes for Employers

This is not just an HR headache; it’s a serious security and economic risk. Companies have unwittingly hired imposters who turned out to be cybercriminals or unqualified stand-ins - with disastrous consequences.

In one high-profile case, a North Korean hacker used a stolen identity and AI-generated visuals to get hired at a U.S. tech firm, then attempted to install malware on the company network.

Financial institutions, defense contractors, IT and BPO firms are all on alert, recognizing that a fraudulent hire in a sensitive role could lead to a breach of customer data, theft of intellectual property, or other dire outcomes. The economic fallout of hiring fraud is mounting:

According to one survey, 23% of companies reported losing over $50,000 in the past year due to bogus candidates, with 10% losing over $100,000. Reputational damage and regulatory compliance issues add further pain.

Little wonder that “interview integrity” and “AI fraud detection” have become boardroom-level priorities in 2026.

Our team at Sherlock AI spent months researching before writing this comprehensive blog. Today, we’ll explore the evolution of interview fraud from its humble beginnings to the sophisticated AI-driven schemes of today.

We'll examine current trends (with real-world examples and statistics), dissect the various forms of AI-powered interview cheating, and explain why it matters so much.

We’ll also look ahead at the next 5–10 years: the arms race of AI vs. AI as companies deploy countermeasures, the emergence of new threats like hologram or AR-based impersonations, and how laws and best practices are likely to evolve.

Finally, we’ll provide actionable best practices for founders, HR, and TA leaders to protect their organizations. This is the definitive guide to AI interview fraud in 2026 and beyond - a one-stop resource for understanding and combating this emerging menace.

Historical Context: From Proxies to Deepfakes

Old Tricks, New Tech:

Interview fraud isn’t entirely new - it’s the scale and tech that have changed. In past decades, there were instances of “proxy interviews” in which a candidate would have someone else pose as them for a phone screen or even an in-person interview.

These manual impersonation schemes were relatively rare and risky, often relying on lookalike stand-ins or simple deception. Recruiters (especially in tech) have been aware of such proxy hiring scams for years. By 2020, as video interviews became ubiquitous, reports of “fake Skype interviews” and stand-ins were already rising.

Candidates treated virtual interviews like open-book exams - keeping cheat sheets on-screen or even whispering answers fed by a friend off-camera. Some went further, lip-syncing while a more qualified person provided responses in the background.

An underground market emerged: proxy interview “freelancers” for hire, ready to impersonate applicants for a fee.

In short, the concept of interview fraud predates the AI boom, rooted in the age-old temptation to cheat one’s way into a job.

The Pandemic and Remote Work Boom:

The shift to remote hiring during COVID-19 poured fuel on the fire. With employers no longer meeting candidates face-to-face, the opportunity for impersonation and digital deception skyrocketed. By mid-2020, studies noted a 67% spike in video interviews and a parallel rise in fake candidates attempting to game the system. Employers found that video calls, once thought foolproof for verifying identity, could be exploited.

A notable scam involved candidates using covert earbuds or chat windows to get real-time coaching during interviews. Recruiters grew suspicious of perfectly fluent answers from candidates whose resumes didn’t back them up - sometimes discovering a hidden helper was feeding answers.

For example, it became known that some technical candidates would invite a subject-matter expert to sit just off camera or even control their screen remotely, turning a supposedly solo interview into a scripted performance.

Read More: Sherlock AI (Anti-Interview-Fraud) Security Overview

The Deepfake Era Dawns:

The emergence of deepfake technology around 2018–2020 (initially used to create face-swapped videos and synthetic voice clips) added a troubling new dimension. Early on, deepfakes were mostly seen in celebrity face-swaps and misinformation campaigns.

But by 2021–2022, forward-looking security experts warned that deepfakes could infiltrate hiring. Those warnings proved prescient. In June 2022, the FBI’s Internet Crime Complaint Center issued a public alert about a spike in complaints involving deepfaked video and audio being used in remote job interviews.

The FBI noted cases where an applicant's video feed didn't quite sync with the audio - lips not matching speech, or odd lapses when sneezing or coughing - clues that a synthetic video persona was in play. In these schemes, fraudsters also used stolen personal data to pass background checks, essentially creating full fake identities to apply for jobs.

The targets were often roles with access to sensitive customer information, financial data, or IT systems. In other words, deepfake interview fraud had arrived, motivated not just by individuals seeking employment under false pretenses, but by organized actors seeking to infiltrate companies.

Rising Awareness (2023–2024):

By 2023, scattered reports of AI-assisted interview cheating were turning into a flurry of headlines. Recruiters began sharing bizarre anecdotes: candidates who spoke fluent technical jargon on Zoom but couldn’t string together a coherent sentence on Day 1 of the job, or video interviews where the "person" on camera glitched oddly.

The U.S. Department of Justice unmasked a ring of North Korean IT workers who had posed as remote developers at U.S. companies, in some cases using doctored profiles and deepfake tricks, funneling their salaries back to the North Korean regime.

In India, a market with a huge IT and BPO workforce, major firms started cracking down on proxy interview fraud, handing out lifetime bans to candidates caught using stand-ins. One sensational incident in 2024 involved a man who landed a job at Infosys (an IT giant) by having a friend impersonate him during the video interview.

Within two weeks of starting, his poor on-the-job performance gave him away - he was swiftly fired and even faced criminal charges for impersonation.

News of such cases spread rapidly on professional networks and social media, making companies everywhere ask: How many of our recent hires are who they say they are?

By the start of 2026, “proxy interviews, AI cheating & deepfakes” were no longer fringe phenomena; they had the full attention of HR and security departments worldwide.

This progression, from low-tech impersonation to high-tech AI fakes, set the stage for the explosive growth of AI-driven interview fraud in 2026.

Current Trends in 2026: A Fraud Arms Race

As we hit 2026, interview fraud has evolved into a high-tech cat-and-mouse game. Here are the dominant trends defining this year’s landscape:

Real-Time Deepfake Video & Voice:

Advances in AI now allow real-time face and voice cloning during live interviews. What used to require Hollywood-level effects can now be done with off-the-shelf tools. In June 2025, security firm Pindrop demonstrated this on live TV: they “transformed a reporter’s face in real time on a Zoom call” and created a voice clone that could engage in unscripted conversation.

The market is now flooded with deepfake voice tools that let anyone clone a voice with AI

Fraudsters are using such tools to appear as someone else – for example, overlaying a stolen photo or a generated avatar onto their video feed, combined with an AI voice that matches the claimed identity. This means a scammer can sit behind a screen and have an AI-generated “digital puppet” attend the interview in their place.

By 2026, these deepfake avatars have become alarmingly convincing. They blink, smile, and nod at all the right times. Only subtle glitches (like a slight lip-sync delay or unnatural eye movements) might give them away. For audio, AI voice models trained on a few minutes of someone’s speech can mimic tone and accent eerily well.

The result: companies can no longer trust that the face and voice on a video call belong to a real, flesh-and-blood candidate.

ChatGPT as the Invisible Co-Pilot:

Another 2026 trend is candidates using AI assistants live during interviews. Generative AI models like GPT-4 have become the ultimate cheat sheet. Rather than memorizing answers, tech-savvy applicants now have AI “whispering” responses in real time. This can take many forms.

One popular method is using a speech-to-text and text-to-speech pipeline: as the interviewer asks a question on Zoom, the candidate’s computer runs a transcription, feeds it to a large language model (LLM) which generates a polished answer, and then either displays that answer for the candidate to recite or even speaks it through a discreet earpiece.

A candidate unethically used a hidden overlay to receive answers from ChatGPT during a live coding interview

Essentially, the candidate has a real-time teleprompter. In one viral TikTok example, a young woman openly showed how she used a smartphone app during a live video interview – the phone was propped up against her laptop, providing AI-generated responses she could read out loud.

Interview fraud: a candidate uses a phone running ChatGPT, placed on the laptop where it can listen to the call, while still maintaining convincing eye contact

“Well... I love the problem-solving aspect of programming,” the candidate says, reading from the phone while pretending to formulate the answer on the spot. If the candidate hesitated, the AI would even fill in natural-sounding ums and pauses.

Another TikTok, with over 5 million views, showed an interviewer getting suspicious and asking the candidate to share her screen – the candidate complied, hiding the AI app, then resumed the charade once the check was over.

These anecdotes illustrate a broader arms race: job seekers are arming themselves with AI tools to tackle tricky technical and behavioral questions.

By spring 2026, reports surfaced of applicants using coding copilots during programming tests and GPT-based agents to handle case study prompts.

This trend has led some employers to joke that interviews have become “bot vs. bot” - the company’s AI-driven hiring bots filtering candidates, the candidates’ AI helping them fool the bots and the human interviewers.

Fraud-as-a-Service Marketplaces:

Perhaps most worrisome is how organized and commercialized interview fraud has become by 2026. It’s not just isolated individuals hacking together solutions; there is now a booming underground market for interview cheating.

On Telegram, WhatsApp, and Facebook groups, thousands of members are trading tips or offering services. One thriving niche is proxy interview services - entire agencies (often operating from countries with large IT labor forces) that will take interviews on a client’s behalf for a fee.

Some proxy agents even guarantee job placement, advertising a network of experts in various domains (cloud computing, software development, data science, etc.) who can impersonate clients with domain-specific knowledge.

These groups discuss tools openly; references to “Otter” (the transcription app) are common, as it’s used to feed questions and answers in real time.

In one Facebook group, a desperate job hunter posted:

“Any proxy available on AWS DevOps? Tomorrow is interview. Need ‘Otter’ type proxy.”

Within such communities, this request is nothing extraordinary - just another day in the fraud-as-a-service economy.

Beyond live proxies, there are vendors selling whole packages of fake identity documents, leaked interview questions, and even “shadow stand-in” arrangements where someone secretly performs your work after you’re hired.

Law enforcement and cybersecurity firms note that organized crime has latched onto this trend. Synthetic identity kits (bundling stolen personal data with AI-generated IDs) are sold on the dark web to help impostors bypass background checks.

This commoditization means even candidates with limited technical skill can purchase deepfake videos or proxy assistance - effectively, fraud on-demand.

Rising Incidents and Statistics:

Hard numbers are still emerging, but surveys in 2026 confirm that this is not a minor issue. In a Gartner survey of 3,000 job seekers, 6% admitted to engaging in interview fraud - either by having someone else impersonate them or by impersonating someone. That's likely the tip of the iceberg, given not everyone would confess to cheating.

On the employer side, the figures are even more startling. A survey of hiring managers found 59% suspect candidates of using AI tools to misrepresent themselves during the hiring process. One in three managers said they discovered a candidate was using a fake identity or proxy in an interview.

And 62% of hiring professionals admitted that job seekers are now better at faking with AI than recruiters are at detecting it.

Another study in mid-2025 found 17% of HR managers had directly encountered deepfake technology in a video interview. Sectors like tech and finance report the highest rates, but no industry is immune.

Cybersecurity firms hiring remote analysts, banks recruiting IT developers, universities hiring online faculty - all have reported imposters. This trend is forcing companies to take proactive steps (like requiring at least one on-site interview, or beefing up verification), as we’ll discuss in the detection section.

Targeted Sectors (BFSI, IT, BPO, etc.):

While all industries see some fraud, it’s clear that certain sectors are bearing the brunt in 2026. The Banking, Financial Services & Insurance (BFSI) sector faces frequent attempts, given the lucrative access a rogue hire might gain (to money systems, personal data, or trading platforms).

Security reports note that cryptocurrency and fintech companies saw a surge in fake applicants attempting to land remote jobs. Often these impostors aim to get inside for fraud or data theft. The broad IT industry is another hotspot - especially roles like software engineering, data analysis, and technical support that can be done remotely.

Many large IT services firms in India and beyond have struggled with waves of proxy interview cases, as seen in the Infosys example and others.

A hiring manager at a U.S. tech company said this 👇

“The entire first 30 minutes of some interviews now feel like verifying the person’s identity; then we get to their skills.”

BPO (Business Process Outsourcing) and call centers also report higher-than-average fraud attempts, likely because these jobs are often entry-level, high-volume hires done through virtual processes. Additionally, BPO roles may have access to customer data, which is a goldmine for fraudsters.

Even more alarming, critical infrastructure and public sector jobs have seen incidents: a 2025 research report uncovered fake candidates applying in schools, healthcare organizations, and government contractors, where an unqualified or malicious insider could directly impact safety or security.

Such cases raise the stakes from “cheating to get a job” to potentially jeopardizing lives or national security. The common thread is clear: wherever there is remote hiring combined with valuable access or high demand for talent, AI-enabled fraudsters are probing for weaknesses.

This 2026 arms race between deceptive candidates and wary employers is redefining how companies approach hiring. Next, we’ll break down the forms of AI interview fraud in more detail - from deepfake videos to covert coaching tools - to understand exactly how these scams work.

Forms of AI-Powered Interview Fraud

Not all interview fraud is the same. Here we categorize the most prevalent forms of AI-driven cheating observed in hiring processes circa 2026, with an explanation and real-world examples for each:

Deepfake Video Impersonation:

This is the most visually dramatic form. A fraudster uses deepfake software to superimpose a different face onto their live video feed (or even to animate a completely synthetic face). For instance, check out this video that went viral on LinkedIn 👇

A deepfake used to fake the candidate’s appearance while a proxy supplied the voice

👉 Read more: 6 Deepfake Tools Fraudsters Are Using

During a Zoom or Teams interview, the interviewer sees what appears to be a real person’s face, but it’s actually an AI-generated mask. Advanced methods also alter head movements and expressions in real time to align with the impostor’s voice. In such sessions, the “candidate” on camera is just an avatar puppeteered by someone else.

Another case involved an attacker who lifted a legitimate professional’s photo from LinkedIn and used it to create a real-time talking head, fooling interviewers through multiple rounds. Deepfake video frauds are especially used to bypass visual identity verifications - e.g. if a company requires a video interview to compare to the photo on an ID, the fraudster shows an AI-crafted face matching the ID.

Why it’s hard to catch:

These deepfakes can be nearly pixel-perfect; a busy recruiter might not notice slight anomalies in real time. However, red flags include unnatural facial movements or expressions (AI faces may blink at odd intervals, or the smile might not reach the eyes) and lip-sync issues if network lag or software glitches occur.
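The "odd blinking" cue can be put into numbers. Below is a minimal sketch. It assumes an upstream face-landmark model (not shown, and hypothetical here) has already labeled each video frame as eyes-open or eyes-closed. The sketch counts blinks and measures how regular the gaps between them are, on the working assumption that natural blinking is irregular. The function names and the thresholds in `looks_unnatural` are illustrative assumptions, not validated detection values.

```python
from statistics import mean, pstdev


def blink_onsets(eye_closed: list[bool]) -> list[int]:
    """Frame indices where the eye goes from open (False) to closed (True)."""
    return [
        i for i in range(1, len(eye_closed))
        if eye_closed[i] and not eye_closed[i - 1]
    ]


def blink_stats(eye_closed: list[bool], fps: float) -> dict:
    """Blink count, blinks per minute, and regularity of the gaps between blinks."""
    onsets = blink_onsets(eye_closed)
    minutes = len(eye_closed) / fps / 60
    per_minute = len(onsets) / minutes if minutes else 0.0
    gaps = [(b - a) / fps for a, b in zip(onsets, onsets[1:])]
    # Coefficient of variation of the gaps: close to 0 means metronome-like blinking.
    interval_cv = pstdev(gaps) / mean(gaps) if len(gaps) >= 2 else None
    return {"blinks": len(onsets), "per_minute": per_minute, "interval_cv": interval_cv}


def looks_unnatural(stats: dict, min_per_minute: float = 4.0, min_cv: float = 0.1) -> bool:
    """Hypothetical thresholds for illustration only; they are not calibrated."""
    too_few = stats["per_minute"] < min_per_minute
    too_regular = stats["interval_cv"] is not None and stats["interval_cv"] < min_cv
    return too_few or too_regular


# Synthetic 60-second clip at 10 fps with a blink exactly every 5 seconds.
frames = [False] * 600
for start in range(50, 600, 50):
    frames[start] = frames[start + 1] = True

stats = blink_stats(frames, fps=10)
print(stats)
print(looks_unnatural(stats))
```

A flag from a heuristic like this is only a prompt for a closer look. Lighting, webcam frame rate, and the landmark model itself can all distort the per-frame labels.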

AI Voice Cloning and Voiceover Fraud:

In this scenario, the impostor may actually show their real face or use a static picture, but they won’t use their real voice. Instead, they leverage AI-generated speech. Given a sample of a target voice (or just a generic “professional” voice), modern AI can produce live speech that sounds human. The person can type or whisper responses, and a synthesized voice speaks for them on the call. We’ve also seen candidates who play pre-recorded clips of someone else speaking.

The FBI noted “voice spoofing or potentially voice deepfakes” being used in interviews – where the lip movements seen didn’t match the audio. Voice fraud is also common in phone interviews (no video). For example, a non-native speaker might use an AI voice clone with a fluent accent to answer questions, or a male candidate might use a female voice clone if the stolen identity is female.

Why it’s hard to catch: Audio-only, this can be very effective; interviewers have little to authenticate against. Signs of trouble include tiny audio artifacts (robotic timbre or odd intonations) and delays between question and answer (if the person is waiting for the AI to generate speech).

Some companies have started employing voice biometric checks (like asking the candidate to repeat a specific phrase) to detect if the voice stays consistent.
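The question-to-answer delay mentioned above can be measured if the call is transcribed with per-speaker timestamps. Below is a minimal sketch. It assumes a hypothetical list of `(question_end, answer_start)` pairs in seconds, and it reports the median delay and the share of replies that began after a threshold. The 3-second default is an arbitrary assumption for illustration.

```python
from statistics import median


def answer_latencies(turns: list[tuple[float, float]]) -> list[float]:
    """Seconds between the end of each question and the start of the reply."""
    return [answer_start - question_end for question_end, answer_start in turns]


def latency_report(turns: list[tuple[float, float]], slow_threshold_s: float = 3.0) -> dict:
    """Median latency and the share of replies that started after the threshold."""
    latencies = answer_latencies(turns)
    if not latencies:
        raise ValueError("no question/answer turns to analyze")
    slow = [x for x in latencies if x >= slow_threshold_s]
    return {"median_s": median(latencies), "slow_share": len(slow) / len(latencies)}


# Hypothetical timestamps (seconds from call start) for four questions.
turns = [(10, 15), (40, 44), (70, 71), (100, 104)]
report = latency_report(turns)
print(report)
```

A consistently long pause is not proof of anything: nervous candidates, non-native speakers, and people who simply think before answering also pause. The value of a report like this is in spotting a pattern worth probing with follow-up questions.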

Proxy Interviews (Human Stand-ins with AI Aid):

This is a hybrid fraud: another person (a proxy) pretends to be the candidate in the interview, often with the candidate’s knowledge and coordination. Proxy interviews have been around, but AI has supercharged them. Now a proxy can use tools like real-time transcription and ChatGPT to perform even better.

A vivid example: professional proxy interviewers have been using Otter’s live transcript feature to get the interviewer’s questions in text and simultaneously feeding them into AI to formulate ideal answers. The proxy then delivers those answers verbally.

In effect, the proxy may be getting AI help too. Business Insider documented how some proxy interview service providers specifically advertise “Otter-enabled” interviews, where a back-end team supplies answers on the fly via a shared Google Doc or messaging app.

In other cases, the proxy is just a highly qualified person in the field who does the interview without AI, then if “hired,” that person vanishes and the real (unqualified) candidate shows up to work – a classic bait-and-switch.

AI tools also help here by enabling screen-sharing tricks: proxies sometimes remote-control the candidate’s computer or feed them answers through a second screen. For instance, a candidate might openly join the Zoom call (to show their face for ID), but have the proxy remotely solving coding questions in a shared editor or whispering the answers.

Detection signs: Proxies can be caught if there’s a follow-up verification (like comparing the person in a technical interview to the person in HR onboarding via video). Sometimes, as with the Infosys case, the simplest give-away is performance inconsistency – the person who shows up to work can’t replicate the skills shown in the interview.
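Comparing the person in the technical interview with the person at onboarding is often automated by comparing face embeddings. Below is a minimal sketch. It assumes some face-recognition model (not specified here, and treated as hypothetical) has already turned one frame from each stage into a fixed-length vector. The 0.8 cosine-similarity threshold is an assumption; real thresholds depend entirely on the model used.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two embedding vectors (1.0 = same direction)."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    if norm == 0:
        raise ValueError("cannot compare an all-zero embedding")
    return dot / norm


def same_person(interview_emb: list[float], onboarding_emb: list[float],
                threshold: float = 0.8) -> bool:
    """Hypothetical check: treat similarity at or above the threshold as a match."""
    return cosine_similarity(interview_emb, onboarding_emb) >= threshold


# Toy vectors standing in for real embeddings from the two hiring stages.
interview = [0.12, 0.85, 0.31, 0.40]
onboarding = [0.10, 0.80, 0.35, 0.42]
print(round(cosine_similarity(interview, onboarding), 3), same_person(interview, onboarding))
```

A mismatch should trigger a human review rather than an automatic rejection. A live deepfake at both stages would also defeat this check, which is why it works best alongside ID verification.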

Technically, suspicious signs during the interview include audio delays (if lip-syncing) and requests to turn off the camera or audio “due to technical issues” which proxies use to hide their coordination.

Recruiters are also starting to ask spontaneous personal questions (“Tell me about a project on your resume”) to see if the interviewee stumbles – a proxy might not know personal details. But with enough preparation and tech, proxies remain a serious challenge.

Covert AI Assistance (“Whispering AI”):

Unlike a full proxy where another human is impersonating, in this method the actual candidate is present but secretly relying on AI for answers. We touched on this above with ChatGPT co-pilots; it’s worth detailing the tactics.

One popular tactic in 2025 is using mobile apps or browser extensions that act as an AI “whisperer.” For example, apps like FinalRound AI or Cluely provide an overlay on the screen that listens to the interviewer and generates suggested answers in real time.

Candidates keep these suggestions discreetly visible – perhaps on a second monitor, or by using transparent glasses that display text (an emerging tech). More low-tech, some just use a phone (placed next to the webcam) scrolling through notes or AI-generated talking points.

How candidates cheat in technical coding interviews using hidden overlays

The Atlantic highlighted a case where a candidate literally read AI-written answers off her phone during a live video interview. Another variant is the use of covert earbuds: small flesh-colored earphones that a candidate wears. Off-camera, they have a second device (or a friend on mute) feeding them answers.

The AI (or accomplice) listens to the interview via the meeting software or a secondary call and then speaks the ideal answers into the candidate’s ear. The candidate merely parrots what they hear. Tools like Microsoft’s real-time speech translation earbuds can be repurposed for this kind of cheating – essentially doing real-time Q&A translation from interviewer to ChatGPT and back to the candidate’s ear.

Detection signs: An interviewee who appears strangely detached (eye line constantly shifting to read something, awkward pauses before answering while they “listen” to something) can raise suspicions. In one TikTok video, the hiring manager caught on because the candidate kept glancing down and was reciting perfect answers in a monotone. When asked to share their screen or repeat the answer without looking, the candidate fumbled.

Additionally, procedural checks like asking the candidate to do a 360° pan of their room at the start (to ensure no visible devices or people are helping) are being tried, though they won’t catch an earbud or a cleverly placed phone.

This category of fraud is particularly insidious because the person on camera is real (not a deepfake), and they may well be the same person who shows up for the job – but they might have grossly exaggerated their knowledge/ability by leaning on AI.

Multi-Device & Multi-Window Cheating:

Even when not using advanced AI, many candidates exploit the remote setup by using multiple screens or devices to find answers. Think of it as having an open-book exam. A candidate might have Google or Stack Overflow open on a second monitor, or be furtively typing the interviewer’s questions into ChatGPT on their phone (if not using the fully automated pipeline described earlier).

In coding interviews, some attempt to run code in an IDE on another screen or seek solutions online while stalling for time. This overlaps with general cheating, but AI makes it far easier – a candidate can copy-paste a technical question into an AI and get a structured answer almost instantly.

Telltale signs: Quick, copy-and-paste-sounding answers full of detail (more than the candidate’s normal speech) can be one. Interviewers sometimes hear keyboard tapping while they are still asking a question, suggesting the candidate may be looking it up. To counter this, some tech firms now ask candidates to share their entire screen during virtual interviews, or use specialized proctoring software that flags if a candidate clicks away from the interview window.

However, dedicated cheaters use workarounds: for example, multiple monitors (which screen-sharing might not capture), or a separate laptop next to them out of the webcam’s view. One student famously wrote software to overlay answers on his screen (visible only to him, not via screen share) to beat a major tech company’s interview, essentially a personal AR HUD for answers.

This kind of “DIY” cheating rig is likely to proliferate as tech-savvy grads try to outsmart hiring filters.
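The "clicks away from the interview window" signal that proctoring software relies on can be summarized from an event log. Below is a minimal sketch. It assumes a hypothetical log of timestamped `"blur"` and `"focus"` events, the kind a browser fires when a window loses or regains focus. The sketch counts how often the candidate left the window and for how long in total. As the paragraph above notes, it sees nothing that happens on a second laptop or phone.

```python
def summarize_focus_log(events: list[tuple[float, str]], session_end: float) -> tuple[int, float]:
    """Return (times the candidate left the window, total seconds away).

    `events` must be sorted by timestamp; each kind is "blur" (left) or "focus" (returned).
    """
    away_since = None
    switches = 0
    total_away = 0.0
    for ts, kind in events:
        if kind == "blur" and away_since is None:
            away_since = ts
            switches += 1
        elif kind == "focus" and away_since is not None:
            total_away += ts - away_since
            away_since = None
    # Still away when the session ended: count the time up to the end.
    if away_since is not None:
        total_away += session_end - away_since
    return switches, total_away


# Hypothetical log (seconds from session start) for a 520-second session.
log = [(10, "blur"), (25, "focus"), (100, "blur"), (130, "focus"), (500, "blur")]
print(summarize_focus_log(log, session_end=520))
```

Duplicate `"blur"` events while the candidate is already away are ignored, so a noisy log does not inflate the switch count.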

Document Forgery & Credential Fraud (AI-Generated Credentials):

While not part of the live interview per se, it’s worth noting that AI is also helping candidates fabricate resumes, portfolios, and even entire work identities. Natural language generation is used to create polished resumes full of keywords (some candidates generate multiple personalized resumes for each application).

More concerning, AI image generators can produce fake diplomas, certificates, or work samples. There are cases of candidates submitting AI-created project screenshots or coding portfolios that they cannot reproduce in a technical test.

Companies have flagged document falsification with AI as a persistent threat – from “Photoshopped” diplomas to deepfake reference letters. During interviews, this manifests when a candidate backs up claims with bogus documentation or links.

A portfolio might include artwork or code snippets that were actually made by an AI model like DALL-E or Copilot, not by the candidate – essentially plagiarism via AI. If an interviewer asks detailed questions about a portfolio piece and the candidate is evasive or vague, it could indicate the work isn’t truly theirs.

Each of these forms of fraud – whether it’s a full deepfake avatar or just an AI whispering assistant – undermines the fundamental premise of hiring: that you’re evaluating the genuine qualities and knowledge of a real person.

They range from blatant identity fraud to subtle cheating, but all create mismatches between who the employer thinks they hired and who they actually get.

Why It Matters: Risks and Impact of AI Interview Fraud

Hiring fraud isn’t just a harmless fib; it carries significant consequences for organizations and even broader society. Here are the key reasons why AI-driven interview fraud matters – economically, operationally, and ethically:

Economic Impact of Bad Hires:

A fraudulent hire can cost a company dearly. The direct costs include recruitment expenses, salary paid to an unqualified person, and the cost of replacing them. But beyond that, think of the productivity loss and opportunity cost. If a position stays effectively unfilled (because the person hired can’t actually do the work), projects get delayed and teams are strained.

One survey found 70% of managers believe hiring fraud is an underestimated financial risk that company leadership needs to pay more attention to. The same study reported that 23% of companies had lost over $50K in the past year due to hiring or identity fraud, through factors like project delays, training investments lost, or severance costs.

In industries like staffing and consulting, the damage can include refunds to clients or penalties if a placed candidate is discovered to be fake. An investigation can cost $15–25K, legal fees another $5–10K, and lost team productivity during disruption can be a 20–30% hit to output.

Multiply this by multiple incidents, and the losses mount quickly. In short, hiring a fraudster is like a slow-motion breach of contract: you pay for talent you didn’t actually get.
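The per-incident figures above can be combined into a rough range. Below is a minimal sketch using the quoted ranges: $15–25K for investigation, $5–10K in legal fees, and a 20–30% hit to output. It assumes the productivity hit applies to the team's monthly cost over the disruption period. That interpretation, the function name, and the example team size are assumptions, not part of the survey data.

```python
def incident_cost_range(team_monthly_cost: int, disruption_months: int) -> tuple[int, int]:
    """Low/high cost estimate for one fraudulent-hire incident, in dollars."""
    investigation = (15_000, 25_000)  # range quoted in the text
    legal = (5_000, 10_000)           # range quoted in the text
    productivity_pct = (20, 30)       # % hit to team output quoted in the text
    # Assumption: output lost = team cost during the disruption x the % hit.
    disrupted_output = team_monthly_cost * disruption_months
    low = investigation[0] + legal[0] + disrupted_output * productivity_pct[0] // 100
    high = investigation[1] + legal[1] + disrupted_output * productivity_pct[1] // 100
    return low, high


# Hypothetical team costing $100,000 a month, disrupted for two months.
low, high = incident_cost_range(team_monthly_cost=100_000, disruption_months=2)
print(f"${low:,} to ${high:,} per incident")
```

To model several incidents, multiply the range by the expected incident count. Note that the estimate leaves out salary already paid, recruiting costs, and client penalties, which the text lists separately.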

Security and Data Breach Risks:

Perhaps the most chilling risk is when fraud isn’t just about an unqualified person getting a job, but a malicious actor infiltrating a company. Economic motives (steady paycheck) aside, some impostors have far more nefarious goals: stealing data, funds, or trade secrets, or installing malware.

The 2024 KnowBe4 incident is a prime example – a purported software engineer, hired through a deepfake identity, immediately attempted to inject malware into the company’s systems. In that case, the individual was traced back to a North Korean hacking operation, and their goal was likely espionage or ransom, not the job itself.

Government officials warn that nation-state cyber groups are exploiting remote work job openings as a new attack vector. If a threat actor can successfully pose as an employee, they gain insider access that can bypass many security perimeters.

Even a less sophisticated fraudster might see employment as an opportunity to embezzle (e.g., a fake accounts payable clerk could reroute payments).

The insider threat angle is huge – and AI makes it easier for bad guys to become insiders under false pretenses.

Consider financial institutions: a fake IT admin in a bank could quietly create backdoors or siphon off data on transactions. Or consider healthcare: a fake data analyst in a hospital might access patient records or research data for misuse.

The FBI’s 2022 warning explicitly noted that some of the positions targeted by deepfake applicants included access to customer PII, financial data, and corporate databases. That is a nightmare scenario if the data were misused. Beyond theft, there’s also the risk of sabotage by someone who was never properly vetted.

If critical infrastructure firms (power grids, telecom, etc.) were to hire a deepfake infiltrator, the result could be service disruptions or worse. That’s why this issue has drawn concern from not just HR teams but also cybersecurity and even national security agencies.

Performance and Team Productivity Issues:

In cases where the fraudster isn’t a spy but simply an underqualified person who cheated to get in, companies face a different problem: the incompetent employee. Teams quickly notice when someone can’t do what they claimed in the interview. This can lead to project failures or extra workload on colleagues who must cover for the underperformer.

For example, an IT services company might place a developer at a client site only to find they can’t code the simplest tasks. That is embarrassing for the firm and costly if service level agreements are missed. In the Infosys proxy case, the new hire’s poor communication skills raised red flags almost immediately, because the person in the interview (actually a friend standing in for him) had spoken fluent English. Co-workers had to pick up the slack in the meantime.

Team morale can suffer; colleagues feel duped or burdened, and trust within the team erodes when such deception comes to light. Moreover, managers must spend time coaching or managing out the person, instead of advancing the team’s real goals.

It’s a lose-lose situation: the fraudulent hire often ends up fired (now with a tainted record), and the company has wasted time and money.

Reputational Damage:

Trust and reputation are on the line for companies falling victim to these scams. If word gets out (through media or industry grapevines) that your company hired a fake, it can be embarrassing and damaging to the brand.

Clients may lose confidence – for instance, if a bank accidentally hires a fake employee who could have accessed client accounts, big customers will rightly ask, “What are your vetting practices? How did you let this slip through?”

In sectors like consulting or outsourcing, a client might terminate a contract if they discover the personnel provided were not who they purported to be.

There’s also PR fallout: news stories about “XYZ Corp was duped by a deepfake candidate” make for viral headlines. Start-ups especially fear this, as they vie for investor confidence; a fraud incident might raise doubts about governance and oversight.

Additionally, if an impostor causes harm (say, a fake engineer’s error causes an outage or a security breach), that failure reflects on the company’s competence.

Employer branding suffers too – future job seekers might be wary of a company known for lax hiring security, and current employees could feel ashamed or uneasy. In short, interview fraud doesn’t just hit the balance sheet; it strikes at a company’s credibility.

For HR leaders, preventing it is as much about maintaining the organization’s integrity as it is about avoiding tangible losses.

Legal and Compliance Risks:

Hiring fraud can also put companies in murky legal territory. If a fraudulent hire causes damage (financial, cybersecurity, etc.), stakeholders or regulators might question the company’s diligence. In regulated industries (finance, healthcare, government contracting), there are often compliance requirements for employee background screening.

For example, SOC 2 (for service providers) and ISO 27001 (for information security management) both implicitly require controlling who has access to systems and data – hiring someone under false identity could be viewed as a failure of those controls.

If the person doing the job is not the person who was actually vetted, that gap could even violate audit requirements. We’ve seen cases where companies had to report incidents to authorities or customers because a fake employee constituted a security breach.

Moreover, companies have to navigate privacy and equal opportunity laws when implementing new anti-fraud measures. Verifying identities via video or biometrics has to be balanced with privacy regulations like GDPR (e.g., storing a candidate’s video for face recognition might require consent and clear purpose).

The Equal Employment Opportunity Commission (EEOC) in the U.S. also keeps an eye on hiring practices. Catching fraud is important, but firms must ensure they don’t inadvertently discriminate against legitimate candidates or create undue hurdles for them. It’s a fine line.

Interestingly, we are also seeing legal consequences for the fraudsters. In India’s Infosys case, the employee who arranged the proxy was charged under the Bharatiya Nyaya Sanhita, India’s new criminal code, with cheating and cheating by personation. In the U.S., someone who assists others in such fraud (like the Nashville man who helped North Koreans get jobs under false identities) can face serious charges for conspiracy, wire fraud, or identity theft.

As the legal system catches up, being an interview proxy or deepfake creator for hire could land people in jail, which in turn should deter some from attempting it. For the companies, though, the immediate concern is compliance: demonstrating that they exercised due diligence in hiring.

In sectors like defense or finance, we may soon see regulations mandating stronger identity verification for remote hires. Companies that get ahead of that curve will avoid both the regulatory ire and the actual damage from fraud.

In sum, AI-driven interview fraud strikes at the heart of a company’s operations and trust. It can lead to hiring someone who empties your coffers or someone who just can’t do the job – both scenarios you desperately want to avoid. It can expose sensitive data or simply waste precious time.

And in all cases, it’s an affront to meritocracy and fairness in hiring; honest candidates are undermined when cheats prosper. This is why understanding and countering this trend is urgent for HR and security leaders alike.

To illustrate the reality of these threats, let’s look at a few case studies and examples of interview fraud in action – some real incidents, and some emerging patterns that show where things might be headed.

Case Studies & Examples

Real-world examples drive home how AI interview fraud plays out and the damage it can do. Here are three instructive case studies from recent years:

Case 1: Deepfake Infiltration of a Cybersecurity Company (2024)

“The KnowBe4 Incident.” One of the most eye-opening incidents occurred at KnowBe4, a U.S.-based cybersecurity training firm, in 2024. An individual applied for a remote software engineer role using the identity of a U.S. citizen – complete with a stolen Social Security number and an AI-doctored photo for the ID.

This person passed multiple video interviews and background checks by leveraging AI: the photo on their documents was a subtly AI-altered image derived from a stock photo, and they likely used tricks to mask their true appearance and location.

After being hired, this “employee” didn’t behave like a normal new hire. Within days, they began attempting to upload malware onto company systems. Thankfully, KnowBe4’s security team caught the suspicious activity. An investigation revealed the horrifying truth: the new hire was actually a North Korean hacker operating from abroad.

They had the company’s laptop shipped to an “IT mule” address and accessed it via VPN. They even worked during U.S. business hours to avoid detection. In effect, a hostile actor had embedded themselves inside a cybersecurity company, the very kind of organization that should be hard to fool.

The goal appeared to be espionage or financial gain (installing malware could have facilitated ransomware or data theft). This case was a huge wake-up call. It showed that even rigorous hiring protocols (interviews, reference checks, etc.) can be defeated by a well-planned deepfake identity.

The fallout prompted an urgent review of remote hiring practices. KnowBe4 worked with law enforcement, and the incident tied into broader warnings by U.S. agencies about North Korea’s tactic of placing its IT workers in jobs at foreign companies under false identities.

For the industry, this example underscored that if it can happen to a security-savvy firm, it can happen to anyone. It’s a stark example of the national security implications of hiring fraud in the age of AI.

Case 2: Proxy Interview Scam at an IT Giant (2025)

“The Infosys Impostor.” In early 2025, Infosys – one of India’s largest IT services companies – found itself in the news for an internal fraud case that reads like a movie plot. A young engineering graduate from Telangana, India, was struggling to get hired due to poor English communication skills. So he tried a shortcut: he had a friend impersonate him in a virtual job interview for an Infosys position.

The friend, speaking fluent English and answering technical questions, secured the job offer on his behalf. The real candidate then joined Infosys in person for work. It took only about 15 days for colleagues and managers to smell something fishy. The new hire was nowhere near as articulate or competent as “he” had been in the interview.

In fact, coworkers were baffled how this individual cleared the interview at all. Infosys’ HR and security team did a retroactive check: they reviewed the video interview recording and saw that the person who appeared in that interview video simply wasn’t the same person now on the job (slight physical differences and the drastic drop in communication ability gave it away).

The employee was confronted and quickly confessed to the proxy ruse. Infosys not only fired him but also pursued legal action. The matter was reported to police, and an FIR (First Information Report) was filed. He was charged with cheating and cheating by personation under the Bharatiya Nyaya Sanhita, India’s revamped criminal code.

What’s more, after being caught, the audacious impostor demanded to be paid for the 15 days he had worked. The request predictably went nowhere. The case became a cautionary tale across the Indian IT industry, where large companies then announced stricter verification steps and even lifetime blacklists for anyone attempting such fraud.

It highlighted the prevalent issue of “backdoor” hires via proxy in the highly competitive job market. And it demonstrates a simpler motive: not espionage, but a candidate trying to bypass personal shortcomings (in this case, language skills) by literally inserting someone else into the process.

The Infosys incident suggests that robust onboarding verification might have prevented the fiasco. That could mean matching ID photos to the person, or running a quick fresh re-interview on day one. It also illustrates how proxy interview scams can defeat traditional hiring filters, forcing companies to implement multi-step identity checks to catch inconsistencies.

Case 3: Organized Proxy Networks and “Otter” Fraud (2024)

“The Proxy-for-Hire Underground.” Beyond individual incidents, there’s a broader phenomenon where interview fraud is occurring at scale via loosely organized networks online. In 2024, investigative reporting by Business Insider uncovered the inner workings of several proxy interview rings operating primarily through social media groups.

These groups, with names like “Technical Interviews Proxy Help” on Facebook, had thousands of members across the globe. Here’s how a typical case from those groups would go: A job seeker posts a request, specifying the interview topic (say, an AWS Cloud Architect role) and the time of their interview.

They mention tools – the common code word was needing an “Otter type proxy,” indicating they expect the use of Otter.ai for real-time transcript and answer feeding. Almost immediately, experienced proxy operators respond privately, negotiating a fee (which can range from a few hundred to a few thousand dollars depending on the job’s seniority and region).

The proxy and candidate then set up a strategy. In one example, for a coding interview on Zoom, the candidate logged in and introduced themselves, then feigned “bandwidth issues” and turned off video, allowing the proxy (connected via a second audio-only link) to take over answering technical questions.

In another, the candidate wore a discreet earpiece. The proxy listened to the interview in real time, muted on the meeting link or a phone call, and fed answers over a voice channel straight to the candidate’s ear, sometimes leaning on AI-generated answers as a backstop.

These proxies often guarantee passing certain rounds, and some even offer money-back guarantees. Business Insider’s report shared snippets of chats, one reading: “Tomorrow is interview. Need Otter proxy.” and a reply: “Available – have 5+ yrs exp in DevOps, will handle – DM”. Such professionalization means many candidates were getting away with it.

One proxy interviewed by BI said that he knew it was unethical but framed it as helping desperate job seekers who “just need to get in the door”. However, he also admitted it’s a cat-and-mouse game – some companies caught on and started demanding in-person final rounds, which proxies obviously can’t attend.

This organized proxy trend matters because it shows fraudulent interviewing has become a service one can simply buy, and that there’s a playbook for it (use Otter, use Telegram, etc.). It’s not limited to one company or one geography – it’s a widespread cheating economy.

Companies have begun monitoring these channels covertly, and a few stings have been set up, but by nature these groups are private and often shift platforms. The scale can be startling: some recruiters estimate that in certain hot tech job markets in 2023–24, up to 10–15% of remote interviews had some form of third-party assistance or impersonation (though exact figures are hard to pin down).

Case 3 isn’t a single incident but rather a composite of many that reveals the industrialization of interview fraud. It underscores that any comprehensive solution needs to address not just lone bad actors, but an entire underground ecosystem that keeps adapting.

Detection and Prevention: Fighting Back

Faced with the onslaught of AI-powered fraud, companies and solution providers are racing to develop countermeasures. 2026 has seen a surge in tools, techniques, and policies aimed at preserving interview integrity.

This section details how organizations are detecting fake candidates and preventing fraud, as well as the frameworks guiding these efforts.

1. Advanced AI-Based Detection Tools:

It takes an AI to catch an AI. A number of vendors have rolled out forensic AI tools that monitor interviews for signs of deepfakery or scripted answers.

Some HR tech companies have also developed AI that can detect whether a candidate’s spoken answer was likely generated by ChatGPT or another AI, by analyzing linguistic patterns. One early entrant, Phenom, built a feature that flags AI-generated interview answers by looking for semantic patterns that are “too perfect” or repetitive in a way human speech isn’t.

These tools might pop up an alert to the recruiter like: “Possible AI assistance detected in this response.” While not foolproof, they add a layer of defense. On the deepfake visual side, firms like Microsoft and Adobe have developed image forensics that can sometimes detect when a video frame has been computer-generated or if a person’s image matches known synthetic face datasets (some deepfake tools inadvertently leave “watermarks” in the pixel distribution, which specialized AI can catch).

Voice biometrics are also huge: banks have used voiceprints to authenticate customers, and now similar tech is offered for hiring. A candidate might be asked to provide a short recorded statement at application time; then during the live interview, their voice is continually compared to that baseline to ensure it’s the same person (an AI voice clone would ideally be flagged as not matching the natural variances of the original human).
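The baseline comparison described above can be sketched as a similarity check between voice embeddings. The sketch assumes that some speaker-embedding model has already turned each audio clip into a fixed-length vector. That model is not shown and is not any specific vendor's. The 0.75 threshold and the toy three-dimensional vectors are hypothetical. A plain similarity check is exactly what a good clone is built to pass, so production systems pair it with dedicated anti-spoofing models.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length embedding vectors."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("embeddings must be non-zero")
    return dot / (norm_a * norm_b)


def flag_voice_segments(baseline: list[float],
                        segments: list[list[float]],
                        threshold: float = 0.75) -> list[int]:
    """Return indices of live-interview segments that drift from the enrolled voice.

    baseline: embedding of the statement recorded at application time.
    segments: embeddings of consecutive chunks of the live interview.
    threshold: hypothetical cut-off; real systems tune it on labelled data.
    """
    return [i for i, emb in enumerate(segments)
            if cosine_similarity(baseline, emb) < threshold]


if __name__ == "__main__":
    enrolled = [0.9, 0.1, 0.4]        # toy baseline embedding
    live = [[0.88, 0.12, 0.41],       # close to the baseline
            [0.1, 0.9, 0.2]]          # a very different voice
    print(flag_voice_segments(enrolled, live))  # [1]
```

Checking segment by segment, rather than once per call, matters here. A mid-interview handover to a proxy only shows up as a drop in similarity partway through.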

These detection technologies are early-stage, and determined fraudsters try to test and outwit them (for instance, by training AI to sound more “natural” or inserting noise to fool detectors). Still, they are becoming an essential part of the hiring security arsenal.

2. Multi-Factor Identity Verification:

Companies are increasingly moving toward rigorous identity verification as part of hiring. This goes beyond the old standard of checking IDs on the first day. Now, some hiring processes include identity checks at multiple stages.

For instance, before a scheduled video interview, a candidate may be required to upload a government ID and a real-time selfie video through a secure portal. That selfie uses liveness detection (asking the person to turn their head or blink) to ensure it’s not just a photo being held up.

The ID is then matched to the selfie using facial recognition. This is akin to what many banks do for online customer onboarding, now repurposed for HR. Services offering “digital identity verification for hiring” have popped up.

While deepfakes could theoretically beat a static check, doing it live and unpredictably makes it harder for impostors to prepare. Another practice is verifying identity again at onboarding, and even a few weeks into the job (a quick video call to ensure the person still matches their ID and original interview). These multi-stage verifications make it less likely someone can slip through all the cracks.
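The point about checking "live and unpredictably" can be made concrete with a randomized challenge-response step. In this sketch, the challenge list, the three-prompt length, and the 10-second window are hypothetical. `observed` stands in for whatever actions a vision model actually detected on camera; that model is not shown. The sketch illustrates only the unpredictable selection and the ordering and timing check.

```python
import secrets
import time
from dataclasses import dataclass

# Hypothetical prompt pool for a liveness check.
CHALLENGES = ["turn head left", "turn head right", "blink twice", "look up", "smile"]


@dataclass
class Challenge:
    prompts: list[str]
    issued_at: float  # time.monotonic() value when the prompts were shown


def issue_challenge(n: int = 3) -> Challenge:
    """Pick n distinct prompts with the OS's cryptographically secure RNG,
    so an impostor cannot pre-record a matching clip."""
    prompts = secrets.SystemRandom().sample(CHALLENGES, n)
    return Challenge(prompts=prompts, issued_at=time.monotonic())


def verify_response(challenge: Challenge, observed: list[str],
                    responded_at: float, max_seconds: float = 10.0) -> bool:
    """Pass only if every prompted action was observed, in order, within the window."""
    in_time = 0 <= responded_at - challenge.issued_at <= max_seconds
    return in_time and observed == challenge.prompts


if __name__ == "__main__":
    ch = issue_challenge()
    print("Please:", ", ".join(ch.prompts))
    # Simulated detector output: every action performed, 4 seconds after the prompt.
    print(verify_response(ch, list(ch.prompts), ch.issued_at + 4.0))   # True
    # Same actions, but 30 seconds later: outside the window.
    print(verify_response(ch, list(ch.prompts), ch.issued_at + 30.0))  # False
```

The short window matters as much as the randomness. A fraudster who has to synthesize a new response on the fly has less time to do it convincingly.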

In India, some IT firms now require new hires to physically visit a trusted third-party center for identity verification (fingerprint or Aadhaar biometric verification) before the remote work equipment is issued.

All these steps align with a zero-trust mindset: don’t assume the person you interviewed is who they say – test and confirm it explicitly.

3. Behavioral Analytics and Live Monitoring:

Technical signals can catch a lot, but human observation is still crucial. Recruiters and interviewers are being trained to spot behavioral red flags of fraud in real time. For example, unusually long delays before answers, as if the person is waiting for input, might indicate they’re using AI or getting help.
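Interviewers can back up that intuition with simple timing data from the interview recording. The sketch below assumes you already have two timestamps for each question: when the interviewer finished asking and when the candidate started answering. A timestamped transcript would provide both. The 4-second pause and 50% share thresholds are hypothetical. A flag here is a prompt for human follow-up, not proof, since nervous or non-native speakers also pause.

```python
from statistics import median


def latency_red_flags(question_end_s: list[float], answer_start_s: list[float],
                      pause_threshold_s: float = 4.0,
                      min_share: float = 0.5) -> dict:
    """Summarize answer delays and flag sessions where most answers start late."""
    if not question_end_s or len(question_end_s) != len(answer_start_s):
        raise ValueError("need one answer start time per question")
    delays = [a - q for q, a in zip(question_end_s, answer_start_s)]
    long_pauses = sum(1 for d in delays if d > pause_threshold_s)
    share = long_pauses / len(delays)
    return {
        "median_delay_s": median(delays),
        "long_pause_share": share,
        "flag": share >= min_share,
    }


if __name__ == "__main__":
    asked = [10, 60, 120, 200]      # seconds into the call
    answered = [11.5, 66, 127, 201]
    print(latency_red_flags(asked, answered))
    # {'median_delay_s': 3.75, 'long_pause_share': 0.5, 'flag': True}
```

A consistent pattern is more telling than a single slow answer. That is why the sketch flags on the share of long pauses rather than on any one delay.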

If a candidate’s eyes keep darting away (reading something) or they seem to be reciting rather than conversing, that’s noted. Interviewers might throw curveball follow-up questions that AI would struggle with, or ask the candidate to explain something in a different way to see if they actually understand it.

Some companies have started using controlled environments for testing: for coding exams or technical tests, they use proctoring software that records the screen and camera, and even locks the browser to prevent searching. This is similar to how remote academic exams are monitored.

Some companies also require candidates to share their screen during the interview. That lets the interviewer see whether any unauthorized apps are open or whether someone else is feeding the candidate answers.

In critical interviews, a second interviewer might quietly observe (off camera) to watch for signs of coaching. Another clever tactic: requesting a 360° pan of the room with the webcam at the start, to ensure no one else is there and that the environment is normal (some have caught candidates who had post-it notes with prompts stuck around their screen this way).

Behavioral cues can also be flags: a candidate who refuses to turn on video, or who conveniently “disconnects” whenever asked something spontaneous. Essentially, companies are turning what used to be gut intuition into a formal checklist.

Even things like IP address tracking are used – e.g., if a candidate claims to be in California but the interview connection shows an IP from a country where many proxy scammers operate, that’s investigated.
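A minimal version of that location check, using Python's standard `ipaddress` module, might look like the sketch below. The lookup table is hypothetical and stands in for a commercial GeoIP database. Its ranges are RFC 5737 documentation blocks used as placeholders, and the region codes are made up. VPNs can hide a proxy's true location, and legitimate VPN use or travel produces false positives, so a mismatch should lead to an investigation, not a verdict.

```python
import ipaddress
from typing import Optional

# Hypothetical lookup table standing in for a commercial GeoIP database.
# The ranges are RFC 5737 documentation blocks, used purely as placeholders.
GEO_TABLE = {
    ipaddress.ip_network("192.0.2.0/24"): "US-CA",
    ipaddress.ip_network("198.51.100.0/24"): "US-NY",
    ipaddress.ip_network("203.0.113.0/24"): "ZZ",  # placeholder for an unexpected region
}


def lookup_region(ip: str) -> Optional[str]:
    """Return the region code for an IP, or None if it is not in the table."""
    addr = ipaddress.ip_address(ip)
    for network, region in GEO_TABLE.items():
        if addr in network:
            return region
    return None


def location_mismatch(claimed_region: str, connection_ip: str) -> bool:
    """True when the connection does not resolve to the region the candidate claimed.
    Unknown IPs count as mismatches so that a human reviews them."""
    region = lookup_region(connection_ip)
    return region is None or region != claimed_region


if __name__ == "__main__":
    print(location_mismatch("US-CA", "192.0.2.44"))   # False: matches the claim
    print(location_mismatch("US-CA", "203.0.113.9"))  # True: unexpected region
```

Treating unknown addresses as mismatches is a deliberate design choice. It routes gaps in the lookup data to a human instead of silently passing them.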

All these behavioral and technical checks won’t stop 100% of fraud, but they raise the bar, often causing an unprepared fraudster to slip up or withdraw.

4. Policy and Compliance Frameworks:

Alongside tech solutions, organizations are updating their policies and aligning with compliance standards to combat interview fraud. Many are implementing strict background screening policies that explicitly include identity verification.

For instance, background check companies now offer “identity fraud screening” as part of pre-employment checks, which may involve database checks for synthetic identities or cross-verifying an applicant’s work history to ensure it’s real.

Companies handling sensitive data (finance, healthcare) are including statements in hiring policies that any misrepresentation in the hiring process is grounds for immediate termination – a deterrent clause. From a regulatory compliance perspective, firms are ensuring their anti-fraud measures respect privacy laws.

When biometric data is collected (voice, face scans), GDPR requires informing candidates and possibly obtaining consent, so legal teams have updated candidate privacy notices accordingly. In the U.S., some states like Illinois and Texas have biometric information laws that might cover these practices.

Equal opportunity is also considered: for example, companies must be careful that requiring an in-person interview (to prevent fraud) doesn’t unintentionally disadvantage disabled candidates who may not be able to travel. So there are often alternatives offered, like using a certified identity verification service in lieu of in-person for those who need accommodation.

Industry standards are evolving too. Under SOC 2’s Trust Services Criteria (notably Security and Processing Integrity), auditors are starting to check whether a company has controls to prevent unauthorized access via fake employees. This might include reviewing the hiring process for identity checks. ISO 27001 (information security) likewise has controls for HR security, and its 2022 update emphasizes verifying candidates’ identity and qualifications. So to maintain certifications, companies are formalizing these steps.

Another dimension is government guidance: agencies like the FBI have recommended measures such as at least one in-person meeting before hiring for critical roles. Some companies have adopted that. Even if all interviews are remote, the final offer may be contingent on a brief face-to-face verification. This is sometimes done via a local third party, or by requiring the candidate to come to a co-working space and show ID.

It’s a throwback in an era of remote work, but big firms are doing it – Google’s CEO publicly stated they reintroduced an in-person round to “make sure the fundamentals are there” precisely because of AI cheating concerns. On the compliance front, auditing and logging of the interview process has increased.

Companies now keep detailed logs: recording video interviews (with candidate consent) so they can review later if fraud is suspected, keeping track of IP addresses, device fingerprints of interviewees, etc. These records mean if someone tries a bait-and-switch (proxy at interview, different person shows up Day 1), there’s evidence to act on.
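Those logs only help if someone compares them. One simple comparison is sketched here: check whether all of a candidate's interview rounds came from the same device. A proxy taking over a later round often joins from different hardware. The `SessionLog` fields and the two-attribute fingerprint are hypothetical simplifications, since commercial device fingerprinting uses many more signals. A changed device can also have innocent explanations, so a hit should trigger review rather than rejection.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class SessionLog:
    candidate_id: str
    round_name: str
    user_agent: str
    screen: str  # e.g. "1920x1080"


def device_fingerprint(log: SessionLog) -> str:
    """Crude fingerprint: a truncated SHA-256 of a couple of client attributes."""
    raw = f"{log.user_agent}|{log.screen}".encode("utf-8")
    return hashlib.sha256(raw).hexdigest()[:16]


def candidates_with_device_changes(logs: list[SessionLog]) -> list[str]:
    """Return IDs of candidates whose interview rounds came from more than one device."""
    fingerprints: dict[str, set[str]] = {}
    for log in logs:
        fingerprints.setdefault(log.candidate_id, set()).add(device_fingerprint(log))
    return sorted(cid for cid, fps in fingerprints.items() if len(fps) > 1)


if __name__ == "__main__":
    logs = [
        SessionLog("c1", "screen", "Mozilla/5.0 (Mac)", "1440x900"),
        SessionLog("c1", "technical", "Mozilla/5.0 (Mac)", "1440x900"),
        SessionLog("c2", "screen", "Mozilla/5.0 (Windows)", "1920x1080"),
        SessionLog("c2", "technical", "Mozilla/5.0 (Linux)", "2560x1440"),
    ]
    print(candidates_with_device_changes(logs))  # ['c2']
```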

5. Training and Awareness:

A softer but crucial aspect is that HR staff, hiring managers, and even candidates are being educated about interview fraud. Companies are running training sessions for interviewers on how to spot deepfakes and proxies. For example, showing interviewers example clips of deepfake vs real candidates to sharpen their eyes, or teaching them about common proxy tactics (like the “bad connection” excuse).

Recruiters are learning to ask more validation questions. On the candidate side, some firms now include an attestation in the application: candidates must sign that they will not misrepresent themselves or use unauthorized aid, under penalty of disqualification. This at least sets a clear expectation and could dissuade those on the fence about cheating.

Professional associations and bodies (like the Society for Human Resource Management, SHRM) have started publishing guidelines and best practices to tackle interview fraud. The message is that ensuring “interview integrity” is now part of the HR mandate.

In summary, detection and prevention in 2026 is multi-pronged: use AI to catch AI, verify identity at every turn, observe keenly during interviews, and solidify policies so everyone knows the rules of engagement. Companies at the forefront combine technology (like deepfake detection, voice biometrics) with process (like surprise ID checks, recorded assessments) and policy (compliance and consequences for fraud).

And while these defenses are growing, the war is far from over – as fraudsters will undoubtedly adapt. That leads us to consider what lies beyond 2026. In the next section, we’ll gaze into the future: how might this cat-and-mouse game evolve over the coming decade, and what new challenges and solutions are on the horizon?

Beyond 2026: The Next Decade of Interview Integrity Challenges

Looking ahead 5–10 years, we can anticipate both escalation in fraud tactics and evolution in defenses. The hiring landscape in 2030 might involve technologies barely mainstream today. Here are some forward-looking projections for the future of AI interview fraud and its countermeasures:

AI vs AI: An Arms Race Intensifies:

We are likely entering a period of AI-vs-AI battles in the hiring process. As companies deploy AI-driven candidate verification, fraudsters will respond with more sophisticated AI to evade detection.

For example, if voice biometrics become standard, fraudsters might train their voice clones to include dynamic variation to better mimic “liveness.” If AI is checking for ChatGPT-style answers, candidates will use more advanced language models fine-tuned to their own speaking style, so the answers sound authentically imperfect.

We might see Generative Adversarial Networks (GANs) specifically trained to fool detection algorithms – essentially, deepfake tech designed to defeat deepfake detectors. This is akin to spam email senders constantly adapting to spam filters.

In hiring, it could mean ever-more-convincing fake voices and faces. However, detection AI will also improve – perhaps by using multi-modal verification (cross-checking audio, video, and even the content of answers for consistency).

There’s research into using keystroke dynamics (how a person types or moves their mouse) as a biometric. By 2030, an interview platform might quietly monitor a candidate’s typing patterns during a technical test. Those patterns are hard to fake unless an AI is driving the keyboard directly.
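A toy version of that typing-pattern comparison looks like this. It reduces keystroke dynamics to a single feature, the average gap between key presses. It then compares a new sample against an enrolled profile using a z-score. The timestamps and the z ≤ 2 cut-off are hypothetical. Real keystroke-biometric systems use richer features, such as per-key hold times and digraph latencies, and trained models.

```python
from statistics import mean, stdev


def inter_key_intervals(timestamps_ms: list[float]) -> list[float]:
    """Gaps between consecutive key presses, in milliseconds."""
    return [later - earlier for earlier, later in zip(timestamps_ms, timestamps_ms[1:])]


def typing_profile(timestamps_ms: list[float]) -> tuple[float, float]:
    """Return (mean, standard deviation) of the inter-key gaps."""
    gaps = inter_key_intervals(timestamps_ms)
    if len(gaps) < 2:
        raise ValueError("need at least three key presses")
    return mean(gaps), stdev(gaps)


def matches_profile(enrolled: tuple[float, float], sample_ms: list[float],
                    max_z: float = 2.0) -> bool:
    """True if the sample's average typing rhythm is within max_z standard
    deviations of the enrolled profile."""
    enrolled_mean, enrolled_sd = enrolled
    if enrolled_sd == 0:
        raise ValueError("enrolled profile has no variation")
    sample_mean, _ = typing_profile(sample_ms)
    return abs(sample_mean - enrolled_mean) / enrolled_sd <= max_z


if __name__ == "__main__":
    profile = typing_profile([0, 120, 250, 360, 490, 600])     # mean 120 ms, sd 10 ms
    print(matches_profile(profile, [0, 125, 245, 370, 490]))  # True: similar rhythm
    print(matches_profile(profile, [0, 40, 80, 120, 160]))    # False: far faster, machine-like
```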

Another aspect of AI vs AI is the emergence of real-time deepfake detection embedded in cameras or conferencing tools – imagine a future Zoom update that can alert all participants, “Possible synthetic video detected for User X.” Companies like Microsoft, Adobe, and startups are working on digital provenance (watermarking authentic media and detecting fake ones).

Within a decade, it’s plausible that all genuine video streams will carry cryptographic authenticity signatures, making deepfakes easier to spot if they lack one. But then, of course, fraudsters would try to counterfeit those signatures, for example by stealing signing keys.
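The signing idea can be sketched with Python's standard library. This version uses an HMAC over each frame's bytes as a stand-in for the public-key signatures that provenance standards such as C2PA actually rely on. The device key and frame bytes are hypothetical. The sketch also shows a design limitation. With HMAC, anyone who can verify a tag also holds the key and could forge one. That is why real schemes use asymmetric keys, and why stolen signing keys are the obvious attack.

```python
import hashlib
import hmac


def sign_frame(device_key: bytes, frame: bytes) -> str:
    """Return a hex HMAC-SHA256 tag binding the frame bytes to the capture device's key."""
    return hmac.new(device_key, frame, hashlib.sha256).hexdigest()


def verify_frame(device_key: bytes, frame: bytes, tag: str) -> bool:
    """Check the tag in constant time; any edit to the frame invalidates it."""
    return hmac.compare_digest(sign_frame(device_key, frame), tag)


if __name__ == "__main__":
    key = b"hypothetical-camera-key"
    frame = b"\x00\x01raw-frame-bytes"
    tag = sign_frame(key, frame)
    print(verify_frame(key, frame, tag))            # True: untouched frame
    print(verify_frame(key, frame + b"\xff", tag))  # False: altered frame
    print(verify_frame(key, frame, ""))             # False: unsigned stream
```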

This cat-and-mouse dynamic will define the next era of hiring security. HR departments might even have their own AI “bot” sitting in on interviews, quietly analyzing everything in the background – an AI auditor ensuring the candidate is human and truthful.

New Fraud Frontiers: Holograms, AR/VR and Beyond:

By the late 2020s, the way we meet and interview might itself change, with technologies like augmented reality (AR), virtual reality (VR), and holographic telepresence becoming part of business communication. These open new avenues for impersonation. Imagine “holo-interviews” where a life-size hologram of a candidate appears in a conference room – could that hologram be manipulated by AI? It’s not far-fetched. Already, companies like Meta are exploring virtual meeting spaces. In a VR interview, one might appear as an avatar.

What if a fraudster uses an AI system to drive that avatar’s facial expressions and answers? It could be the deepfake of the future: an interactive 3D avatar controlled by an AI or a remote proxy. Holographic calls (which some tech giants are piloting for high-end conferencing) could similarly be hijacked with deepfake overlays.

So recruiters in 2030 may have to worry about whether the hologram sitting across the table is real. AR glasses could be used by candidates to display info invisibly in their view – more advanced than today’s second monitor.

As consumer AR tech (like smart contact lenses or lightweight glasses) matures, candidates could literally have a live transcript and AI suggestions projected in front of their eyes during an in-person interview, making cheating possible even face-to-face.

We might see the rise of “AR interview cheating” where nothing visible tips off the interviewer, because all the assistance is in a private heads-up display. Countering that might require devices that detect other electronics on a person (some kind of scanner for signals, which veers into very sci-fi/creepy territory). Or simply a policy of device-free physical interviews (no glasses, no wearables allowed in the room).

Brain-computer interfaces are another far horizon – if candidates could someday use implants or neural links to query AI silently, then we are really in a new game of cat-and-mouse. These scenarios may sound like science fiction, but so did deepfake video not long ago.

The bottom line: as the formats of interaction evolve, fraud will evolve with them, and companies will need to anticipate that.

Stricter Regulations and Laws:

We expect the legal landscape to catch up significantly by 2030. Governments will likely enact laws specifically addressing AI impersonation and hiring fraud. For example, we may see statutes making it explicitly illegal to use AI deepfakes to gain employment under false pretenses (essentially a form of criminal fraud).

Some jurisdictions could impose penalties on service providers (the proxy hire “agencies” or creators of malicious deepfakes). On the flip side, regulations around AI in hiring might also expand to protect candidates – ensuring that any AI-based assessments or verifications are fair and transparent. Already, places like New York City have laws about bias audits for AI hiring tools.

By 2025, all 50 U.S. states had at least considered legislation on AI and deepfakes, mostly around election interference and explicit content, but the scope is widening. It’s plausible that by the late 2020s, background check laws could mandate identity verification steps for remote hires in certain sectors, similar to how financial KYC (Know Your Customer) rules work.

Europe’s AI Act entered into force in 2024 as one of the first comprehensive AI regulations. It already classifies AI systems used in recruitment as high-risk and imposes transparency obligations on deepfakes, and future guidance might extend specific controls to hiring-related deepfakes. Industry standards may also emerge. For instance, the banking industry could establish protocols for vetting hires that include specific anti-deepfake measures. The American Bankers Association and FBI already released an infographic in 2025 to raise awareness of deepfake scams.

Companies that do business with the U.S. Department of Defense or other sensitive government branches might be required by contract to certify the integrity of their hiring process. Sooner or later, a major breach or scandal will be traced to a fake hire: a fake employee in an intelligence agency, say, or a huge fraud at a Fortune 500 company. When that happens, there will surely be a regulatory response.

We might also see more litigation on the corporate side: companies suing individuals who defrauded them via fake identities, making examples of them. Conversely, there’s a slim chance of candidates suing companies if wrongly flagged as deepfakes (e.g., if a poor detection tool costs someone a job unfairly) – which will drive the need for accuracy and care in deploying these systems.

Ethical AI and Verification Ecosystems:

In the next decade, an ecosystem of verification might flourish. One vision is digital identities or “career passports” that are cryptographically secured – think along the lines of blockchain-backed credentials for education, work history, and personal identity. Instead of a static resume, a candidate might present a digitally signed record of their diploma from a university, references from past employers, etc., which are hard to forge.

Startups are working on decentralized identity solutions that could make it easier to trust someone’s claims without invasive checks, because each credential is verified at the source. Governments, too, could play a role by offering robust digital ID systems (some countries already have national IDs with biometric checks that could be adapted for employment verification).

In an ideal scenario, by 2030 a recruiter could instantly verify that “Alice” is really Alice, she earned the degree she claims, and her professional certifications are legit – all through an encrypted digital wallet of credentials.

This would make impersonation extremely difficult, unless the impostor somehow also stole all those credentials (which is possible, but another layer of complexity for them). Of course, privacy concerns come hand in hand – not everyone will want such a system if it feels like constant surveillance of one’s credentials.

Balance will be needed, perhaps by giving candidates control over what they share while keeping whatever they do share verifiable. On the AI side, companies will likely implement continuous post-hire verification for critical roles. That might involve periodic checks or monitoring (with consent) to ensure the person in the role hasn’t been swapped for someone else.

For instance, some security-critical workplaces might periodically require a biometric login (face scan) to ensure the employee logging into systems is the same person who was vetted. We already have this concept for account security (2FA, etc.), so extending it to personnel security is conceivable.

Cultural and Organizational Changes:

Beyond tech and law, the very culture of hiring might shift. We might see a pendulum swing back to more in-person interaction as a norm for high-stakes hires – even if remote work stays popular, companies may mandate a final in-person meeting or onboarding week to physically verify and build trust.

There could also be a greater emphasis on probationary periods and monitoring performance early on as a safety net – essentially, verifying through work outputs. In fields like software engineering, some companies might move toward project-based evaluation (paid trial projects) to confirm ability, since even an interview can be gamed but real work is harder to fake over time.

HR might also adopt a principle from cybersecurity: “assume breach.” Applied to hiring, it means assuming a fake might slip through and having safeguards ready, such as limiting new employees’ access privileges at first, or conducting thorough code reviews and audits of a new hire’s work until trust is established. These practices could contain the damage if an impostor does get in.
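To make “assume breach” concrete, here is a minimal Python sketch of tenure-based access tiers, where broader privileges unlock only after both time on the job and a successful identity re-verification. The tier names, day thresholds, and the `identity_reverified` flag are hypothetical illustrations, not a standard; in practice these entitlements would live in your identity and access management system.

```python
from datetime import date

# Hypothetical tiers: (minimum days since hire, permissions granted at that point).
ACCESS_TIERS = [
    (0,  {"email", "wiki", "training"}),
    (30, {"email", "wiki", "training", "code_read"}),
    (90, {"email", "wiki", "training", "code_read", "code_write", "prod_read"}),
]

def allowed_access(hire_date: date, today: date, identity_reverified: bool) -> set:
    """Return the permissions a new hire should hold today under the illustrative tiers."""
    days = (today - hire_date).days
    granted = set(ACCESS_TIERS[0][1])  # everyone starts with the baseline tier
    for min_days, perms in ACCESS_TIERS:
        # Higher tiers require both tenure and a passed identity re-check.
        if days >= min_days and (min_days == 0 or identity_reverified):
            granted = set(perms)
    return granted
```

For example, `allowed_access(date(2026, 1, 1), date(2026, 2, 15), True)` grants read access to code but not write access, while an unverified employee stays on the baseline tier regardless of tenure.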

On the flip side, the prevalence of AI assistance might also spark debate: should minor AI use truly be considered “cheating” or just using available tools? By 2030, perhaps using AI for some interview answers might be normalized (just as calculators are allowed in some exams).

Companies could then shift evaluation towards assessing the candidate’s ability to effectively use AI rather than outright banning it. We already hear arguments that if a candidate can do a job with AI tools, maybe that’s fine – as one proxy service founder rationalized, “If they can use AI to crush an interview, they can continue using AI to become a top performer in their job”.

The counterargument is that interviews are about evaluating unassisted capability and honesty. This philosophical debate will shape policies too. We might even see some companies explicitly permit AI assistance and adjust their questions to focus on creativity, strategy, or personal experience that AI can’t easily supply. Meanwhile, others will double down on ensuring a person can operate without AI crutches.

In summary, the next decade will likely see more complex impersonation technology (holographic deepfakes, AI avatars) but also more robust ecosystems of trust and verification. It’s an arms race, but also a time for rethinking how we assess talent in an AI-infused world.

Ultimately, organizations that adapt by blending technological defenses with smart hiring design will be best positioned to maintain interview integrity in the face of whatever 2030 brings.

Best Practices for Companies: Protecting the Hiring Process

Given the high stakes, what concrete steps can organizations take today to combat AI-driven interview fraud and ensure interview integrity?

Below is a set of best practices – actionable measures for HR, Talent Acquisition (TA), and compliance leaders to implement:

1. Strengthen Identity Verification at Every Stage

Pre-interview ID checks: Verify candidate identity early. Require candidates to submit a photo ID and a real-time selfie video through a secure verification service before interviews. Use liveness detection (ask them to perform a random action on camera) to ensure the person is real and matches the ID. This deters many fraudsters from even attempting a deepfake.
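A random on-camera action works because a pre-recorded or pre-rendered clip cannot anticipate it. Below is a minimal Python sketch of issuing such a challenge, assuming a hypothetical action catalog and a 60-second response window. Judging whether the submitted video actually shows the actions and matches the ID is left to the verification service; only challenge generation and the timing check are shown.

```python
import secrets
import time
from dataclasses import dataclass

# Hypothetical catalog of prompts; a real verification vendor defines its own.
ACTIONS = ["turn your head left", "turn your head right", "blink twice",
           "raise your right hand", "touch your left ear", "look up"]

@dataclass
class LivenessChallenge:
    nonce: str          # random ID tying the submitted video to this challenge
    actions: list       # randomly chosen actions the candidate must perform
    digits: str         # random digits to read aloud, which a pre-recorded clip cannot contain
    expires_at: float   # Unix time after which a response is rejected

def issue_challenge(n_actions: int = 2, ttl_seconds: int = 60) -> LivenessChallenge:
    rng = secrets.SystemRandom()              # OS-backed randomness, hard to predict
    actions = rng.sample(ACTIONS, n_actions)  # distinct actions in an unpredictable order
    digits = "".join(str(rng.randrange(10)) for _ in range(4))
    return LivenessChallenge(secrets.token_hex(8), actions, digits, time.time() + ttl_seconds)

def response_is_timely(challenge: LivenessChallenge, submitted_at: float) -> bool:
    # A short window leaves little time to prepare a synthetic response.
    return submitted_at <= challenge.expires_at
```

Because the actions and digits are drawn fresh for every session, a fraudster cannot prepare footage in advance; they would have to fake the response live within the window.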

Controlled interview links: Send unique, non-shareable video interview links tied to the candidate’s identity (some platforms ensure the link can only be used once or requires logging in). This makes it harder for a proxy to join without detection.
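One common way to make a link single-use and tied to one person is a signed, expiring token that the platform checks against the logged-in account. The sketch below illustrates that idea with HMAC from Python's standard library; it is not any particular vendor's scheme, and the key handling, the in-memory nonce store, and the `|`-delimited format are simplifying assumptions.

```python
import hashlib
import hmac
import secrets
import time

SERVER_KEY = secrets.token_bytes(32)  # assumption: a secret held only by the scheduling backend
_used_nonces: set = set()             # assumption: production systems would use a shared database

def _sign(payload: str) -> str:
    return hmac.new(SERVER_KEY, payload.encode(), hashlib.sha256).hexdigest()

def make_link_token(candidate_id: str, interview_id: str, ttl_seconds: int = 3600) -> str:
    """Bind one candidate to one interview; IDs are assumed not to contain '|'."""
    expires = str(int(time.time()) + ttl_seconds)
    payload = f"{candidate_id}|{interview_id}|{expires}|{secrets.token_hex(8)}"
    return f"{payload}|{_sign(payload)}"

def redeem_link_token(token: str, logged_in_candidate: str, interview_id: str) -> bool:
    parts = token.split("|")
    if len(parts) != 5:
        return False                              # malformed token
    candidate_id, iid, expires, nonce, sig = parts
    if not hmac.compare_digest(sig, _sign("|".join(parts[:4]))):
        return False                              # token was altered or forged
    if candidate_id != logged_in_candidate or iid != interview_id:
        return False                              # a different account is using this link
    if not expires.isdigit() or int(expires) < time.time() or nonce in _used_nonces:
        return False                              # expired or already used once
    _used_nonces.add(nonce)
    return True
```

With this design, a forwarded link fails for any other logged-in account, and even the right candidate cannot reuse it once redeemed.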

Surprise verifications: During a video call, consider spontaneously asking the candidate to hold up their ID or answer a verification question (such as the last four digits of their ID number, or details from their resume) to catch proxies off guard.

Onsite/third-party verification for remote hires: For fully remote onboarding, use a third party (e.g., a notary or a designated office) where the new hire must appear in person with their documents as a condition of employment. Alternatively, hold a brief video call with HR on their first day purely to re-verify identity.

Biometric sign-ins: For highly sensitive roles, use biometric authentication at login (fingerprint or face recognition on their company-issued device) so that if someone other than the vetted person tries to log in, it’s flagged.

2. Upgrade Interview Techniques and Formats

Use structured, in-depth interviews: Ask questions that AI or a proxy would struggle with. For instance, behavioral questions that require personal anecdotes (“Tell me about a time you failed and what you learned”) can reveal inconsistencies if someone is making it up or if it’s a proxy who doesn’t actually have the candidate’s life experience. AI also tends to give generic answers here, which are easier to spot if you’re looking.

Follow-up questions are your friend: Train interviewers to follow every answer with deeper probing. A proxy or AI might handle surface-level questions, but real-time follow-ups (“Why did you choose that approach?”, “Can you explain that concept in simpler terms?”) force the candidate off script, and many fraudsters falter once the interview becomes dynamic.

Pair interviews (two interviewers): Having multiple interviewers in the session can help – one can focus on asking questions while another observes the candidate’s body language and environment. It also makes it harder for a candidate to concentrate on cheating with more eyes on them. Collaborative panel interviews (with technical staff present) might catch technical bluffing more easily as well.

Leverage practical tests: Whenever feasible, incorporate live practical assessments, for example a coding exercise in a shared online editor or a writing test on camera, and confirm that the candidate is doing the work themselves. It is hard to fake performing a task in real time without the underlying skill. If you are concerned about covert help, consider proctoring software, or record the session so that suspicious pauses or external noises can be reviewed.

Environment scan: At the start of remote interviews, politely ask the candidate to do a quick 360° pan with their webcam or phone. Say it’s to check for any technical issues or just to have a friendly icebreaker (there’s an element of trust-building too). This can reveal if they have notes plastered everywhere or someone else in the room. It’s not foolproof, but it sets a tone that you take the process seriously.

No-device, no-AI declarations: For in-person interviews, consider having candidates put away smartwatches and phones (as some exams require). For remote interviews you can’t enforce what’s off-screen, but you can request, “Please silence other devices to minimize distractions.” Some firms even ask candidates to confirm verbally at the start of the interview that they are not using any unapproved assistance tools. This puts them on notice ethically, and an explicit lie adds weight to any later action if they’re caught.

Record and review: With consent, record important interviews. If something feels off, review the footage. You might catch subtle cues (e.g., lip movements not matching voice). Also, recordings can be run through deepfake detection tools after the fact if suspicions arise. This helps in making a case to rescind an offer if needed, and it’s a treasure trove for training your recruiting team on what to look for (just ensure you handle recordings per privacy guidelines).

3. Deploy Technology Wisely

AI-assisted fraud detection software: Invest in tools that integrate with your video interview platform to monitor for fraud signals. These tools can provide real-time alerts or post-interview analysis. Even if you get a “risk score” after the interview, it can prompt a follow-up verification before a final offer.
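How a post-interview risk score can gate the final offer is easiest to see in a small example. The signal names, weights, and threshold below are hypothetical and purely illustrative; commercial tools calibrate their own models and rarely expose them. The sketch only shows the pattern of combining per-signal confidences and triggering a follow-up verification above a cut-off.

```python
# Illustrative weights only (hypothetical); real tools calibrate their own models.
WEIGHTS = {
    "lip_sync_mismatch": 0.35,          # audio and mouth movement drift apart
    "face_boundary_artifacts": 0.25,    # flicker or blending at the edges of the face
    "id_photo_mismatch": 0.25,          # face differs from the verified ID photo
    "frequent_window_switching": 0.15,  # candidate repeatedly leaves the interview window
}
FOLLOW_UP_THRESHOLD = 0.4  # hypothetical cut-off for extra verification before an offer

def risk_score(signals: dict) -> float:
    """Weighted sum of per-signal confidences, each clipped to [0, 1]; unknown names are ignored."""
    total = sum(WEIGHTS[name] * min(max(value, 0.0), 1.0)
                for name, value in signals.items() if name in WEIGHTS)
    return round(total, 3)

def next_step(signals: dict) -> str:
    if risk_score(signals) >= FOLLOW_UP_THRESHOLD:
        return "follow-up verification before final offer"
    return "proceed"
```

A high score here does not reject anyone; it only routes the candidate to a live re-verification, which keeps a human in the loop and limits the cost of false positives.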

Screen monitoring: Use interview platforms that allow you to see if a candidate clicks away from the interview window (some proctoring software can do this during online tests). If a candidate’s screen shows unusual activity (like rapidly switching windows or copy-pasting text), those platforms can flag it. For high-stakes technical interviews, consider remote desktops or coding environments that lock down browsing.
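Browser-based platforms can observe events such as the interview tab losing focus or text being pasted. Assuming the platform exports a log of such events (the `FocusEvent` format here is hypothetical), a simple sliding-window rule for flagging a session for human review might look like this:

```python
from dataclasses import dataclass

@dataclass
class FocusEvent:
    t: float    # seconds since the session started
    kind: str   # "blur" (interview window lost focus) or "paste" (text pasted into an answer)

def flag_session(events, window_s: float = 60.0, max_blurs: int = 3) -> list:
    """Return human-readable reasons to review a session; an empty list means nothing stood out."""
    reasons = []
    blurs = sorted(e.t for e in events if e.kind == "blur")
    start = 0
    for end, t in enumerate(blurs):
        # Slide the window's left edge so it spans at most window_s seconds.
        while t - blurs[start] > window_s:
            start += 1
        count = end - start + 1
        if count > max_blurs:
            reasons.append(f"{count} focus losses within {window_s:.0f}s, ending at t={t:.0f}s")
            break  # one burst is enough to warrant review
    pastes = [e.t for e in events if e.kind == "paste"]
    if pastes:
        reasons.append(f"{len(pastes)} paste event(s), first at t={min(pastes):.0f}s")
    return reasons
```

The thresholds are deliberately loose: an occasional glance at another window is normal, so only bursts of switching or pasted text are surfaced for a reviewer to judge.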

Device fingerprint and IP checks: Behind the scenes, track some metadata. If the same device or IP address is being used by multiple different “candidates”, you might have a proxy ring applying. Or if a candidate consistently claims U.S. residency but their IP resolves to another country during interviews, that’s a red flag (allow for VPNs, but you can still question it). Some applicant tracking systems (ATS) now incorporate fraud signals to spot patterns across applicants.
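A minimal sketch of the two cross-applicant checks described above, assuming your ATS or interview platform already supplies a device fingerprint and an IP-geolocated country for each session (the `Session` fields are hypothetical). Both outputs are leads for a recruiter to question, not proof of fraud, since shared family computers and VPNs produce innocent matches.

```python
from collections import defaultdict
from dataclasses import dataclass

@dataclass
class Session:
    candidate_id: str
    device_fingerprint: str   # hash of browser/device attributes supplied by the platform
    ip_country: str           # country code from an IP-geolocation lookup (assumed available)
    claimed_country: str      # country of residence stated on the application

def shared_devices(sessions, min_candidates: int = 2) -> dict:
    """Map each device fingerprint to the distinct candidates seen on it, keeping only shared ones."""
    by_device = defaultdict(set)
    for s in sessions:
        by_device[s.device_fingerprint].add(s.candidate_id)
    return {fp: ids for fp, ids in by_device.items() if len(ids) >= min_candidates}

def location_mismatches(sessions) -> list:
    """Candidates whose interview IP geolocates outside their claimed country of residence."""
    return sorted({s.candidate_id for s in sessions if s.ip_country != s.claimed_country})
```

Running these across all applicants, rather than one candidate at a time, is what exposes a proxy ring: one device appearing under several different names.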

Digital credential verification: Whenever possible, verify credentials directly. Use services that can pull transcripts from universities or certification bodies to ensure that the documents candidates provide are legit and have not been tampered with by AI. Blockchain-based credential verification, if available, can make this easier. For example, some universities issue digital diplomas you can verify on their site – take advantage of that rather than accepting a PDF that could be forged.
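Where an issuer lets you confirm a document directly, one simple tamper check is to compare a cryptographic hash of the candidate's file with a digest obtained from the issuer. This is a sketch assuming the issuer publishes a SHA-256 digest (many verification portals use digital signatures or lookup codes instead). The digest must come from the issuer, never from the candidate.

```python
import hashlib
import hmac

def document_matches_issuer(document_bytes: bytes, issuer_digest_hex: str) -> bool:
    """True only if the candidate's file is byte-for-byte what the issuer recorded."""
    actual = hashlib.sha256(document_bytes).hexdigest()
    # compare_digest avoids leaking how many leading characters matched.
    return hmac.compare_digest(actual, issuer_digest_hex.strip().lower())
```

For a file on disk, pass `Path("diploma.pdf").read_bytes()` (with `Path` from `pathlib`). Any change to the file, even an innocent re-save, breaks the match, so a mismatch is a reason to contact the issuer, not an automatic rejection.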

4. Policy, Communication, and Deterrence

Clear communication and deterrence: Let candidates know upfront that your company has strict anti-fraud measures. Include a note in the interview instructions that any form of misrepresentation or use of unauthorized aid in the interview will result in disqualification and possibly being blacklisted from future opportunities. Sometimes just knowing that the company is watching for fraud will discourage attempts. (However, don’t reveal exactly what detection methods you use – keep fraudsters guessing).

Employment agreements: Consider adding a clause in offer letters or contracts affirming that the individual who interviewed is indeed the person who will be employed, and that all statements in the hiring process were truthful. While it might not stop a determined fraudster, it adds legal weight – if later found out, that’s a breach of contract or grounds for immediate termination for cause.

Internal policies and training: Update your hiring policies to codify these new practices (ID checks, etc.) so they’re applied consistently. Train every recruiter and hiring manager on these policies. Share known fraud scenarios in internal newsletters or meetings to keep awareness high (e.g., “Tip of the week: Watch for candidates wearing high-quality headphones covering their ears – could they be hiding earbuds feeding answers?”). Develop a fraud escalation path: if an interviewer suspects something, they should know how to discreetly alert HR or a hiring security specialist to investigate further before making a hiring decision.

Compliance check: Ensure all these measures comply with relevant laws. Involve your legal/privacy team to review things like recording interviews or scanning IDs (some jurisdictions have specific requirements for storing ID info, etc.). When done correctly, compliance and fraud prevention go hand in hand – for example, verifying identity also helps fulfill requirements to prevent hiring individuals who might be on sanctions or watch lists.

5. Post-Hire Monitoring and Response

Probation and performance monitoring: Implement a robust probation period for new hires where their work outputs and engagement are closely observed. If someone breezed through the interview but is completely lost on the job, act quickly. It’s kinder to all parties to address a fraudulent hire early. Some companies do a mini “re-interview” or skills assessment during onboarding as a second filter. In sensitive roles, you might schedule a surprise technical review or ask them to present on a project in their first weeks to validate their expertise.