
Discover how candidates cheat in remote interviews, from live AI assistance to proxies, and learn practical ways to detect the warning signs.

Abhishek Kaushik

Jan 13, 2026

Remote interviews are convenient, but they come with challenges. When the interviewer and candidate aren’t in the same room, it’s easier for candidates to get help or take shortcuts unnoticed.

Cheating isn’t just about dishonesty. It can lead to bad hires, wasted time, and high costs. One mis-hire can cost a company thousands of dollars in recruitment, onboarding, and lost productivity. The U.S. Department of Labor estimates that a bad hire can cost your business 30 percent of the employee’s first-year earnings.
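To put the 30 percent figure in concrete terms, here is a minimal Python sketch of the arithmetic. The function name and the $120,000 salary are hypothetical illustration values, not data from the estimate itself.

```python
def bad_hire_cost(first_year_salary: float, cost_ratio: float = 0.30) -> float:
    """Estimated cost of a bad hire as a share of first-year earnings.

    cost_ratio defaults to the 30 percent figure quoted above.
    """
    if first_year_salary < 0:
        raise ValueError("first_year_salary must be non-negative")
    return first_year_salary * cost_ratio


# Hypothetical example: a hire earning $120,000 in their first year.
print(f"${bad_hire_cost(120_000):,.0f}")  # prints "$36,000"
```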

The problem is getting bigger. AI assistants, proxy interviewers, and screen-sharing tricks make it harder to tell what a candidate can really do. Relying only on speed, polished answers, or correct output is no longer enough.

To run fair and effective interviews, hiring teams need to understand the types of cheating and what signs to look for. Knowing this helps ensure candidates are evaluated on their real skills, not on shortcuts.

Types of Cheating in Remote Interviews

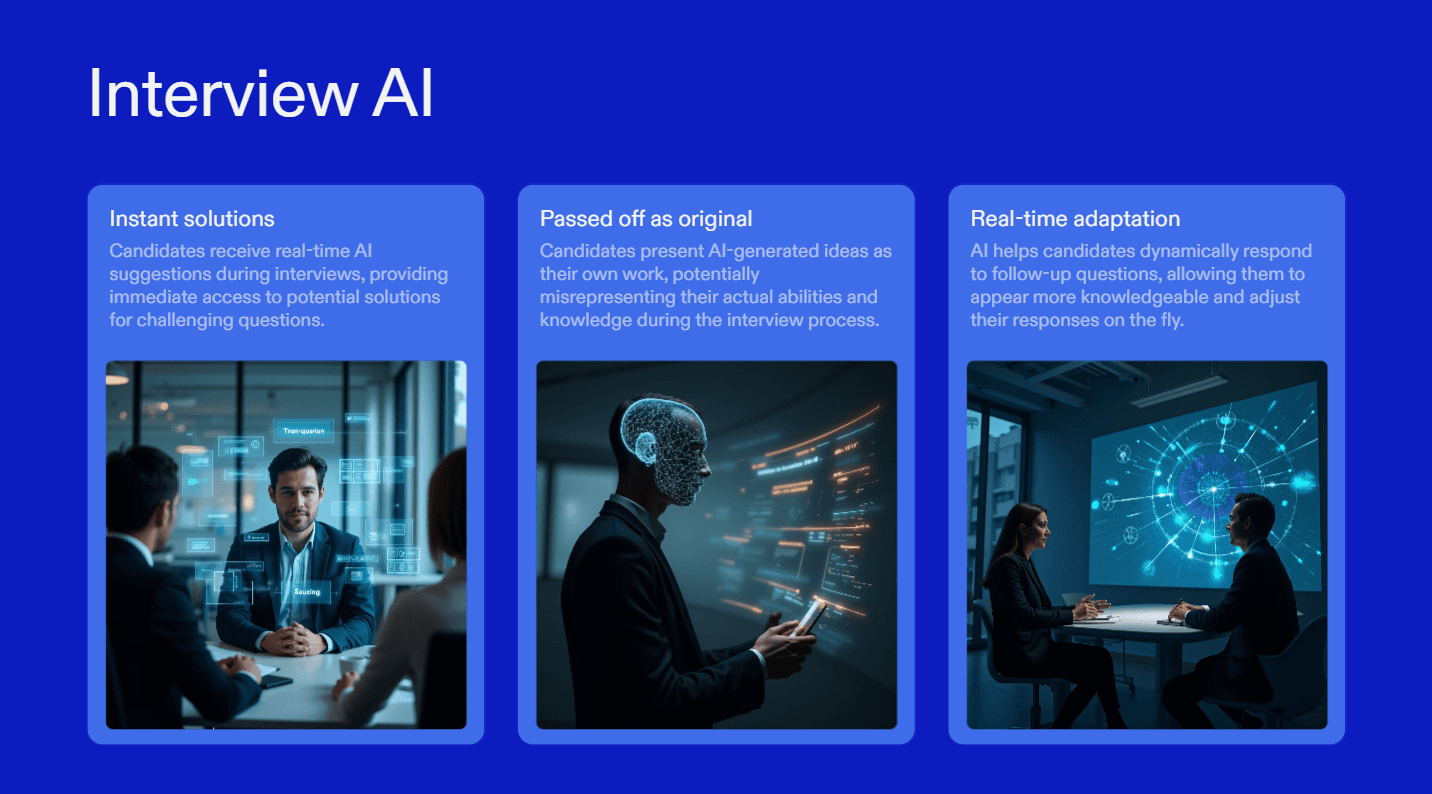

1. Live AI Assistance

AI-powered tools like Interview Coder or Cluely are designed to provide candidates with real-time help during interviews. They can generate solutions, suggest code snippets, or guide a candidate through problem-solving step by step. From the interviewer’s perspective, the candidate may appear confident, competent, and fast, even if they aren’t thinking through the problems themselves.

How it works in practice:

The candidate reads a question and the AI suggests a solution almost instantly.

The candidate copies or adapts the suggestion, presenting it as their own reasoning.

When follow-up questions or changes are introduced, the AI generates updates in real time, helping the candidate appear adaptable.

Why this is risky for hiring teams:

False assessment of ability – The candidate’s output looks correct, but it doesn’t reflect their problem-solving skills or decision-making process.

Missed red flags – Interviewers can’t see hesitation, trade-off thinking, or incremental reasoning that usually reveals true capability.

Unfair advantage – Candidates who rely on AI appear smoother and faster than those solving problems independently, potentially disadvantaging honest candidates.

Behavioral and interaction signals that indicate AI assistance:

Sudden bursts of flawless output after long pauses.

Explanations that sound generic, rehearsed, or inconsistent with the code.

Inability to step through or debug code they just “wrote.”

Strange timing patterns: unnaturally steady typing or code submission that doesn’t match natural thinking speed.
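Interviewers usually judge these timing signals by eye, but the first one (a burst of output after a long pause) is simple enough to sketch in code. The example below is a minimal Python illustration that assumes a hypothetical editor log of `EditEvent` records (a timestamp plus the number of characters added); the thresholds are placeholders, not calibrated values.

```python
from dataclasses import dataclass


@dataclass
class EditEvent:
    """One editor change (hypothetical log format)."""
    t: float          # seconds since the task started
    chars_added: int  # characters inserted by this change


def find_pause_then_burst(events, pause_s=30.0, window_s=5.0, burst_chars=200):
    """Return start times of output bursts that follow a long silence.

    A burst is >= burst_chars characters added within window_s seconds,
    starting at an event that came >= pause_s seconds after the previous one.
    All thresholds are illustrative placeholders.
    """
    events = sorted(events, key=lambda e: e.t)
    flagged = []
    for i in range(1, len(events)):
        if events[i].t - events[i - 1].t < pause_s:
            continue  # no long pause before this event
        start = events[i].t
        total = 0
        for e in events[i:]:
            if e.t - start > window_s:
                break  # past the burst window
            total += e.chars_added
        if total >= burst_chars:
            flagged.append(start)
    return flagged


# Hypothetical session: small edits, a 43-second silence, then 330 chars in 2 s.
session = [EditEvent(0, 10), EditEvent(2, 12), EditEvent(45, 180),
           EditEvent(47, 150), EditEvent(60, 5)]
print(find_pause_then_burst(session))  # prints [45]
```

A flag only marks a moment worth probing. Honest candidates also pause to think and then type quickly, so the natural follow-up is to ask them to walk through what they just wrote.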

Live AI assistance doesn’t just supply answers; it changes the signals interviewers rely on to judge skill. Without detection, interviews no longer reflect true ability, increasing the risk of mis-hires and wasted time.

👉 Explore How to Detect and Prevent Cluely AI in Interviews

2. Proxy Candidates

A proxy interview happens when someone other than the applicant completes the interview or assessment. This can occur in live video calls, on coding platforms, or in take-home assignments. Unlike AI tools, it involves a real person solving problems on the candidate’s behalf.

Common methods:

Video proxy: Another person appears on camera or speaks on the candidate’s behalf.

Collaborative remote control: The proxy connects to the candidate’s device via screen-sharing tools or remote desktop software to complete tasks in real time.

Shared accounts: The proxy submits take-home assignments or coding exercises under the candidate’s name.

What tends to give it away:

Skill mismatch: The candidate may struggle with follow-up questions or explanations if the proxy performed most of the work.

Behavioral inconsistencies: Sudden changes in typing speed, coding style, or verbal explanations can signal someone else is contributing.

Confidence gaps: When asked to explain reasoning, the candidate may hesitate, provide vague answers, or contradict earlier statements.

Behavioral and interaction signals to watch for:

Sudden spikes in performance compared to past assessments or practice sessions.

Inconsistent coding style or formatting across tasks.

Difficulty answering basic questions about code logic or design decisions.

Hesitation or confusion when asked to modify code live or solve related problems.

Proxy candidates can make a candidate appear capable on paper while hiding their actual skill level. Detecting this requires observing reasoning, explanation, and interaction patterns, rather than relying solely on output. Without such checks, interviews risk mis-hiring individuals who cannot perform independently on the job.

👉 Read more: Proxy Interviews Explained - How They Work & Why They’re Rising

3. Screen Sharing or Collaboration Misuse

Some candidates get external help during remote interviews by sharing their screen or collaborating with others. This differs from AI tools and full proxies: it involves real-time human assistance that can stay hidden in plain sight.

How it happens:

Screen-sharing apps: Candidates share their screen with a friend or mentor who provides guidance during the interview.

Chat or messaging tools: Candidates receive step-by-step instructions through chat, Slack, or other messaging platforms.

Collaborative coding platforms: Multiple people can contribute to a solution in real time while the candidate appears to be working alone.

Why this is risky:

False impression of competence: The candidate may appear to solve problems efficiently, even if they are not doing the thinking themselves.

Hard to detect: Unlike proxies or AI assistance, this method leaves fewer obvious traces, so detection depends on subtle behavioral inconsistencies.

Misaligned evaluation: Interviewers may overestimate problem-solving skills, decision-making ability, or creativity.

Behavioral and interaction signals to watch for:

Typing patterns that don’t match natural pauses for thinking.

Inconsistent explanations that contradict the work being submitted.

Sudden changes in performance when follow-up questions or live debugging tasks are introduced.

Hesitation or confusion when asked to explain reasoning for a small change in the solution.

Screen-sharing or collaboration misuse can undermine the integrity of an interview without leaving obvious signs. Detecting it requires focusing on how candidates think, explain, and interact, not just the quality or speed of the final solution. Teams that fail to spot this risk mis-hires and lose confidence in their remote interview process.

4. Plagiarism of Take-Home Assignments

Take-home assignments are meant to evaluate a candidate’s independent skills. However, some candidates copy solutions from online repositories, forums, or peers, presenting them as their own work. Unlike live cheating, plagiarism can be subtler, but it still misrepresents a candidate’s true ability.

Common ways it happens:

Copying from public code sources such as GitHub repositories or Stack Overflow answers.

Using peer solutions from classmates, colleagues, or forums.

Reusing past submissions from other companies or assessments.

Detection signals to watch for:

Inconsistent coding style: Formatting, variable names, or commenting style that doesn’t match the candidate’s other work.

Sudden skill spikes: Assignment solutions that are far beyond the candidate’s demonstrated abilities in interviews or past assessments.

Generic or overly polished code: Solutions that follow textbook patterns without showing reasoning or creativity.

Difficulty explaining code: The candidate struggles to justify design choices, algorithms, or logic in their own submission.
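The signals above rely on human review. A common automated complement is to compare a submission against known solutions using overlapping k-token "shingles", the general idea behind similarity checkers such as MOSS (which uses a more robust fingerprinting scheme called winnowing). The Python sketch below is a simplified stand-in, not a method from this article: the tokenizer, the keyword set, and the default `k=5` are all assumptions.

```python
import re

# Small keyword set for this sketch; a real checker would use a language-aware lexer.
KEYWORDS = {"def", "return", "for", "in", "if", "else", "while", "class", "import"}


def tokenize(code: str) -> list[str]:
    """Split code into tokens, replacing identifiers with 'ID' so renamed
    variables still match."""
    tokens = re.findall(r"[A-Za-z_]\w*|\d+|\S", code)
    return [
        "ID" if re.match(r"[A-Za-z_]", t) and t not in KEYWORDS else t
        for t in tokens
    ]


def shingles(tokens: list[str], k: int) -> set[tuple[str, ...]]:
    """All contiguous windows of k tokens."""
    return {tuple(tokens[i:i + k]) for i in range(len(tokens) - k + 1)}


def similarity(a: str, b: str, k: int = 5) -> float:
    """Jaccard similarity of k-token shingles: 0.0 disjoint, 1.0 identical."""
    sa, sb = shingles(tokenize(a), k), shingles(tokenize(b), k)
    union = sa | sb
    if not union:
        return 0.0  # both samples too short to compare
    return len(sa & sb) / len(union)


known = "def total(xs):\n    s = 0\n    for x in xs:\n        s += x\n    return s\n"
submitted = "def add_all(values):\n    acc = 0\n    for v in values:\n        acc += v\n    return acc\n"
print(similarity(known, submitted))  # prints 1.0
```

Here the two snippets differ only in names, so the identifier-normalized score is 1.0. A high score is not proof on its own: short, standard algorithms naturally converge on similar code, so it works best as a prompt to ask the candidate to explain their design choices.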

Impact on hiring teams:

Creates a false sense of competence, leading to mis-hires.

Reduces confidence in remote assessments and evaluation processes.

Wastes time and resources onboarding candidates who cannot perform independently.

Even when candidates submit correct solutions, ownership and reasoning matter more than correctness alone. Teams need to combine careful review, follow-up questioning, and behavioral signals to detect plagiarism effectively.

5. Deepfake and Identity Manipulation

Deepfake technology is increasingly being used to manipulate a candidate’s identity during remote interviews. This goes beyond traditional proxies by altering audio or video in real time to make it appear as though the real candidate is present.

How it happens:

Face-swapping tools overlay the candidate’s face onto the person actually sitting in front of the camera during live video interviews.

Voice-cloning software modifies speech to sound like the candidate while someone else speaks.

Pre-recorded or looped video feeds are combined with live audio to simulate presence.

Why this is risky:

Interviewers believe they are interacting with the actual candidate when they are not.

Traditional identity checks like camera-on requirements become ineffective.

Skills, communication style, and even personality can be completely misrepresented.

Behavioral and interaction signals to watch for:

Subtle lag between lip movement and audio responses.

Facial expressions that appear unnaturally smooth or repetitive.

Limited head movement or avoidance of sudden camera-angle changes.

Audio clarity that remains unnaturally consistent regardless of environment or movement.

Deepfake-based cheating undermines the basic trust of remote interviews. Without identity verification and behavioral analysis, teams risk hiring someone they never actually interviewed.

6. Prompt Engineering and Preloaded AI Workflows

Some candidates prepare elaborate AI workflows in advance, allowing them to feed interview questions into pre-built prompts that generate structured answers instantly. Unlike basic AI assistance, this method is highly optimized and difficult to detect at a surface level.

How it works:

Candidates create reusable prompts tailored for coding, system design, or behavioral questions.

During the interview, they paste the question and receive well-structured answers in seconds.

Outputs are refined to sound conversational and confident.

Why this is risky:

Answers appear thoughtful but lack original reasoning.

Candidates can handle common questions but fail when context shifts.

Interviewers mistake structured language for competence.

Behavioral and interaction signals to watch for:

Answers that follow identical structures across unrelated questions.

Overly polished explanations with little hesitation or self-correction.

Difficulty responding when asked to reason without typing or external tools.

Struggles with open-ended “what would you do next?” questions.

Preloaded AI workflows blur the line between preparation and real-time cheating. Without probing reasoning depth, interviews reward prompt quality instead of skill.

7. Audio-Only or Off-Camera Assistance

Some candidates keep cameras on but rely on off-camera helpers providing verbal cues or instructions through earphones or nearby speakers. This form of cheating is subtle and often mistaken for confidence or fluency.

How it happens:

A helper listens to the interview and whispers answers or guidance.

Candidates wear discreet earbuds or bone-conduction devices.

Audio cues guide problem-solving or explanation flow.

Why this is risky:

The candidate appears responsive and articulate.

Help is delivered in real time with minimal delay.

Visual monitoring alone fails to catch the behavior.

Behavioral and interaction signals to watch for:

Slight delays before responses, followed by unusually precise answers.

Eyes shifting or pausing as if listening before speaking.

Changes in tone or pacing mid-answer.

Inconsistent depth when asked to expand or rephrase an explanation.

Off-camera assistance creates the illusion of competence while hiding dependency. Detecting it requires attention to timing, responsiveness, and reasoning continuity.

8. Memorized or Rehearsed Solution Playback

Some candidates rely on memorized solutions sourced from leaked question banks, prior assessments, or shared interview experiences. While not technically live cheating, it still distorts evaluation.

How it happens:

Candidates memorize exact solutions to common interview questions.

They reproduce answers quickly without active problem-solving.

Explanations sound confident but lack flexibility.

Why this is risky:

Interviewers mistake recall speed for skill.

Candidates fail when the problem is slightly altered.

Real-world problem-solving ability is never tested.

Behavioral and interaction signals to watch for:

Immediate answers with no exploratory thinking.

Difficulty adapting when constraints or requirements change.

Repetition of textbook phrasing or well-known patterns.

Weak responses to “why” or “what if” follow-ups.

Memorized playback rewards exposure, not capability. Without adaptive questioning, interviews can’t distinguish recall from understanding.

Why Traditional Interview Safeguards No Longer Work

Many hiring teams still rely on basic safeguards like camera-on policies, screen monitoring, or strict interview rules. These methods were designed before real-time AI assistants and deepfakes became widely accessible, and they no longer match how cheating actually happens today.

Where traditional safeguards fall short:

Camera-on requirements fail against deepfakes, video proxies, and off-camera assistance.

Screen monitoring does not detect AI copilots running on secondary devices or external browsers.

Rule-based flags like eye movement, background noise, or tab switching are easy to bypass.

Interviewers cannot manually track timing, behavioral shifts, and reasoning consistency while conducting live interviews.

As cheating becomes more adaptive and less visible, surface-level controls create a false sense of security. The interview looks clean, but the signal is already compromised.

This gap between perceived fairness and actual integrity is where most mis-hires occur.

How Sherlock AI Detects What Humans and Rules Miss

Sherlock AI does not attempt to guess intent or accuse candidates. Instead, it establishes a baseline of natural behavior and continuously measures deviations as the interview unfolds.

Sherlock AI analyzes:

Micro-latency changes when questions evolve or constraints shift.

Reasoning-to-action alignment, detecting when explanations and execution drift apart.

Behavioral continuity across multiple tasks, not just a single response.

Timing patterns that reveal external dependency rather than independent thinking.
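The article doesn’t describe how Sherlock AI computes these measures, so the following is only a generic illustration of the baseline-and-deviation idea, not Sherlock AI’s implementation. It assumes per-question response latencies in seconds and scores each new latency as a z-score against the candidate’s own warm-up baseline; the function name and the 3.0 threshold are hypothetical.

```python
from statistics import mean, stdev


def latency_deviations(baseline_s, new_s, z_threshold=3.0):
    """Flag new response latencies that deviate sharply from a baseline.

    baseline_s: latencies (seconds) from the candidate's warm-up questions.
    new_s: latencies after questions change or constraints shift.
    Returns (index, z_score) pairs whose |z| meets z_threshold.
    """
    if len(baseline_s) < 2:
        raise ValueError("need at least two baseline samples")
    mu, sigma = mean(baseline_s), stdev(baseline_s)
    if sigma == 0:
        raise ValueError("baseline has no variation")
    flagged = []
    for i, x in enumerate(new_s):
        z = (x - mu) / sigma  # distance from the candidate's own norm
        if abs(z) >= z_threshold:
            flagged.append((i, round(z, 2)))
    return flagged


baseline = [8, 10, 12, 9, 11]  # hypothetical warm-up latencies (seconds)
after_change = [11, 25, 9]     # hypothetical latencies after constraints shifted
print(latency_deviations(baseline, after_change))  # prints [(1, 9.49)]
```

Measuring against each candidate’s own baseline, rather than a global cutoff, keeps naturally slow or fast thinkers from being flagged simply for their pace. A flagged index says where latency jumped, not why, which fits the article’s framing of signals as prompts for follow-up rather than accusations.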

Unlike static rules, Sherlock AI adapts to:

Different roles and difficulty levels.

Differences in candidate experience levels.

Interview formats including coding, system design, and problem-solving rounds.

This makes Sherlock AI resilient to new cheating methods, including tools that have not yet been widely documented.

For hiring teams, this means:

Early signals instead of post-interview suspicion.

Objective patterns instead of subjective gut feel.

Fewer false positives and more confident decisions.

Sherlock AI becomes the behavioral system of record for remote interviews.

Practical Next Steps for Interviewers

1. Design interviews around reasoning and adaptability

Interviews should test how candidates think, not how quickly they reach a correct answer. When interviews focus only on output, they are easy to game with AI tools, proxies, or external help.

What to change in practice:

Ask candidates to talk through their thinking before they write or submit anything.

Introduce mid-problem changes and see how they adapt instead of letting them finish a polished solution.

Ask “why” questions: why they chose a specific approach, trade-off, or assumption.

Use follow-ups that cannot be memorized, such as edge cases, constraints, or alternative paths.

What this reveals:

Genuine candidates can explain decisions, correct mistakes, and adjust their approach.

Candidates relying on AI or external help struggle when the path changes or when asked to justify choices.

When interviews reward reasoning and adaptability, shortcuts become obvious and real skill stands out.

2. Incorporate scenario-based or incremental tasks

Static questions with a single correct answer are easy to solve with external help. Scenario-based and incremental tasks make cheating much harder because they require continuous thinking, not just final output.

What to do differently:

Break problems into small steps instead of assigning one large task.

Change requirements midway and ask the candidate to adjust their approach.

Introduce real-world constraints like limited time, incomplete information, or conflicting goals.

Ask the candidate to explain what they would do next, not just what they already did.

Why this works:

AI tools and external helpers struggle with shifting context.

Proxy or assisted candidates often fall behind when the task evolves.

Genuine candidates stay engaged and can explain their decisions at each step.

What to watch for:

Delays when new constraints are introduced.

Confusion about previous decisions they supposedly made.

Answers that reset instead of building on earlier reasoning.

Incremental tasks expose whether the candidate is actively thinking or reacting to external input.

3. Use pattern-based monitoring tools to detect subtle signals

Most interview cheating today is not obvious. Candidates using live AI tools, collaborators, or proxies don’t break rules loudly. Instead, they leave behind behavioral patterns that are hard to spot manually, especially in long or fast-paced interviews.

This is where Sherlock AI becomes critical.

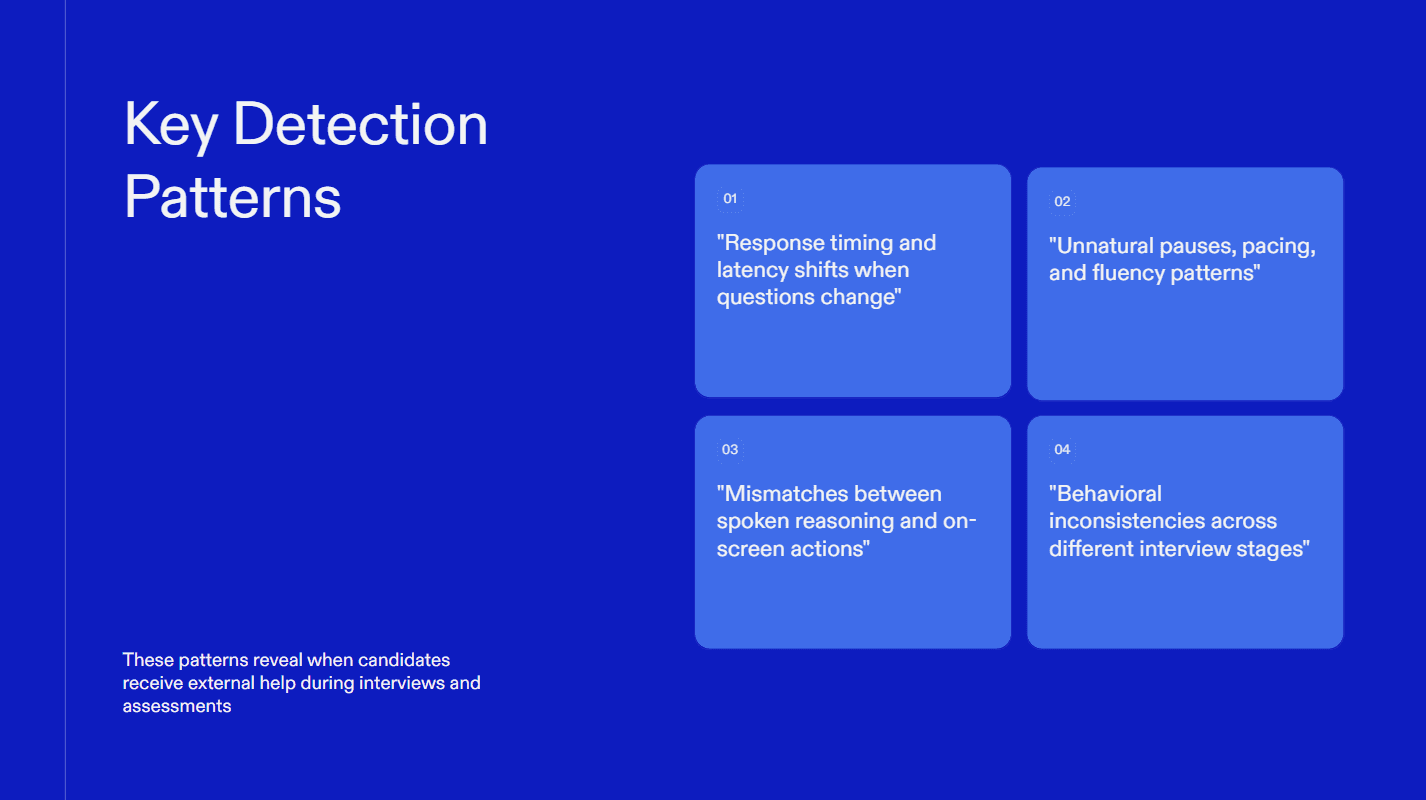

Sherlock AI is built specifically to detect pattern-level inconsistencies that indicate external assistance, without relying on fragile rules or surface checks. It analyzes:

Response timing and latency shifts when questions change unexpectedly.

Pauses, pacing, and fluency patterns that don’t align with natural problem-solving.

Mismatch between spoken reasoning and on-screen actions, such as confident explanations paired with delayed or inconsistent execution.

Behavioral continuity across interview stages, revealing sudden shifts that suggest assistance.

Why Sherlock AI works for hiring teams:

Interviewers can’t track micro-patterns while also running the interview.

Static rules like eye movement or background noise are easy to bypass.

Pattern-based analysis becomes stronger over time and across sessions.

How this changes interviews:

Interviewers get contextual signals, not accusations.

Teams can probe deeper at the right moment instead of guessing after the fact.

Decisions are based on how candidates behave and reason, not just how polished the final output looks.

As cheating methods evolve, detection has to move from rules to patterns. Sherlock AI gives hiring teams the visibility they need to preserve fairness, reduce mis-hires, and trust their interviews again.

Conclusion

Remote interviews are only effective when they reflect a candidate’s real skills. As live AI tools, collaboration shortcuts, and proxy methods become more common, traditional interview signals are easier to manipulate.

To keep hiring fair and reliable, teams need to move beyond judging speed or polished answers. Interviews must focus on reasoning, adaptability, and consistent behavior over time. This requires better interview design, sharper follow-ups, and attention to subtle interaction patterns.

When interviews are structured around how candidates think and respond in real time, it becomes much harder to fake ability. The result is fairer evaluations, stronger hires, and greater confidence in remote hiring decisions.

Read more: How Sherlock AI protects your hiring process from interview fraud.