Ask this simple question in interviews to spot AI-coached answers and verify real experience. Learn how to detect fraud and improve hiring accuracy.

Abhishek Kaushik

Dec 26, 2025

AI-coached answers sound fluent, polished, and structured.

But they collapse the moment you ask this one question:

“What changed?”

This question instantly reveals whether the candidate:

Has real, lived experience

Or is reciting an answer generated by ChatGPT or a coaching service

Because real experience contains adaptation, not just information.

Why AI-Coached Answers Sound Convincing

Modern AI can generate:

Perfectly structured frameworks

Confident tone

Clean bullet points

Process sequences that sound senior

But it cannot generate lived adaptation because:

Real-world work contains inconsistencies

Decisions evolve with constraints

Priorities shift mid-project

Tradeoffs are imperfect and contextual

As highlighted in “Artificial Intelligence and the limits of reason” (2025), AI reasoning is ultimately statistical pattern-matching. It cannot step outside its training data to handle real-world unpredictability or novel edge cases.

AI answers are straight lines.

Real experience is messy.

The Question That Breaks the Script

After the candidate gives a polished answer, say:

Thank you. What changed?

Then pause.

Let silence do the work.

Why This Works

Real contributors can describe:

Shifts in requirements

Mistakes they corrected

Blockers they handled

Surprises that forced adaptation

Tradeoffs that were not obvious upfront

AI-sourced answers cannot.

Because the AI is describing:

A textbook solution

Not an actual event

How to Use the Question in Different Interview Types

For Behavioral Interviews

Candidate says:

I led the migration to microservices.

Ask:

Thank you. What changed during the migration that required you to adjust your approach?

If they cannot describe:

New constraints

Resource shifts

Performance surprises

They likely did not lead it.

For System Design

The candidate explains a scaling architecture.

Ask:

Great. At what point did your initial design assumptions stop being true?

This tests:

Real-world constraint handling

Production incident awareness

Contextual reasoning

No AI tutorial includes this.

For Coding Interviews

The candidate produces correct code, but it seems rehearsed.

Ask:

If the input size increased by 100 times, what is the first thing you would need to rethink?

A real developer will:

Think aloud

Describe complexity tradeoffs

Adjust algorithmic choices

AI-coached responses stall or loop.
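As a hypothetical illustration of the rethink this question probes, suppose the candidate's solution checked a list for duplicates by comparing every pair. The task and both function names below are assumptions made for this sketch, not part of any specific interview.

```python
from typing import Sequence


def has_duplicate_quadratic(items: Sequence[int]) -> bool:
    """Compare every pair: O(n^2) time, O(1) extra space."""
    for i in range(len(items)):
        for j in range(i + 1, len(items)):
            if items[i] == items[j]:
                return True
    return False


def has_duplicate_linear(items: Sequence[int]) -> bool:
    """Track seen values in a set: O(n) expected time, O(n) extra space."""
    seen = set()
    for item in items:
        if item in seen:
            return True
        seen.add(item)
    return False
```

With 100 times more input, the pairwise version does roughly 10,000 times more comparisons in the worst case, while the set-based version does roughly 100 times more work at the cost of extra memory. A candidate who owns the code can usually name that tradeoff unprompted.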

The Signal to Look For

A genuine answer shows these indicators:

| Indicator | Meaning |
|---|---|
| Specificity | Shows memory, not script |
| Conflicts or mistakes acknowledged | Shows lived experience |
| Time-based sequencing | Reflects real involvement |
| Can adapt the answer when probed | Shows cognitive ownership |

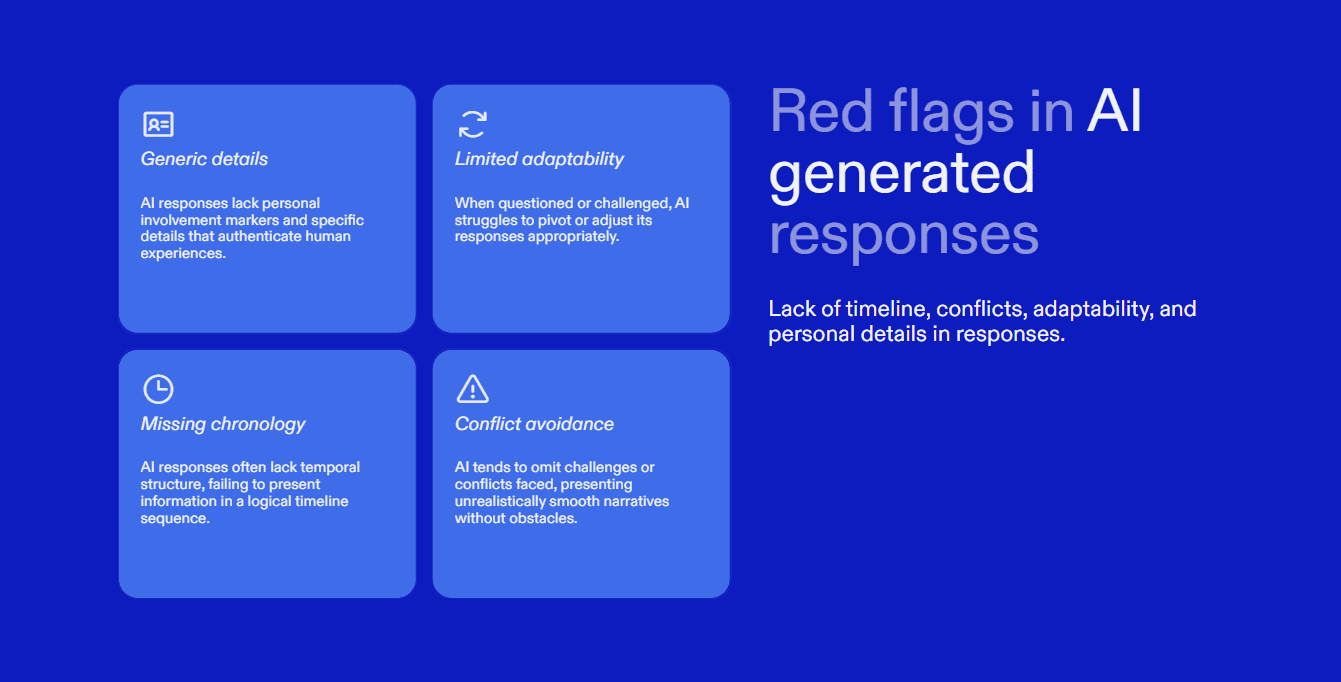

AI answers show:

No timeline

No conflict

No pivot

No personal involvement markers

The Follow-Up Ladder (If Needed)

If they stall, go here:

What was the hardest tradeoff?

If still scripted:

Who pushed back on your approach?

If still generic:

What did you change after seeing the outcome?

Three questions.

AI fails on all three.

The Documentation Template (Audit Safe)

No accusation.

Just signal.
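The template itself is not reproduced in this text. Purely as a hypothetical sketch, a neutral record could log the four indicators from the table above as observations rather than verdicts. The class name, field names, and summary wording are all assumptions, not the author's template.

```python
from dataclasses import dataclass


@dataclass
class WhatChangedNote:
    """Hypothetical interview note: records observed signals, not conclusions."""
    question_asked: str
    specific_details: bool        # Specificity
    acknowledged_conflict: bool   # Conflicts or mistakes acknowledged
    described_timeline: bool      # Time-based sequencing
    adapted_when_probed: bool     # Can adapt the answer when probed
    follow_ups_used: int = 0      # Number of follow-up ladder questions asked

    def signals_observed(self) -> int:
        """Count how many of the four indicators were observed."""
        return sum([
            self.specific_details,
            self.acknowledged_conflict,
            self.described_timeline,
            self.adapted_when_probed,
        ])

    def summary(self) -> str:
        """Return a neutral, factual one-line summary for the interview file."""
        return (
            f"Asked: {self.question_asked!r}. "
            f"Signals observed: {self.signals_observed()} of 4. "
            f"Follow-ups used: {self.follow_ups_used}."
        )
```

The record deliberately has no field for a fraud verdict. It stores what was asked and what was observed, which keeps the note factual if it is reviewed later.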

Conclusion

You do not need:

Gotcha questions

Trick puzzles

Aggressive interrogation

You only need:

What changed?

Because real experience changes.

Scripts do not.

AI does not.

Proxies do not.

This technique:

Detects fraud fairly

Reduces bias risk

Improves hiring accuracy

Works in any industry or role level

It is a simple question with strategic impact.