The definitive guide to modern interview fraud, explaining emerging tactics like deepfakes and AI cheating and what employers must do to stay ahead.

Abhishek Kaushik

Dec 15, 2025

TL;DR

Interview fraud is now organized, tech-assisted, and commercially offered.

Candidates can use AI-generated responses, proxy interviewers, and real-time coaching feeds to appear more skilled than they are.

Deepfake tools now allow face, voice, and identity impersonation.

Recruiters need structured detection, identity verification workflows, and audit-ready documentation, not just “intuition”.

This guide includes real examples, red flags, and the full response playbook.

Remote work normalized video interviews.

AI normalized instant answer generation.

Global contracting markets normalized outsourcing of performance.

The result: Interview fraud has evolved from individual cheating to a professional service industry.

This guide explains:

How fraud works in 2026

Why traditional interviewing no longer detects it

Exactly how to verify identity and skill

How to respond legally, ethically, and professionally

A 2025 survey of 3,000 U.S. hiring managers found that 59% suspected a candidate of using AI tools to misrepresent themselves, and 23% reported losses of more than $50,000 in the past year due to hiring or identity fraud.

The Three Major Types of Interview Fraud in 2026

| Type | Description | Risk Level | Most Common In |
|---|---|---|---|
| AI-Generated Answer Cheating | Candidate uses AI to answer questions in real time | Medium | Entry and mid-level roles |
| Proxy Interviewing | Another person attends and responds on behalf of the candidate | High | Technical contractor pipelines |
| Deepfake Identity Fraud | AI alters face or voice to impersonate an identity | Critical | Roles requiring work eligibility or security clearance |

How AI Is Used to Cheat During Live Interviews

AI can now:

Generate real-time spoken answers

Summarize interview questions instantly

Suggest interview response frameworks

Highlight keywords to include for credibility

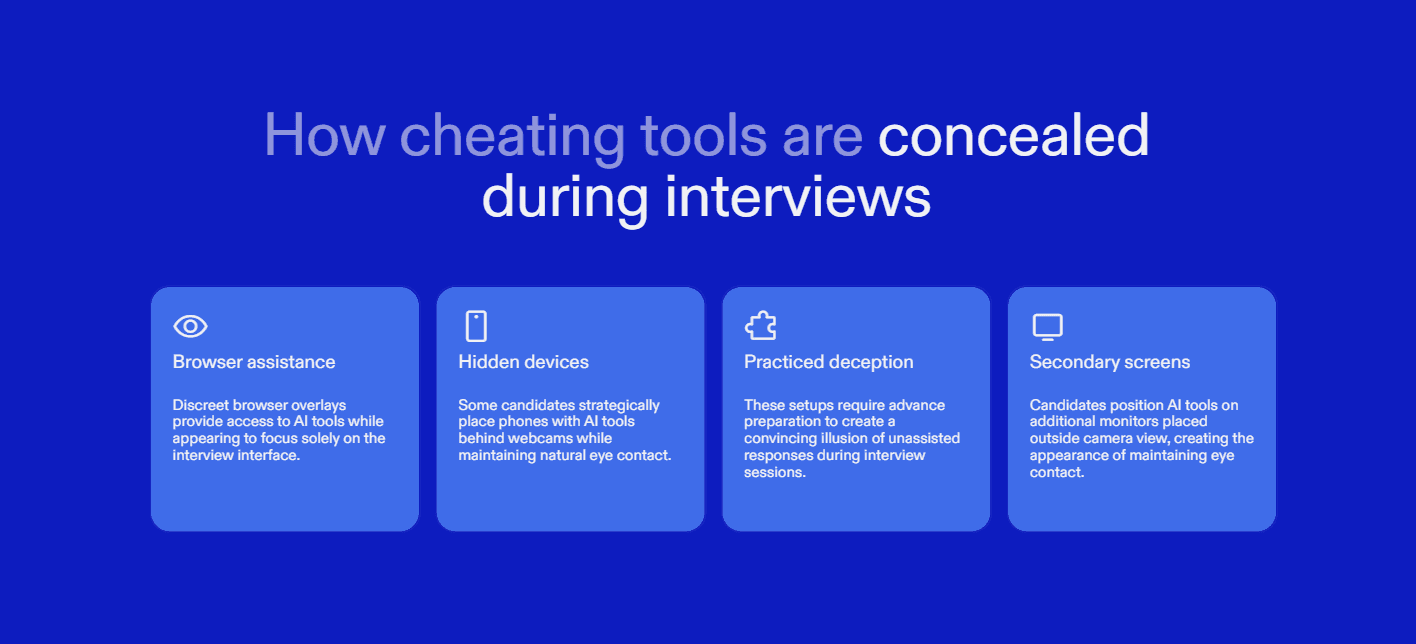

These tools sit:

On a secondary monitor

Inside browser overlays

In a phone held behind the webcam

How Proxy Interview Networks Operate

This is not “one friend helping another”.

This is a marketplace.

Services openly advertise:

“We pass your interview for you”

“Guaranteed placement”

“We handle your technical rounds”

Fraudsters often:

Pass the interview

Hand off work to the real jobholder

Disappear at the first sign of performance evaluation

This is most common in:

Offshore staffing agencies

Contract-to-hire workflows

Rapid backfill roles

Companies without pre-hire identity checks

How Deepfake Identity Fraud Works

Deepfake tools now support:

Real-time face masking

Voice modulation

Synthetic lip-sync correction

This allows:

One person to pretend to be another

Non-authorized individuals to bypass citizenship or background check systems

Why Traditional Interviewing Fails in 2026

Traditional interviews assume:

Visual presence = identity

Confident responses = competence

Consistent tone = authenticity

All three can now be fabricated.

One study found that 74% of hiring managers had encountered AI-assisted applications and 17% reported that candidates had used deepfake technology to alter their video interviews.

Recruiters need behavioral validation, not just conversational evaluation.

Detection Playbook

The Reliable Signals That Indicate Interview Fraud

Visual Indicators

Blurring around jawline during movement

Eye contact misalignment

Light reflection mismatch on glasses

Face appears “too smooth” or textureless

Speech Indicators

Tone is uniform and lacks micro-pauses

Answers delivered too quickly and too perfectly

Sudden change in pace during follow-up questions

Cognitive Indicators

Candidate cannot explain why a decision was made

Examples sound memorized, generic, or anonymous

Performance collapses under scenario-based reasoning
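One way to turn the indicators above into an evidence-based scorecard is to record each observed signal under its category and escalate only when several categories are flagged. The sketch below is a minimal illustration, not a validated detection method: the class name, method names, and the two-category threshold are all assumptions introduced here.

```python
from dataclasses import dataclass, field

# Categories mirror the playbook headings; the key names are hypothetical.
CATEGORIES = ("visual", "speech", "cognitive")


@dataclass
class SignalScorecard:
    """Records which red flags an interviewer observed, grouped by category."""
    observed: dict = field(default_factory=lambda: {c: [] for c in CATEGORIES})

    def note(self, category: str, signal: str) -> None:
        """Add one observed signal under a known category."""
        if category not in self.observed:
            raise ValueError(f"unknown category: {category}")
        self.observed[category].append(signal)

    def categories_flagged(self) -> int:
        """Count the categories that have at least one observed signal."""
        return sum(1 for signals in self.observed.values() if signals)

    def needs_verification(self, min_categories: int = 2) -> bool:
        # Assumed rule: escalate only when flags span several categories,
        # so a single ambiguous cue (such as a poor webcam) is not enough.
        return self.categories_flagged() >= min_categories


card = SignalScorecard()
card.note("visual", "blurring around jawline during movement")
card.note("cognitive", "cannot explain why a decision was made")
print(card.needs_verification())
```

Requiring agreement across categories is a design choice that favors fewer false alarms; a team could reasonably pick a different threshold.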

Live Interview Response Strategy

Step 1: Reset the Interview into a Natural Mode

“Let us slow this question down. I would love to hear how you thought about this situation step by step. Take your time.”

Answers read from an AI tool are hard to sustain as incremental, step-by-step reasoning delivered live.

Step 2: Test Ownership of Experience

“What part of that project did you personally own? What did you decide that others disagreed with?”

Proxies fail this immediately.

Step 3: Introduce Real-Time Scenario Construction

“Imagine you just joined our team tomorrow. Here is a real situation we are in. How would you begin?”

Deepfake + proxy + scripted answers collapse here.

Step 4: Trigger Identity Verification (Neutral Script)

Use this non-accusatory wording:

“Before moving forward, we need to complete a quick identity verification step. This is standard for consistency across candidates.”

No accusation. No confrontation. Just process.
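To keep the wording identical across candidates, the four steps can be stored as an ordered sequence with their scripts attached. This is a rough sketch under stated assumptions: the `Step` names and the `next_step` helper are hypothetical, and only the quoted scripts come from the steps above.

```python
from enum import IntEnum


class Step(IntEnum):
    """The four response steps, in the order they are applied."""
    RESET = 1
    OWNERSHIP = 2
    SCENARIO = 3
    VERIFY_IDENTITY = 4


# Scripts copied from the steps above so every interviewer uses the same wording.
SCRIPTS = {
    Step.RESET: "Let us slow this question down. I would love to hear how you "
                "thought about this situation step by step. Take your time.",
    Step.OWNERSHIP: "What part of that project did you personally own? "
                    "What did you decide that others disagreed with?",
    Step.SCENARIO: "Imagine you just joined our team tomorrow. Here is a real "
                   "situation we are in. How would you begin?",
    Step.VERIFY_IDENTITY: "Before moving forward, we need to complete a quick "
                          "identity verification step. This is standard for "
                          "consistency across candidates.",
}


def next_step(current: Step):
    """Return the step after `current`, or None once the last step is done."""
    return Step(current + 1) if current < Step.VERIFY_IDENTITY else None


step = Step.RESET
while step is not None:
    print(f"Step {step.value}: {SCRIPTS[step]}")
    step = next_step(step)
```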

Email Templates

Follow-Up Email to Candidate

If Fraud Is Confirmed

Short, factual, legally safe, no emotional language.

Internal Documentation Log Template

This protects the company in:

Bias claims

Termination disputes

Vendor audit reviews

Compliance inquiries
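A documentation log is easier to audit when every entry has the same fields. The sketch below shows one possible record shape serialized to JSON. It is an illustration only and does not reproduce the article's own template: the class name, every field name, and the sample values are hypothetical.

```python
import json
from dataclasses import dataclass, field, asdict
from datetime import datetime, timezone


@dataclass
class FraudLogEntry:
    """One audit-log record for an interview where fraud signals were noted."""
    candidate_ref: str       # internal reference rather than a name (assumed convention)
    role: str
    interviewer: str
    observed_signals: list   # factual observations only, no conclusions
    steps_taken: list        # e.g. which response steps were applied
    outcome: str
    # Timestamp is recorded in UTC when the entry is created.
    logged_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def to_json(self) -> str:
        """Serialize the entry for storage in an append-only log."""
        return json.dumps(asdict(self), indent=2)


entry = FraudLogEntry(
    candidate_ref="C-0001",
    role="Backend Engineer",
    interviewer="interviewer-17",
    observed_signals=["eye contact misalignment", "generic project examples"],
    steps_taken=["scenario question", "neutral identity verification requested"],
    outcome="verification pending",
)
print(entry.to_json())
```

Recording observations and actions separately from the outcome keeps the log factual, which matters if it is later reviewed in a dispute or audit.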

A 2025 survey of 3,000 U.S. hiring managers found that 59% suspected a candidate of using AI tools to misrepresent themselves, and 31% had interviewed someone later revealed to be using a fake identity.

Conclusion

Interview fraud in 2026 is not a marginal problem.

It is mainstream, scalable, and commercially professionalized.

But it is not unstoppable.

With structured detection signals, identity verification workflows, evidence-based scorecards, and consistent documentation, organizations can eliminate fraud without reducing candidate dignity.

The future of hiring is not suspicion. It is verified authenticity.