Learn how to prevent AI cheating in hiring by designing better interviews, spotting assisted behavior, and protecting fair, reliable evaluations.

Abhishek Kaushik

Jan 9, 2026

AI use in hiring is not black and white. Not every use of AI should be treated as cheating, and drawing this line clearly is essential for fair evaluation.

Acceptable AI assistance typically happens outside the interview or assessment itself. Candidates may use AI to prepare for interviews, improve their résumé language, or practice explaining their experience. In these cases, AI acts as a learning aid, not a substitute for the candidate’s own thinking.

AI misuse occurs when tools replace the candidate’s reasoning during evaluation. This includes AI generating answers in real time during interviews, writing code during live technical rounds, or producing responses that the candidate cannot explain or adapt.

A report found that about 20% of professionals admit to having used AI during job interviews, and many employers already consider such use problematic because it undermines true skills assessment. At this point, the interview stops measuring the candidate and starts measuring the tool.

Stages in the Hiring Process Vulnerable to AI Misuse

AI misuse can appear at almost every stage of hiring. The risk increases when evaluation relies on polished output instead of real thinking. Understanding how misuse shows up at each stage helps teams interpret signals correctly and design better checks.

1. Online screenings and assessments

Many screening tests are taken remotely and without supervision. This makes them easy to assist with external tools.

How misuse shows up:

Unusually high scores compared to later performance

Fast completion with little variance in answers

Correct answers without clear understanding

Inconsistent results across similar questions

Most screenings focus on correctness, not reasoning. When AI can generate answers instantly, the test stops measuring baseline skill.

Impact on hiring: Screening loses its purpose as a filter. Weak candidates move forward, while strong candidates may not stand out.
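One way to act on the first signal above is to compare each candidate's unsupervised screening score with their score in a later supervised round. The sketch below is illustrative only: the `StageScores` fields, the 0-100 scale, and the 30-point threshold are assumptions chosen for the example, not values from any particular tool. A flag is a prompt for follow-up, not proof of misuse.

```python
from dataclasses import dataclass


@dataclass
class StageScores:
    candidate: str
    screening: float   # unsupervised online test, 0-100 (assumed scale)
    live_round: float  # supervised follow-up round, 0-100 (assumed scale)


def flag_score_gaps(scores, max_drop=30.0):
    """Return candidates whose live score fell more than max_drop below screening."""
    return [s.candidate for s in scores if s.screening - s.live_round > max_drop]


# Hypothetical data for three candidates.
scores = [
    StageScores("A", screening=95, live_round=50),
    StageScores("B", screening=80, live_round=75),
    StageScores("C", screening=60, live_round=70),
]
flagged = flag_score_gaps(scores)
print(flagged)  # ['A']
```

Only candidate A is flagged here, because the 45-point drop exceeds the assumed threshold. In practice the threshold would need tuning against how much scores normally vary between stages.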

2. Take-home assignments

Take-home work is one of the most misused stages. AI can generate complete solutions, documentation, and even explanations.

How misuse shows up:

High-quality submissions with shallow understanding

Clean code or writing that candidates cannot explain

Difficulty answering follow-up questions

Inability to modify or extend the work

Take-home tasks are unsupervised and often predictable. AI performs well when given time and context.

Impact on hiring: Teams treat take-home work as proof of ability. When the work is not the candidate’s own, this creates false confidence and leads to poor on-the-job performance.

3. Live interviews

Live interviews were once considered safe from cheating. That is no longer true.

How misuse shows up:

Delayed responses to simple questions

Polished answers that break under follow-up

Weak explanations of decisions

Trouble adapting when questions change

Some tools can listen to questions and suggest answers in real time. Others assist with coding or explanation in the background.

Impact on hiring: The interview no longer reflects how the candidate thinks. Decision-making, judgment, and adaptability are masked.

4. Trial projects or paid tasks

Trial work is often seen as the closest test of real performance. It is still vulnerable when done asynchronously.

How misuse shows up:

Strong output with little personal reasoning

Generic approaches that lack context

Limited ownership of decisions

Difficulty explaining priorities and trade-offs

When work is done independently, candidates can rely on tools for planning, execution, and refinement.

Impact on hiring: Teams may overestimate readiness and independence. This leads to slower ramp-up and increased management overhead after hiring.

AI misuse does not always mean bad intent. But when multiple stages rely on distorted signals, hiring decisions become unreliable.

Designing Hiring Processes to Reduce AI Advantage

The goal is not to fight AI with rules. The goal is to design interviews and assessments that reward real thinking. When the process measures reasoning, judgment, and adaptability, AI becomes far less useful.

Evaluate reasoning, not just final output

Strong candidates can explain how they think, not just what they produce.

How to do this:

Ask candidates to walk through their approach step by step

Pause them mid-answer and ask why they chose that path

Focus on assumptions, not conclusions

Ask what they would do differently with more time

AI can generate polished answers quickly. A candidate leaning on it struggles when asked to explain personal reasoning, uncertainty, and judgment in real time.

What to watch for:

Clear structure in thinking

Comfort explaining partial answers

Willingness to admit trade-offs or gaps

Use adaptive and dynamic questions

Static questions are easy to prepare for and easy to assist with. Adaptive questions are not.

How to do this:

Change constraints after the candidate starts answering

Introduce new information mid-problem

Ask follow-up questions based on their specific response

Shift from theory to application without warning

AI tools rely on stable prompts. When the conversation changes direction, assisted answers often break down.

What to watch for:

Ability to adjust quickly

Logical consistency under change

Clear prioritization when conditions shift

Focus on decision-making and trade-offs

Real work is rarely about perfect answers. It is about choosing between imperfect options.

How to do this:

Present two competing solutions and ask which they would choose

Ask what they would optimize for and what they would sacrifice

Discuss real constraints like time, cost, or risk

Ask how they would explain the decision to stakeholders

AI often produces balanced, generic responses. It struggles to reflect personal judgment shaped by experience.

What to watch for:

Strong opinions with clear reasoning

Awareness of consequences

Practical, experience-based thinking

Reduce predictability in assessments

Predictable formats are easier to game. Variety creates stronger signals.

How to do this:

Rotate question formats across interviews

Avoid publicly searchable prompts

Mix discussion, problem-solving, and critique

Use partial or incomplete information

AI performs best when patterns are known. Unpredictable structure forces candidates to rely on their own thinking.

What to watch for:

Comfort with ambiguity

Ability to ask clarifying questions

Logical progression without a script

When interviews reward thinking over polish, AI stops being an advantage. Strong candidates stand out more clearly, and hiring decisions become more reliable.

Role of Proctoring and Detection Tools

Even well-designed interviews break down at scale. When teams run dozens of interviews each week, subtle AI use is easy to miss. Monitoring and detection tools exist to surface what human interviewers cannot reliably track in real time.

The goal is not to catch candidates in a single moment. It is to restore trust in interview signals.

Behavioral and pattern-based detection in real interviews

AI assistance rarely looks like obvious cheating. In practice, it shows up as patterns that repeat across questions.

What hiring teams actually see:

Candidates pausing longer than expected on simple clarifications

Answers that suddenly become clearer or more structured after silence

Strong final responses paired with weak explanations

Difficulty continuing when asked to modify an answer

Attention shifting off-screen during key questions

Individually, these moments seem harmless. Across an interview, they form a pattern.

Why humans miss this: Interviewers are focused on listening, evaluating, and keeping the conversation flowing. Tracking timing, consistency, and behavioral shifts manually is unreliable.

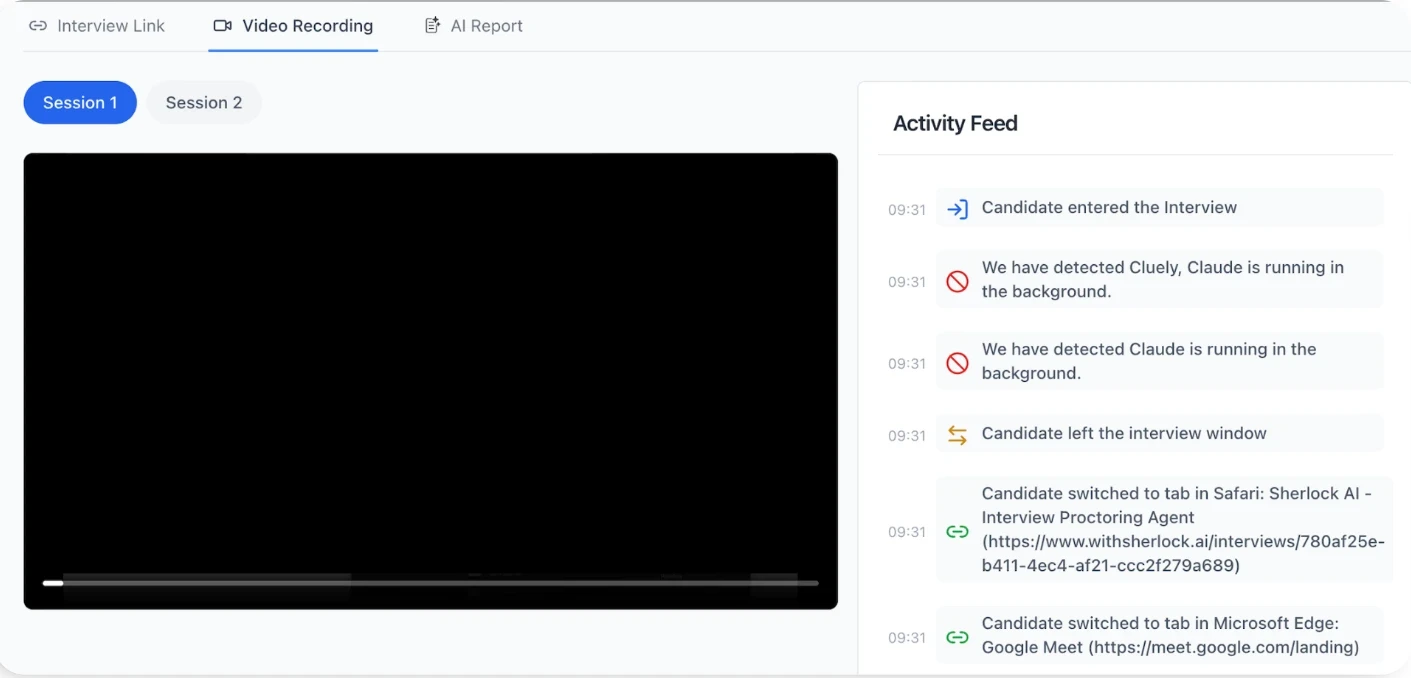

This is where pattern-based systems add value. Tools like Sherlock AI continuously observe timing, response flow, and background activity so interviewers can focus on judgment instead of detection.
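As a rough illustration of what "forming a pattern" can mean, the sketch below counts how many distinct questions each signal appeared on and reports only the signals that repeat. It is not how Sherlock AI or any specific product works. The signal labels, the data shape, and the threshold of three questions are all assumptions made for the example.

```python
from collections import Counter

# Hypothetical signal labels an interviewer or tool might log per question.
observations = [
    {"question": 1, "signals": ["long_pause"]},
    {"question": 2, "signals": []},
    {"question": 3, "signals": ["long_pause", "weak_explanation"]},
    {"question": 4, "signals": ["long_pause", "off_screen_attention"]},
]


def repeated_signals(observations, min_questions=3):
    """Return signals seen on at least min_questions distinct questions."""
    counts = Counter()
    for obs in observations:
        # set() so a signal counts at most once per question
        counts.update(set(obs["signals"]))
    return sorted(name for name, n in counts.items() if n >= min_questions)


patterns = repeated_signals(observations)
print(patterns)  # ['long_pause']
```

A single weak explanation or glance off-screen is ignored, while the pause that recurs on three of four questions surfaces as a pattern worth a closer look.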

Background applications and hidden assistance

One of the hardest challenges today is AI tools that run silently in the background. Common examples include:

Real-time answer generators listening to interview audio

Coding assistants active during live technical rounds

Voice-to-text tools feeding prompts to external systems

Screen-based copilots that never appear on camera

These tools are invisible to interviewers.

Sherlock AI addresses this by detecting suspicious background activity alongside behavioral signals. This combination is critical. Behavior alone can be ambiguous. Background context makes patterns clearer.

Balancing accuracy, privacy, and candidate trust

Monitoring only works when candidates feel respected.

What effective teams do:

Explain monitoring upfront in simple language

Focus on behavior and system activity, not personal data

Avoid recording more than necessary

Apply the same standards to every candidate

Sherlock AI is built around this balance. It avoids intrusive controls and focuses on signals relevant to interview integrity.

The limits of hard blocking in real hiring environments

Many companies try to block tabs, lock browsers, or restrict applications.

What happens in practice:

Candidates use secondary laptops, tablets, or phones

Blocking tools create technical failures during interviews

Senior candidates push back on intrusive controls

False positives damage trust and candidate experience

Hard blocking assumes cheating is visible and preventable with restrictions. In reality, modern AI assistance is subtle and external.

Detection tools should guide interviews, not replace them

Good detection tools do not make hiring decisions. They improve interviewer awareness.

How this works in real interviews:

A flagged pause prompts a follow-up question

A reasoning mismatch leads to deeper probing

Repeated signals trigger a second interviewer’s review

Patterns across rounds influence final confidence

Sherlock AI is designed around this workflow. It highlights moments worth examining while keeping humans in control of decisions. This approach avoids over-reliance on automation and reduces the risk of unfair rejection.
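The idea that flags guide interviewers rather than decide outcomes can be sketched as a simple mapping from signals to suggested human actions. This is a hypothetical illustration, not any product's implementation: the signal names, the `FOLLOW_UPS` table, and the review threshold are assumptions.

```python
# Hypothetical mapping from a flagged signal to an interviewer action.
FOLLOW_UPS = {
    "flagged_pause": "ask a follow-up question",
    "reasoning_mismatch": "probe the reasoning in more depth",
}


def next_steps(signals, review_threshold=3):
    """Suggest human actions for flagged signals; never a hire/reject decision."""
    steps = []
    for signal in signals:
        action = FOLLOW_UPS.get(signal)
        if action and action not in steps:
            steps.append(action)
    # Repeated signals escalate to a second reviewer (assumed threshold).
    if len(signals) >= review_threshold:
        steps.append("request a second interviewer's review")
    return steps


steps = next_steps(["flagged_pause", "reasoning_mismatch", "flagged_pause"])
for step in steps:
    print(step)
```

The function only ever returns suggestions for a person to act on, which mirrors the point that the final decision stays with the interviewer.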

Conclusion

AI is now part of the hiring landscape. The real risk is not that candidates use AI, but that hiring teams mistake assisted performance for real capability.

Preventing AI-driven cheating does not require stricter rules or heavier controls. It requires better signal design. Interviews and assessments must reward reasoning, judgment, and adaptability rather than polished output. When processes are designed this way, most AI misuse loses its advantage on its own.

At the same time, process design alone is not enough. In real hiring environments, subtle AI assistance is easy to miss, especially at scale. Monitoring and detection tools provide the visibility interviewers need to interpret performance accurately and ask better follow-up questions.

The strongest hiring systems combine three things: thoughtful interview design, trained human judgment, and behavioral detection that works quietly in the background. This approach protects fairness for honest candidates and restores confidence in hiring decisions.
